A vessel bend approach to synthesis of optic nerve head region

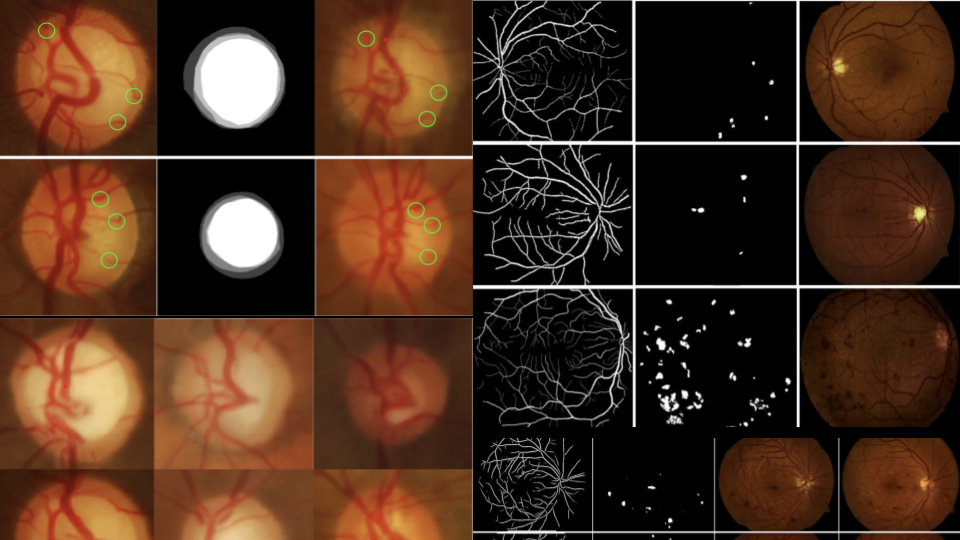

The Optic Disc (OD) and Optic Cup (OC) boundaries play a critical role in the detection of glaucoma. However, very few datasets carry the annotations of both OD and OC that are required for segmentation. Recently, convolutional neural networks (CNNs) have shown significant improvements in segmentation performance, but their full potential is hindered by the lack of a large number of annotated training images. To address this issue, we explore a method to generate synthetic images which can be used to augment the training data. Given the segmentation masks of OD, OC and vessels from arbitrarily different fundus images, the proposed method employs a combination of B-spline registration and a GAN to generate high-quality images in which the vessels bend at the edge of the OC in a realistic manner. In contrast, the existing GAN-based methods for fundus image synthesis fail to capture the local details and vasculature in the Optic Nerve Head (ONH) region. The utility of the proposed method in training deep networks for the challenging problem of OC segmentation is explored, and an improvement in the Dice score from 0.85 to 0.92 is seen with the inclusion of the synthetic images in the training set.
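For reference, the Dice score reported above measures the overlap between a predicted mask and a ground-truth mask as twice the intersection divided by the sum of the two mask sizes. The following is a minimal sketch for binary masks, not the evaluation code used in this work; the function name `dice_score` and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
import numpy as np


def dice_score(pred, target):
    """Dice overlap between two binary masks (hypothetical helper).

    Dice = 2 * |pred AND target| / (|pred| + |target|).
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return float(2.0 * intersection / total)
```

A score of 1.0 means the predicted OC mask coincides exactly with the annotation, and 0.0 means the two masks do not overlap at all.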

A direct approach to synthesis of optic nerve head region

Earlier, we explored a method for generating new OC mask/image pairs that leveraged the domain-specific property of vessels bending at the edge of the OC. That method gains anatomical accuracy at the cost of flexibility. Here, we implement G2-Resnet to synthesise a fundus image together with a binary Optic Cup mask. This method thus takes in just the Optic Disc (OD) mask and the vessel mask and synthesises both a fundus image and an OC mask. We compare the generated images, as well as the reliability of the generated OC, against the vessel bend based method. We also verify whether the generated OC mask depends more on the input vessel mask or on the input OD mask. Our assumption is that the generated OC should depend more strongly on the input vessels than on the input OD. While this method is more flexible and might perform better with a larger dataset, it is clear that for smaller datasets and constrained problems, integrating prior knowledge can be a more reliable solution.

Realistic drusen synthesis in optical coherence tomography

Image-to-image translation has been used for a variety of synthesis tasks in medical imaging. However, current state-of-the-art methods struggle to preserve edge detail, which makes their application to noisy modalities such as Optical Coherence Tomography (OCT) challenging. We propose a method to synthesise lesions (Drusen) in OCT slices from available normal slices. We incorporate self-attention in a CycleGAN and utilise an ESSIM-based loss function to synthesise Drusen in a morphologically realistic manner. An FID-based assessment of the synthesised results shows that the proposed method outperforms current state-of-the-art methods.
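The FID used for assessment is the Fréchet distance between two Gaussians fitted to feature vectors of real and synthesised images: the squared distance between the means plus a trace term over the covariances. The sketch below shows only that distance computation. It assumes the feature vectors have already been extracted (FID conventionally uses Inception-v3 activations, a step omitted here), and the function name `frechet_distance` is hypothetical rather than taken from this work.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Frechet distance between Gaussians fitted to two feature sets.

    feats_a, feats_b: arrays of shape (n_samples, n_features).
    Feature extraction is assumed to have happened elsewhere.
    """
    feats_a = np.asarray(feats_a, dtype=np.float64)
    feats_b = np.asarray(feats_b, dtype=np.float64)
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(feats_b, rowvar=False))

    # Tr((cov_a cov_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative for
    # positive semi-definite covariances (clipped against round-off).
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b)
                 - 2.0 * trace_sqrt)
```

Lower values indicate that the synthesised feature distribution lies closer to the real one; two identical feature sets give a distance of zero up to round-off.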

Drusen are yellow deposits under the retina, made up of lipids and proteins. Drusen likely do not cause age-related macular degeneration (AMD), but having Drusen increases a person’s risk of developing AMD. Optical coherence tomography (OCT) is a useful tool for the visualization of retinal layers. This is especially helpful for the visualization of Drusen, a retinal abnormality seen in patients with AMD. Drusen can be observed as folds in the retinal pigment epithelium (RPE) layer in OCT.

Since OCT is a 3D modality, synthesis of a full OCT volume is a very challenging task, so we focus on 2D B-scans. We introduce Drusen into a normal OCT B-scan. We show that existing methods fail to capture fine details, such as Drusen affecting not just the RPE layer but also the photoreceptor layer and the ONL and OPL layers above it. We show that an edge-based loss function and attention-based generation help resolve these issues, and we compare our model to other attention-based image-to-image translation methods.
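To illustrate what an edge-based loss penalises, the sketch below compares Sobel gradient magnitudes of a generated B-scan and a reference B-scan with a mean absolute difference. This is a simplified stand-in chosen for illustration, not the ESSIM-based loss used in this work; the helper names `filter3x3`, `edge_map` and `edge_l1_loss` are hypothetical.

```python
import numpy as np

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def filter3x3(img, kernel):
    """Valid-mode 3x3 cross-correlation of a 2D image with a kernel."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * img[i:i + h - 2, j:j + w - 2]
    return out


def edge_map(img):
    """Sobel gradient magnitude of a 2D grayscale image."""
    img = np.asarray(img, dtype=np.float64)
    return np.hypot(filter3x3(img, SOBEL_X), filter3x3(img, SOBEL_Y))


def edge_l1_loss(generated, reference):
    """Mean absolute difference between the two edge maps."""
    return float(np.abs(edge_map(generated) - edge_map(reference)).mean())
```

Because the loss acts on gradients, a uniform brightness shift between the two images costs nothing, while a missing or blurred layer boundary (for example at the RPE) is penalised directly.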