More Parameters? No Thanks!

Zeeshan Khan, Kartheek Akella, Vinay Namboodiri, C.V. Jawahar

CVIT, IIIT-H; CVIT, IIIT-H; Univ. of Bath; CVIT, IIIT-H

[Code] [Presentation Video] [TED Dataset]

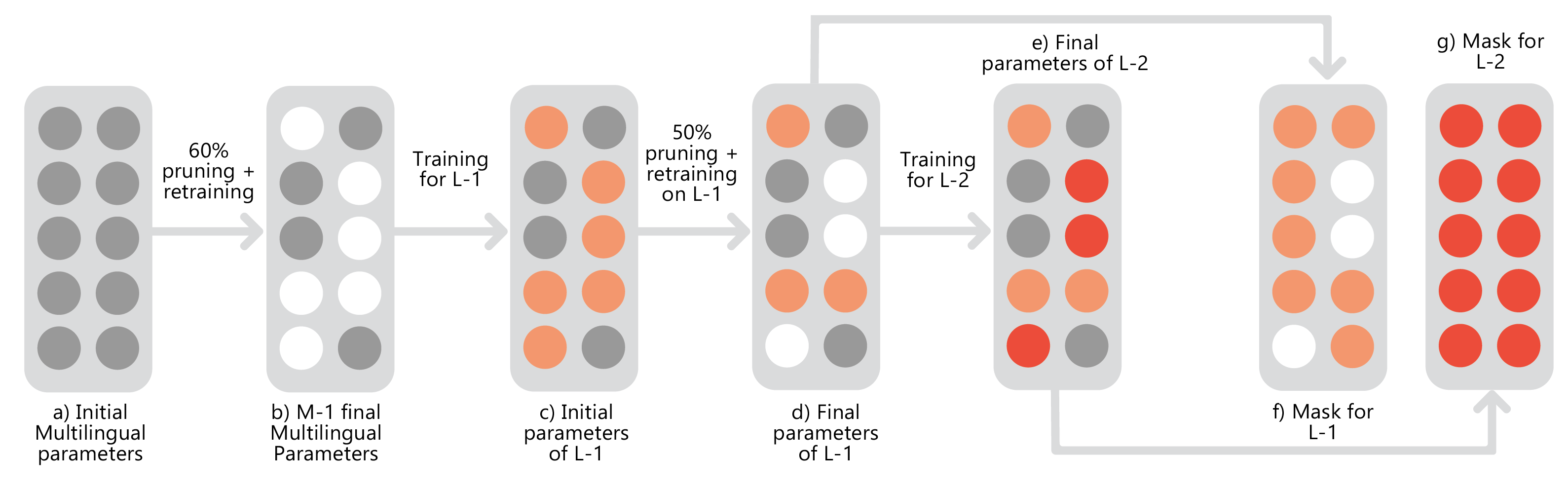

Illustration of the evolution of model parameters. (a) shows the multilingual parameters in grey. Through 60% pruning and retraining, we arrive at (b), where white represents the free weights with value 0. The surviving weights in grey are fixed for the rest of the method. Next, we train the free parameters on the first bilingual pair (L-1) and arrive at (c), which shows the initial L-1 parameters in orange; these share weights with the previously trained multilingual parameters in grey. Again, with 50% pruning and retraining of the current L-1-specific weights in orange, we obtain the final L-1 parameters shown in (d) and extract the final mask for L-1 in (f). We repeat the same procedure for all the bilingual pairs and extract a mask for each pair.
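The procedure in the caption can be read as bookkeeping over binary masks on a single weight vector. The sketch below is a toy illustration under stated assumptions, not the authors' implementation: pruning is assumed to be by weight magnitude (the caption does not name the criterion), `fake_train` is a hypothetical placeholder that merely perturbs the trainable weights instead of optimizing a translation loss, and each pair's mask is assumed to hold the shared multilingual weights plus that pair's own weights. All function names are hypothetical.

```python
import random


def prune_by_magnitude(weights, candidates, fraction):
    """Keep the largest-magnitude weights among `candidates`, dropping `fraction` of them.
    Magnitude pruning is an assumption made for this sketch."""
    ranked = sorted(candidates, key=lambda i: abs(weights[i]))
    n_prune = int(round(fraction * len(ranked)))
    return set(ranked[n_prune:])


def fake_train(weights, trainable, rng):
    """Hypothetical placeholder for (re)training: only indices in `trainable` change."""
    for i in trainable:
        weights[i] += rng.uniform(-0.5, 0.5)


def iterative_prune_adapt(num_params, pairs, ml_prune=0.6, pair_prune=0.5, seed=0):
    rng = random.Random(seed)
    # (a) a trained multilingual model, stood in for by random weights
    weights = [rng.uniform(-1.0, 1.0) for _ in range(num_params)]
    all_idx = set(range(num_params))

    # (b) prune the multilingual weights, zero the pruned ones, retrain the survivors;
    # the survivors are then frozen for the rest of the procedure
    multilingual = prune_by_magnitude(weights, all_idx, ml_prune)
    for i in all_idx - multilingual:
        weights[i] = 0.0
    fake_train(weights, multilingual, rng)

    reserved = set(multilingual)  # weights that later pairs may not update
    masks = {}
    for pair in pairs:
        free = all_idx - reserved
        # (c) train the free (zero-valued) weights on this bilingual pair
        fake_train(weights, free, rng)
        # (d) prune the pair-specific weights, zero the pruned ones, retrain the survivors
        kept = prune_by_magnitude(weights, free, pair_prune)
        for i in free - kept:
            weights[i] = 0.0
        fake_train(weights, kept, rng)
        # mask = shared multilingual weights + this pair's own weights (assumption)
        masks[pair] = multilingual | kept
        reserved |= kept
    return weights, multilingual, masks


def masked(weights, mask):
    """Weights seen by one pair at inference: everything outside its mask is zeroed."""
    return [w if i in mask else 0.0 for i, w in enumerate(weights)]


weights, shared, masks = iterative_prune_adapt(100, ["L-1", "L-2"])
print(len(shared), len(masks["L-1"]), len(masks["L-2"]))  # 40 70 55
```

With 100 parameters, 60% pruning leaves 40 multilingual weights, L-1 keeps 30 of the 60 freed weights, and L-2 keeps 15 of the remaining 30. Every pair reads from the same weight vector through its own mask, which is why this style of adaptation adds no adapter parameters.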

Abstract

This work studies the long-standing problems of model capacity and negative interference in multilingual neural machine translation (MNMT). Using network pruning techniques, we observe that pruning 50-70% of the parameters of a trained MNMT model results in only a 0.29-1.98 drop in BLEU score, suggesting that large redundancies exist even in MNMT models. These observations motivate us to use the redundant parameters to counter the interference problem efficiently. We propose a novel adaptation strategy in which we iteratively prune and retrain the redundant parameters of an MNMT model to improve bilingual representations while retaining multilinguality. Negative interference severely affects high-resource languages, and our method alleviates it without any additional adapter modules. Hence, we call it a parameter-free adaptation strategy, paving the way for the efficient adaptation of MNMT. We demonstrate the effectiveness of our method on a 9-language MNMT model trained on TED talks and report an average improvement of +1.36 BLEU on high-resource pairs. Code will be released here.

Paper

Official Presentation

Our presentation at Findings of ACL 2021 is available on YouTube.

Contact

- Zeeshan Khan

- Kartheek Akella
