Motion Trajectory Based Video Retrieval

Introduction

[Figure: Original Video (left) and Query (right)]

A sample video taken from the UCF Sports Action dataset. The content of the video is difficult to express in words, and descriptions will vary from person to person. On the right-hand side is a sample online user sketch that can be used to retrieve the video.

Content-based video retrieval is an active area in Computer Vision. The most common retrieval strategies are query by text (YouTube, Vimeo, etc.) and query by example video or image (Video Google). When the query is text, most current systems search the tags and metadata associated with the video. A problem with this approach is that tags and metadata need not reflect the real content of the video and can be misleading. Moreover, queries are often abstract and lengthy, for example, "a particular diving style in swimming where the swimmer does three somersaults before diving". The other method, the query-by-example paradigm, is limited by the absence of an example at hand at the right time.

Instead, certain videos can be identified by their unique motion trajectories. Examples include the query mentioned above, or queries such as "the first strike in carrom where three or more carrom men or disks go to pockets" or "all red cars which came straight from the North and then took a left turn". Clearly, queries like these describe the actual content of the video, which is unlikely to be found in the tags and metadata. Under these circumstances, queries can be framed using sketches. A basic sketch containing the object and its motion patterns should suffice to describe the actual content of the video. Sketches can be offline (images) or online (temporal data collected using a tablet).

In this project we are trying to build a sketch-based video retrieval system using online sketches as queries.

Challenges

Although sketch-based search appears to be a very intuitive way to depict the content of a video, it suffers from perceptual variability. In simple words, multiple users perceive the same motion in different ways. The variability shows up in spatio-temporal properties such as shape, direction, scale and speed. The following figures illustrate the problem.

[Figure: Original Video, User 1, User 2, User 3]

A sample sinusoidal motion and its three different user interpretations.

If we try to match these different representations in 2D Euclidean space, they will not match, because quantitatively they are different. Qualitatively, however, they are similar. We therefore have to project these sketches into a space where they map to similar representations.
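
To make the quantitative versus qualitative distinction concrete, here is a minimal sketch in Python. It is only an illustration and not the feature set used in this project: the 8-direction quantization, the function names and the toy trajectories are all assumptions introduced here. Three hypothetical users draw the same zig-zag at different scales and sampling densities; the point-wise Euclidean distance is large, yet the collapsed direction sequences are identical.

```python
import math

def direction_codes(points, bins=8):
    """Quantize each step of a trajectory into one of `bins` directions (assumed scheme)."""
    codes = []
    step = 2 * math.pi / bins
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        angle = math.atan2(y1 - y0, x1 - x0) % (2 * math.pi)
        codes.append(int(round(angle / step)) % bins)
    return codes

def collapse_runs(codes):
    """Drop consecutive repeats so drawing speed and sampling density do not matter."""
    return [c for i, c in enumerate(codes) if i == 0 or c != codes[i - 1]]

def mean_euclidean(a, b):
    """Average point-wise distance between two equally long trajectories."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)

# Toy data (hypothetical): the same zig-zag drawn by three users.
user1 = [(0, 0), (1, 1), (2, 0), (3, 1), (4, 0)]
user2 = [(0, 0), (5, 5), (10, 0), (15, 5), (20, 0)]            # 5x larger
user3 = [(0, 0), (0.5, 0.5), (1, 1), (1.5, 0.5), (2, 0),
         (2.5, 0.5), (3, 1), (3.5, 0.5), (4, 0)]               # sampled twice as densely

print(mean_euclidean(user1, user2))                # large: the raw coordinates disagree
for user in (user1, user2, user3):
    print(collapse_runs(direction_codes(user)))    # same qualitative code for every user
```

Collapsing runs discards speed information on purpose; a representation that should remain sensitive to speed would keep the run lengths instead.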

Another set of challenges involves extracting robust trajectories from unconstrained videos. Real-world videos taken with handheld or mobile cameras contain camera motion and blur. In addition, dynamic backgrounds, illuminated surfaces, and non-separable foreground and background are very common. Fast-moving objects also pose serious challenges to tracking. Tracking in unconstrained videos remains a very active problem in Computer Vision.

Contribution

We have defined and extracted features from the user sketches and videos that give us a qualitative understanding of the trajectories. We extract four different types of features. We try to capture the approximate shape, order and direction of the trajectories and then combine them using a multilevel retrieval strategy. Our multilevel retrieval strategy gives us three advantages.

- Firstly, it lets us combine the effect of multiple feature vectors of the same trajectory. Each feature vector captures a different aspect of the motion, which is difficult to do with a single representation.

- Secondly, temporal data suffers from the problem of unequal-length feature vectors. Our method handles this issue intelligently.

- Thirdly, our filters are arranged in increasing order of complexity and hence, like a cascade, they reduce the search space at each stage.
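
The unequal-length problem mentioned in the list above can be illustrated with a small example. This page does not describe how the project compares sequences of different lengths, so the following is only a stand-in: it uses the standard Levenshtein edit distance on symbol sequences, with a length normalization that is our own assumption.

```python
def edit_distance(a, b):
    """Levenshtein distance between two symbol sequences of any lengths."""
    prev = list(range(len(b) + 1))
    for i, sa in enumerate(a, start=1):
        curr = [i]
        for j, sb in enumerate(b, start=1):
            cost = 0 if sa == sb else 1
            curr.append(min(prev[j] + 1,          # delete sa
                            curr[j - 1] + 1,      # insert sb
                            prev[j - 1] + cost))  # substitute sa with sb
        prev = curr
    return prev[-1]

def normalized_distance(a, b):
    """Scale the distance to [0, 1] by the longer length (assumed normalization)."""
    longest = max(len(a), len(b))
    return edit_distance(a, b) / longest if longest else 0.0

# Hypothetical direction sequences: a 4-symbol sketch against a 3-symbol video trajectory.
sketch_codes = [1, 7, 1, 7]
video_codes = [1, 7, 7]
print(edit_distance(sketch_codes, video_codes))
print(normalized_distance(sketch_codes, video_codes))
```

Dynamic time warping would be another common choice when the features are real-valued rather than symbolic.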

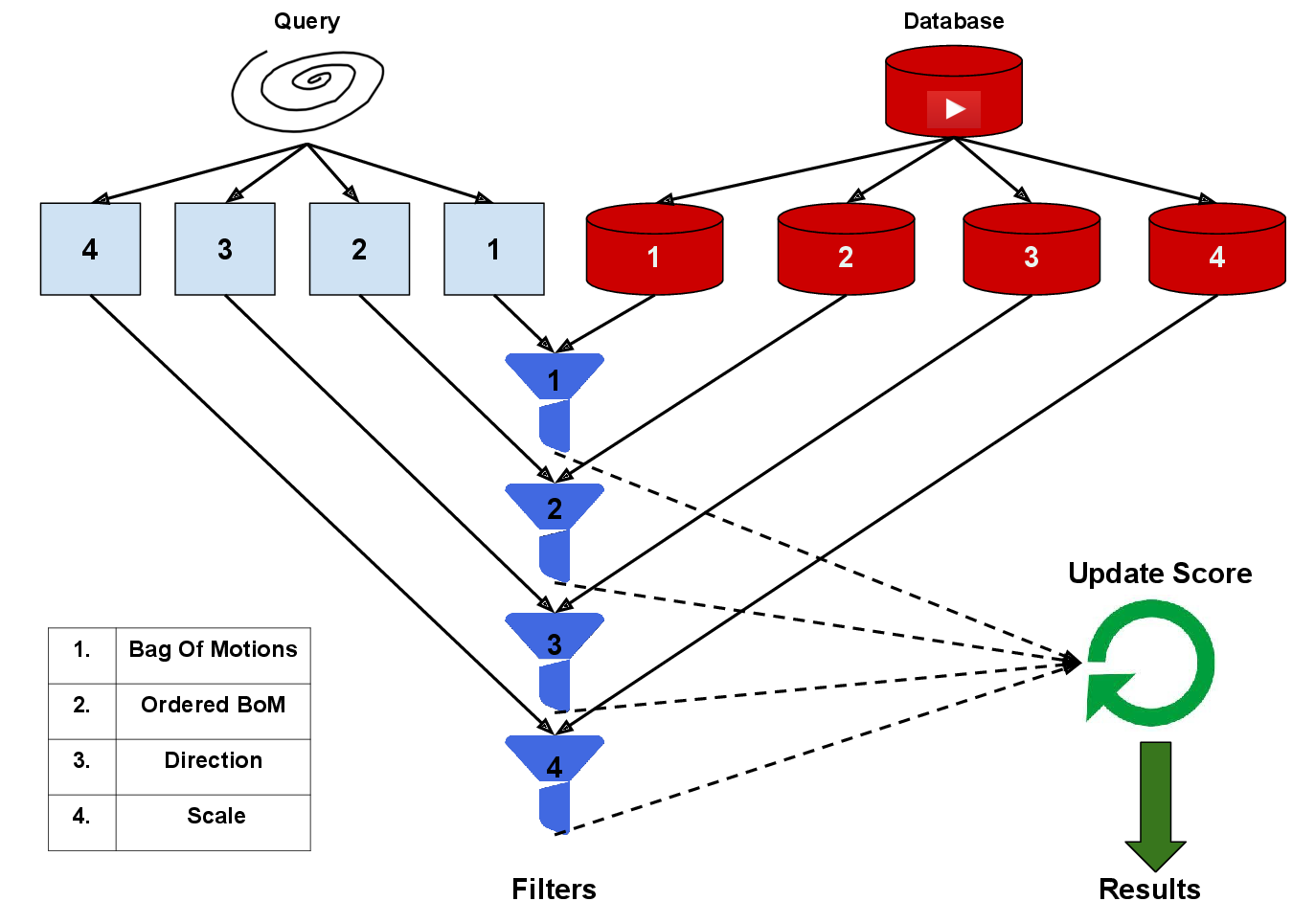

The figure above explains our retrieval algorithm. There are four different representations for the query sketch and the video. They are compared and matched at four different filters. The score is updated at each filter, and the updated score determines the final outcome.
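
A cascade of this kind can be sketched in a few lines of Python. The sketch below is an illustration under stated assumptions, not the project's implementation: the additive score update, the `keep_ratio` pruning rule, the two toy filters and the representation names `length` and `codes` are all hypothetical, and only two filters are shown instead of four to keep the example short.

```python
def cascade_retrieve(query, database, filters, keep_ratio=0.5, top_k=3):
    """Rank videos by passing candidates through filters ordered from cheap to costly.

    query:    dict mapping representation name -> query feature
    database: dict mapping video id -> dict with the same representation names
    filters:  list of (representation name, distance function), cheapest first
    """
    scores = {vid: 0.0 for vid in database}
    for name, distance in filters:
        # Update the running score of every surviving candidate.
        for vid in scores:
            scores[vid] += distance(query[name], database[vid][name])
        # Keep only the best candidates for the next, more expensive filter.
        ranked = sorted(scores, key=scores.get)
        keep = max(top_k, int(len(ranked) * keep_ratio))
        scores = {vid: scores[vid] for vid in ranked[:keep]}
    return sorted(scores, key=scores.get)[:top_k]

# Toy filters (hypothetical): a cheap length check, then a positional code comparison.
filters = [
    ("length", lambda q, v: abs(q - v)),
    ("codes", lambda q, v: sum(a != b for a, b in zip(q, v)) + abs(len(q) - len(v))),
]
query = {"length": 4, "codes": [1, 7, 1, 7]}
database = {
    "zigzag": {"length": 4, "codes": [1, 7, 1, 7]},
    "line":   {"length": 1, "codes": [0]},
    "arc":    {"length": 3, "codes": [1, 0, 7]},
    "spiral": {"length": 9, "codes": [0, 2, 4, 6, 0, 2, 4, 6, 0]},
}
result = cascade_retrieve(query, database, filters, keep_ratio=0.5, top_k=2)
print(result)
```

Because the second filter only runs on candidates that survived the first, the more expensive comparison is evaluated on half of the toy database here.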

Currently, we are focusing on extracting robust trajectories from unconstrained videos by improving some of the state-of-the-art algorithms.

For a detailed description of the features used, please refer to our publication:

Koustav Ghosal, Anoop M. Namboodiri, "A Sketch-Based Approach to Video Retrieval using Qualitative Features," Proceedings of the Ninth Indian Conference on Computer Vision, Graphics and Image Processing, 14-17 Dec 2014, Bangalore, India.

Dataset

To validate our algorithm, we developed a dataset containing:

- A set of 100 Pool videos. Each video has been extracted from multiple International Pool Championship matches. All of them are top-view HD videos.

- A set of 100 Synthetic Videos. Each synthetic video represents a particular type of motion seen in real-world scenarios.

Our datasets can be downloaded from the following links:

Please mail us at

Results

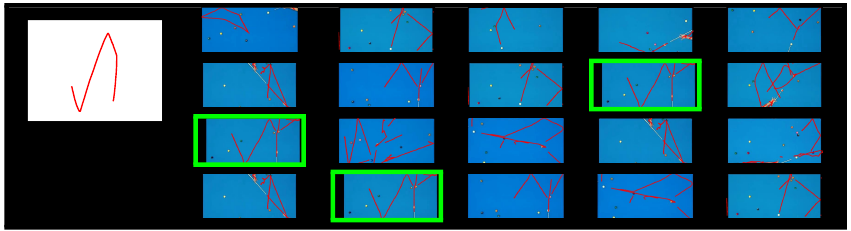

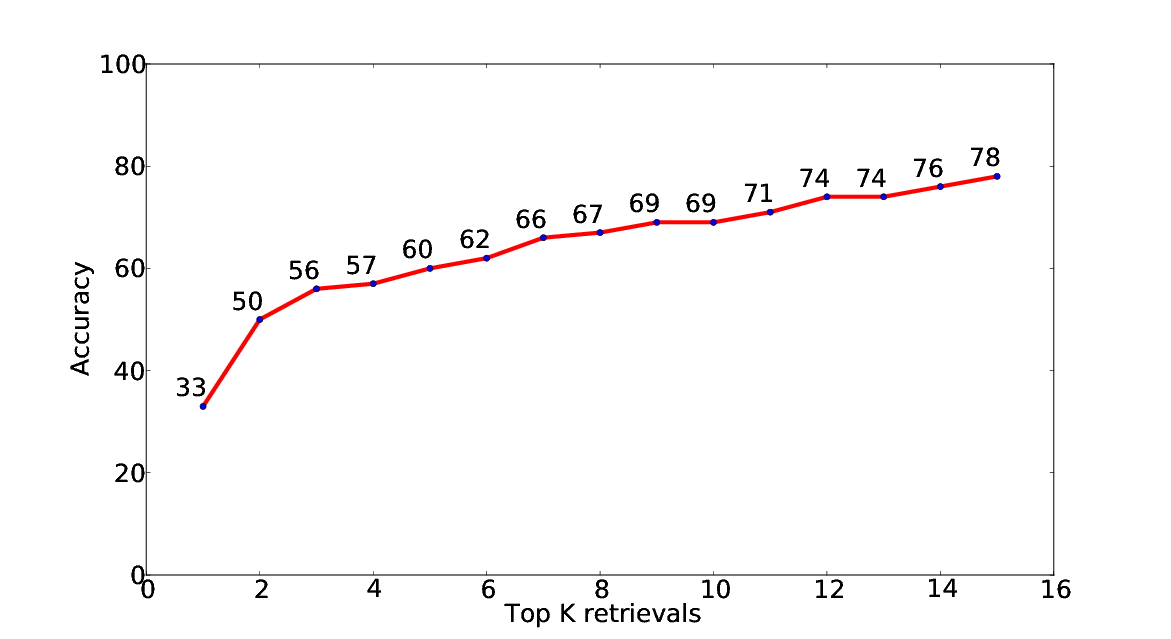

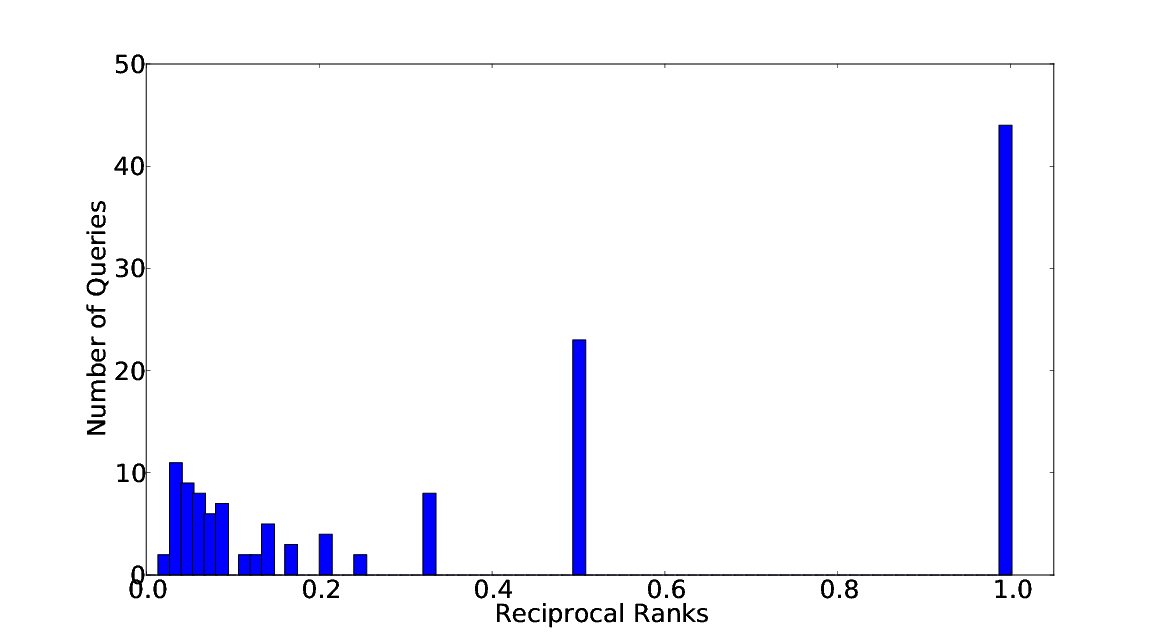


Related Publications

Koustav Ghosal, Anoop M. Namboodiri, "A Sketch-Based Approach to Video Retrieval using Qualitative Features," Proceedings of the Ninth Indian Conference on Computer Vision, Graphics and Image Processing, 14-17 Dec 2014, Bangalore, India. [PDF]