Extreme-scale Talking-Face Video Upsampling with Audio-Visual Priors

Sindhu B Hegde* , Rudrabha Mukhopadhyay*, Vinay Namboodiri and C.V. Jawahar

IIIT Hyderabad University of Oxford Univ. of Bath

ACM-MM, 2022

[ Code ] | [ Paper ] | [ Demo Video ]

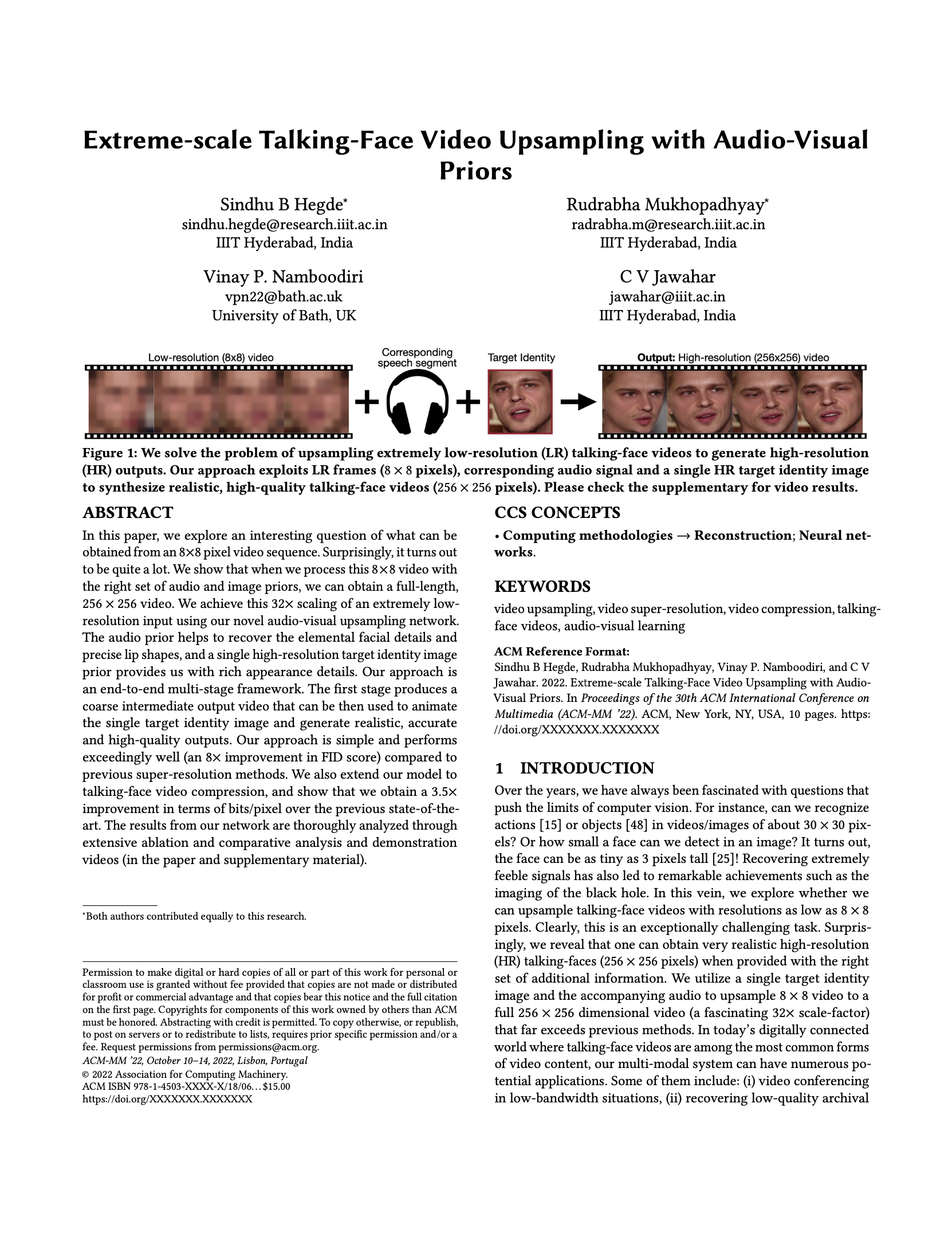

We solve the problem of upsampling extremely low-resolution (LR) talking-face videos to generate high-resolution (HR) outputs. Our approach exploits LR frames (8x8 pixels), corresponding audio signal and a single HR target identity image to synthesize realistic, high-quality talking-face videos (256x256 pixels).

Abstract

In this paper, we explore an interesting question of what can be obtained from an 8×8 pixel video sequence. Surprisingly, it turns out to be quite a lot. We show that when we process this 8x8 video with the right set of audio and image priors, we can obtain a full-length, 256x256 video. We achieve this 32x scaling of an extremely low-resolution input using our novel audio-visual upsampling network. The audio prior helps to recover the elemental facial details and precise lip shapes, and a single high-resolution target identity image prior provides us with rich appearance details. Our approach is an end-to-end multi-stage framework. The first stage produces a coarse intermediate output video that can be then used to animate the single target identity image and generate realistic, accurate and high-quality outputs. Our approach is simple and performs exceedingly well (an 8× improvement in FID score) compared to previous super-resolution methods. We also extend our model to talking-face video compression, and show that we obtain a 3.5x improvement in terms of bits/pixel over the previous state-of-the-art. The results from our network are thoroughly analyzed through extensive ablation and comparative analysis and demonstration videos (in the paper and supplementary material).

Paper

Demo

--- COMING SOON ---

Contact

- Sindhu Hegde -

This email address is being protected from spambots. You need JavaScript enabled to view it. - Rudrabha Mukhopadhyay -

This email address is being protected from spambots. You need JavaScript enabled to view it.