TexTAR – Textual Attribute Recognition in Multi-domain and Multi-lingual Document Images

[Paper] [Code & Dataset]

Abstract

Recognising textual attributes such as bold, italic, underline, and strikeout is essential for understanding text semantics, structure and visual presentation. Existing methods struggle with computational efficiency or adaptability in noisy, multilingual settings. To address this, we introduce TexTAR, a multi-task, context-aware Transformer for Textual Attribute Recognition (TAR). Our data-selection pipeline enhances context awareness, and our architecture employs a 2-D RoPE mechanism to incorporate spatial context for more accurate predictions. We also present MMTAD, a diverse multilingual dataset annotated with text attributes across real-world documents. TexTAR achieves state-of-the-art performance in extensive evaluations.

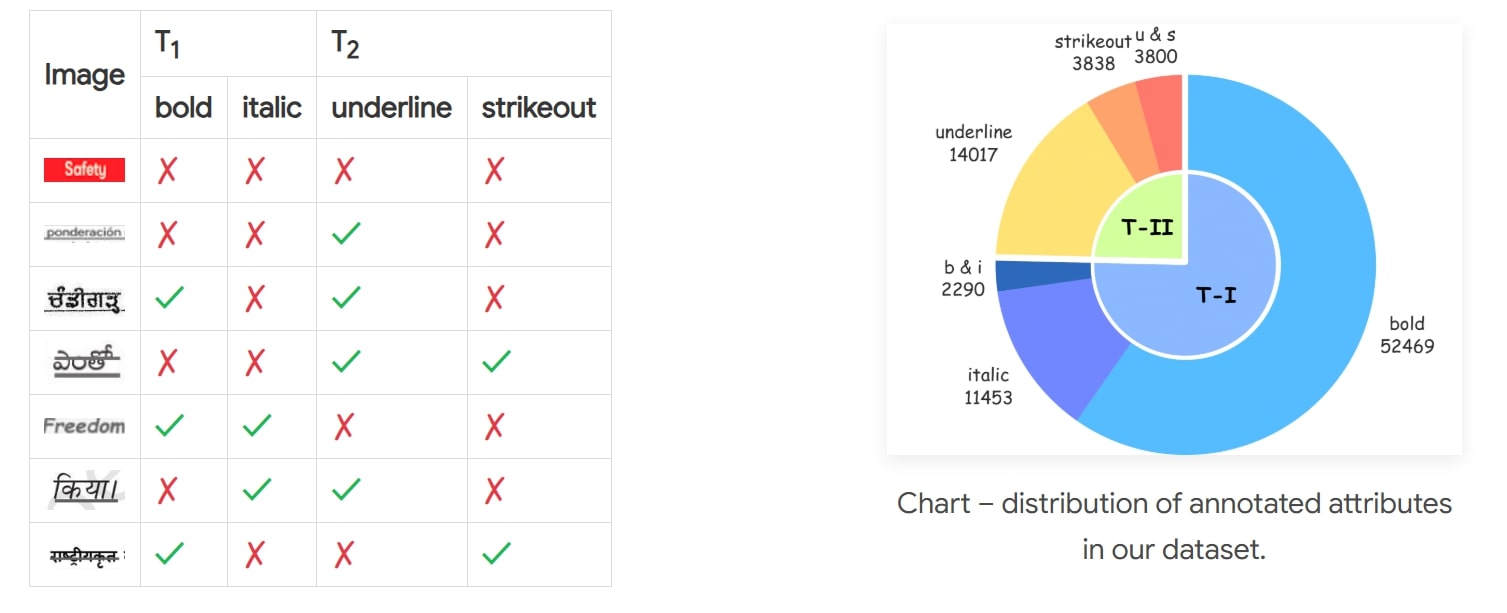

Textual Attributes in the Dataset

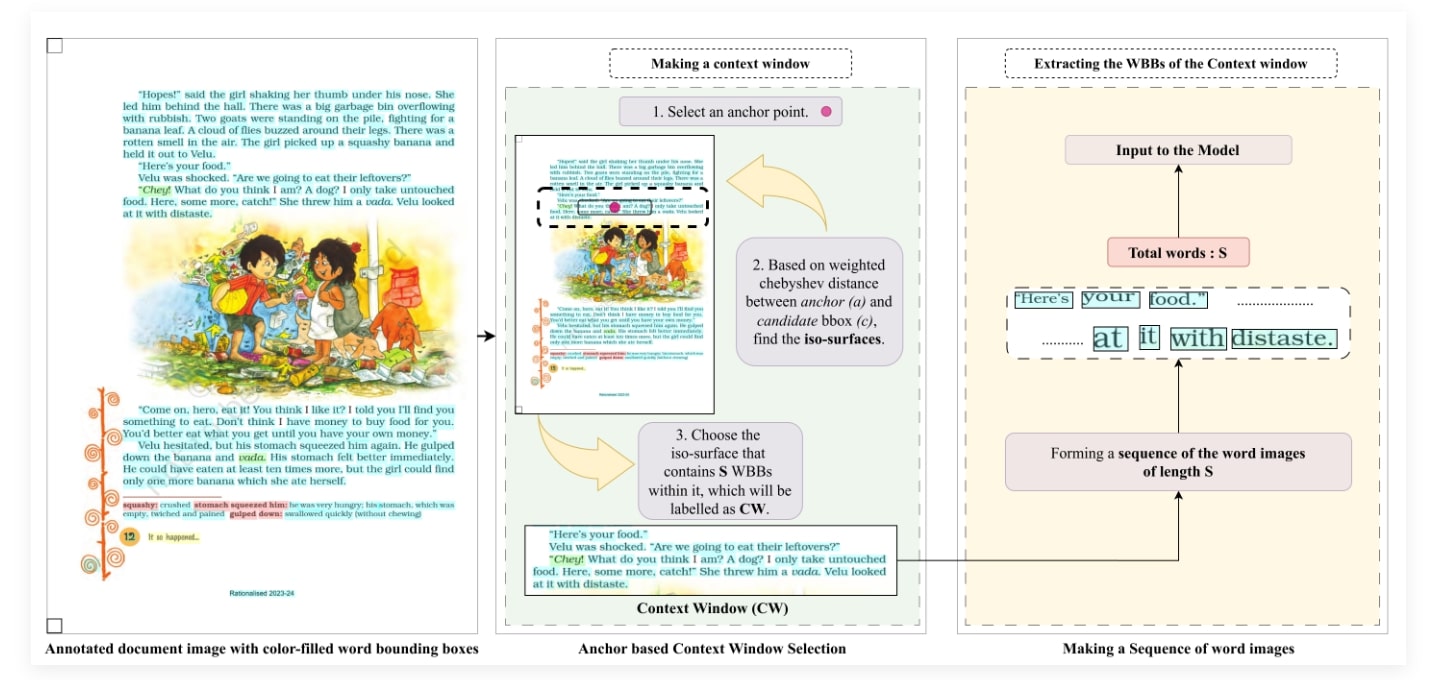

Data-selection Pipeline

Model Architecture

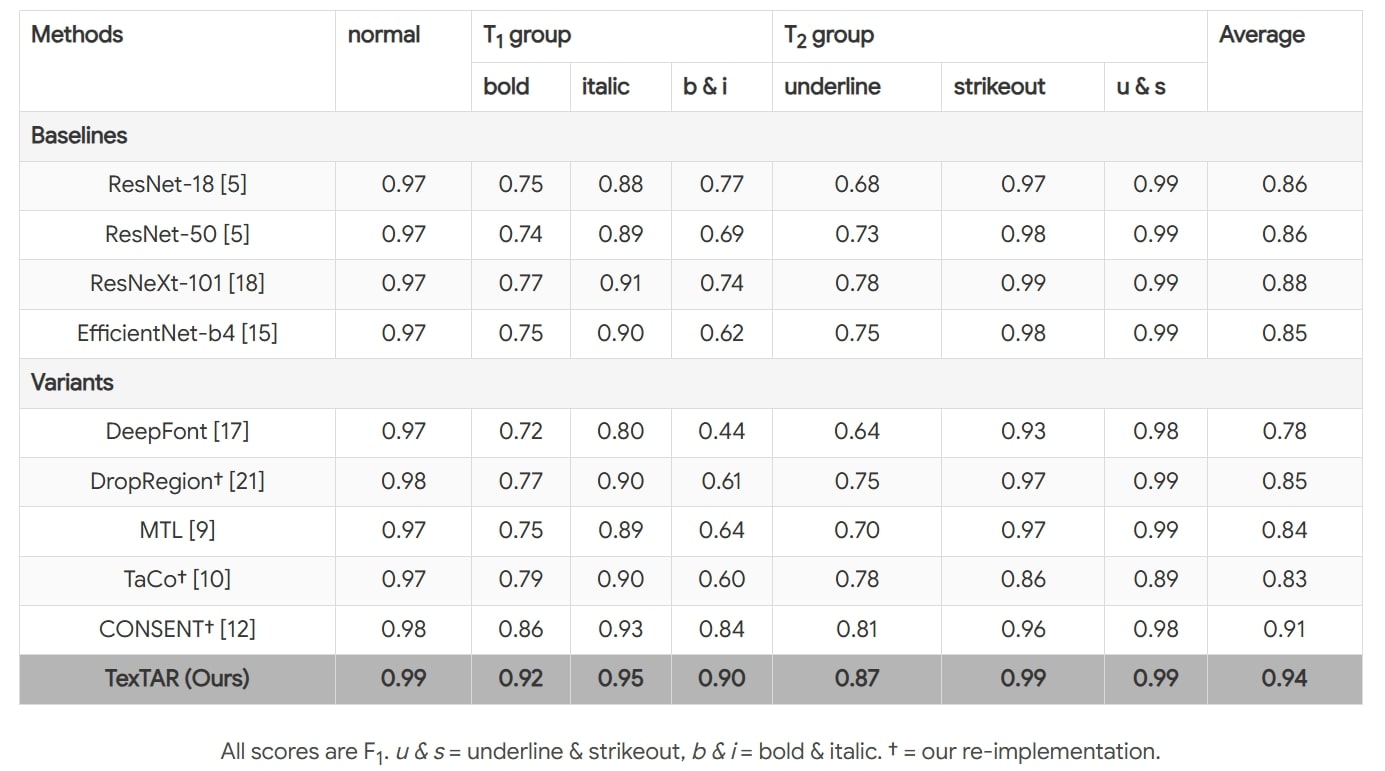
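The abstract mentions a 2-D RoPE mechanism for incorporating spatial context. This page does not spell out the exact formulation, so the following is only a minimal sketch of one common "axial" variant of 2-D rotary position embeddings, not necessarily the one used in TexTAR. It assumes that half of the feature channels are rotated by a horizontal position and the other half by a vertical position. The function names (`rope_1d`, `rope_2d`, `dot`), the `base` value of 10000, and the use of integer grid positions are all illustrative assumptions.

```python
import math


def rope_1d(vec, pos, base=10000.0):
    """Rotate consecutive channel pairs of `vec` by angles proportional to `pos`."""
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("channel count must be even")
    out = []
    for i in range(0, d, 2):
        # Frequency decays geometrically with the channel index (standard RoPE schedule).
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])
    return out


def rope_2d(vec, x, y, base=10000.0):
    """Axial 2-D RoPE (assumed variant): first half of channels encodes x, second half y."""
    d = len(vec)
    if d % 4 != 0:
        raise ValueError("channel count must be divisible by 4")
    half = d // 2
    return rope_1d(vec[:half], x, base) + rope_1d(vec[half:], y, base)


def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return sum(p * q for p, q in zip(a, b))


# Hypothetical query/key features for two word crops at different positions.
q = [0.5, -1.0, 2.0, 0.25, 1.5, -0.5, 0.0, 1.0]
k = [1.0, 0.5, -0.5, 2.0, 0.25, 1.0, -1.5, 0.75]

# Both pairs of positions have the same relative offset (dx=2, dy=5),
# so the rotated dot products agree up to floating-point error.
score_a = dot(rope_2d(q, 3, 7), rope_2d(k, 1, 2))
score_b = dot(rope_2d(q, 13, 27), rope_2d(k, 11, 22))
assert math.isclose(score_a, score_b, abs_tol=1e-9)
```

The property illustrated here is what makes rotary embeddings attractive for document layouts: the attention score between two tokens depends on their relative horizontal and vertical offset rather than on absolute page coordinates.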

Comparison with State-of-the-Art Approaches

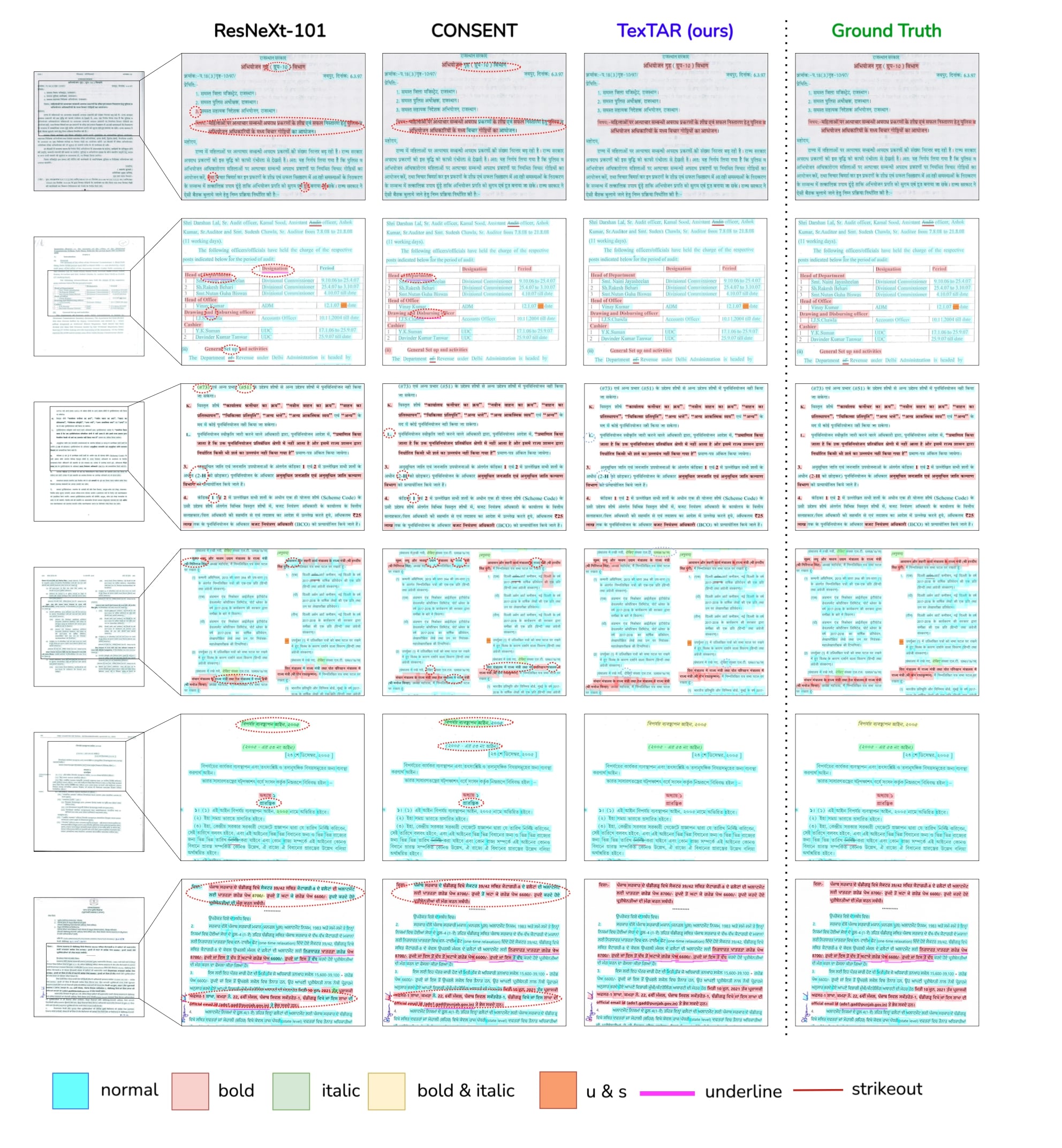

Visualization of results for a subset of baselines and variants in comparison with TexTAR

Download the Dataset and Weights

Model weights and the MMTAD test set can be downloaded from the link. To get access to the full dataset, please contact

Citation

@inproceedings{Kumar2025TexTAR,
  author    = {Rohan Kumar and Jyothi Swaroopa Jinka and Ravi Kiran Sarvadevabhatla},
  title     = {TexTAR: Textual Attribute Recognition in Multi-domain and Multi-lingual Document Images},
  booktitle = {International Conference on Document Analysis and Recognition, ICDAR},
  year      = {2025},
}

Acknowledgements

International Institute of Information Technology Hyderabad, India.

Contact