Improving Word Recognition using Multiple Hypotheses and Deep Embeddings

Siddhant Bansal, Praveen Krishnan, C. V. Jawahar

ICPR 2020

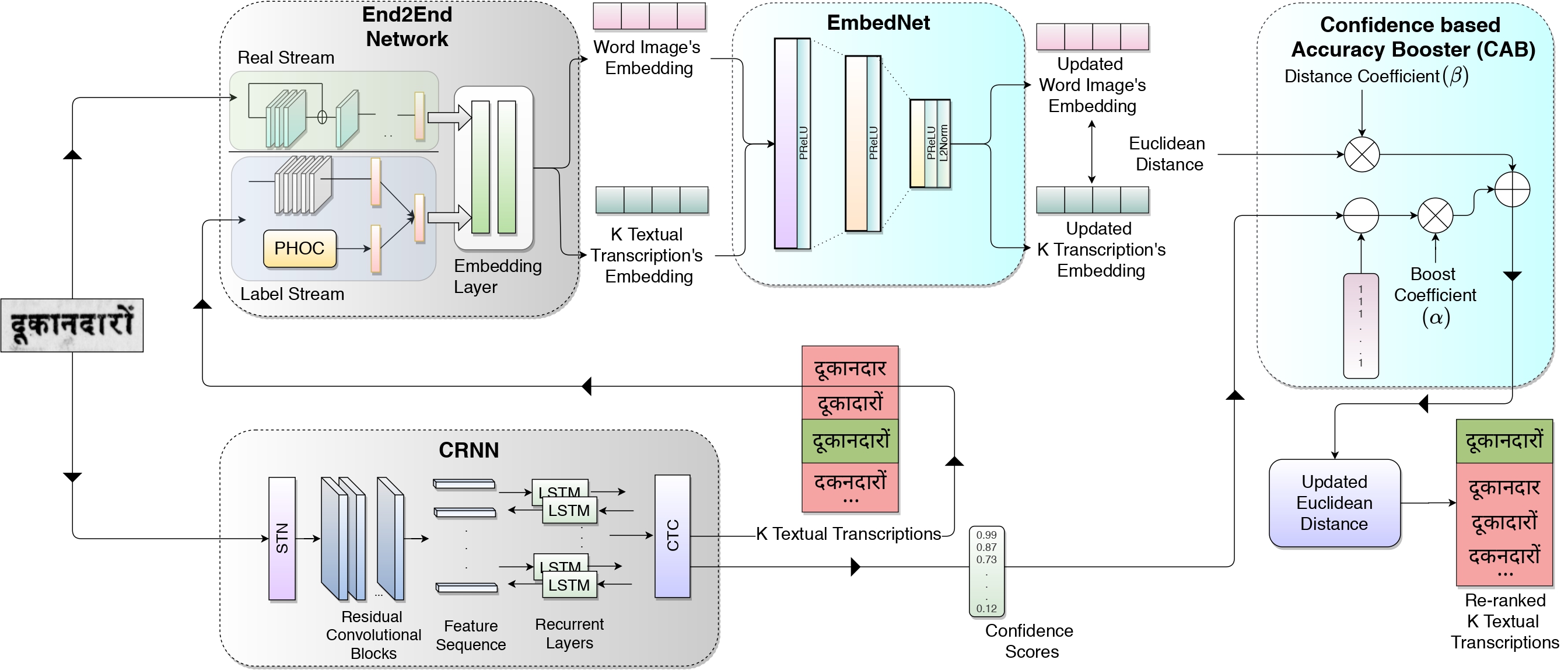

We propose to fuse recognition-based and recognition-free approaches to word recognition using learning-based methods. To this end, we fuse the results obtained from a text recognizer with deep embeddings generated by an End2End network. To improve the embeddings, we propose EmbedNet, which is trained with a triplet loss to learn an embedding space in which the embedding of a word image lies closer to the embedding of its corresponding text transcription. This updated embedding space helps in choosing the correct prediction with higher confidence. To further improve accuracy, we propose a plug-and-play module called the Confidence based Accuracy Booster (CAB). It takes the confidence scores obtained from the text recognizer and the Euclidean distances between the embeddings, and generates an updated distance vector with lower values for correct words and higher values for incorrect words. We evaluate the proposed method systematically on a collection of books written in Hindi. Our method achieves an absolute improvement of around 10% in word recognition accuracy.
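The triplet objective behind EmbedNet can be illustrated with the standard triplet margin loss, max(0, d(a, p) − d(a, n) + margin), where the anchor is the word image embedding, the positive is the embedding of its correct transcription, and the negative is the embedding of an incorrect transcription. The minimal sketch below spells out that arithmetic in plain Python under stated assumptions: the `margin` value of 1.0 is hypothetical (the paper's setting is not given here), and the function names are illustrative. In PyTorch the same idea is available as `torch.nn.TripletMarginLoss`.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin).

    anchor   -- word image embedding
    positive -- embedding of the image's correct transcription
    negative -- embedding of an incorrect transcription
    margin   -- hypothetical value, not taken from the paper
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    # Zero loss once the correct transcription is at least `margin`
    # closer to the image than the incorrect one.
    return max(0.0, d_pos - d_neg + margin)


# Toy 2-D embeddings: the correct transcription is already much closer.
image = [0.0, 0.0]
correct = [1.0, 0.0]
wrong = [3.0, 4.0]
print(triplet_loss(image, correct, wrong))  # 0.0 (constraint satisfied)
print(triplet_loss(image, wrong, correct))  # 5.0 (roles swapped, penalized)
```

Minimizing this loss over many (image, correct, incorrect) triplets is what pulls each word image embedding toward its own transcription's embedding and away from competing hypotheses.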

To generate the textual transcription, we pass the word image through the CRNN and the End2End (E2E) network simultaneously. The CRNN generates multiple (K) textual transcriptions for the input image, whereas the E2E network generates the word image's embedding. The K textual transcriptions generated by the CRNN are also passed through the E2E network to obtain their embeddings. We then pass all of these embeddings through the proposed EmbedNet, which projects them into an updated Euclidean space, yielding an updated word image embedding and updated embeddings for the K transcriptions. We calculate the Euclidean distance between the word image embedding and each of the K transcription embeddings. Finally, we pass these distances through the Confidence based Accuracy Booster (CAB), which combines them with the confidence scores from the CRNN to produce an updated list of Euclidean distances that helps in selecting the correct prediction.
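The selection step at the end of this pipeline can be sketched as follows, assuming the word image embedding and the K hypothesis embeddings have already been projected by EmbedNet and that the CRNN confidences lie in [0, 1]. The exact CAB formulation is not described on this page, so `cab_stand_in` is a purely hypothetical substitute (it scales each distance by `1 - confidence`) chosen only to show where CAB sits and how it can change the final choice. All function names and the toy values are illustrative.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cab_stand_in(distances, confidences):
    """HYPOTHETICAL stand-in for CAB, not the paper's formula.

    Shrinks the distance of hypotheses the recognizer is confident about,
    so the updated vector favours confident, nearby transcriptions.
    """
    return [d * (1.0 - c) for d, c in zip(distances, confidences)]


def pick_transcription(image_emb, hypotheses, hyp_embs, confidences):
    """Return the chosen hypothesis and the updated distance vector."""
    # Distance from the word image embedding to each of the K hypotheses.
    distances = [euclidean(image_emb, e) for e in hyp_embs]
    updated = cab_stand_in(distances, confidences)
    # The prediction with the smallest updated distance is selected.
    best = min(range(len(hypotheses)), key=lambda i: updated[i])
    return hypotheses[best], updated


# Toy example with K = 3 hypotheses (placeholder names, 2-D embeddings).
image_emb = [0.0, 0.0]
hypotheses = ["hyp_a", "hyp_b", "hyp_c"]
hyp_embs = [[3.0, 4.0], [0.0, 1.0], [6.0, 8.0]]  # raw distances 5, 1, 10
confidences = [0.9, 0.2, 0.1]

choice, updated = pick_transcription(image_emb, hypotheses, hyp_embs, confidences)
print(choice)  # hyp_a
```

With raw distances alone, `hyp_b` would be chosen; the confidence-aware update prefers `hyp_a` because the recognizer is far more confident about it. This only illustrates the data flow of distance fusion; the real CAB is a learned or designed module whose details are in the paper.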

Paper

- arXiv: PDF

- ICPR: Coming soon!

@misc{bansal2020improving,
  title={Improving Word Recognition using Multiple Hypotheses and Deep Embeddings},
  author={Siddhant Bansal and Praveen Krishnan and C. V. Jawahar},
  year={2020},
  eprint={2010.14411},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

Code

This work is implemented using the PyTorch deep learning framework. Code is available in this GitHub repository: .