3D Human body estimation

Reconstructing textured 3D models of human shapes from images is challenging because the geometry of non-rigid human bodies evolves over time, yielding a large space of complex body poses and shape variations. Further challenges include self-occlusion by body parts, obstruction by free-form clothing, background clutter (in a non-studio setup), a sparse set of cameras with non-overlapping fields of view, and sensor noise, as shown in the figure below.

Deep Textured 3D reconstruction of human bodies

Abstract:

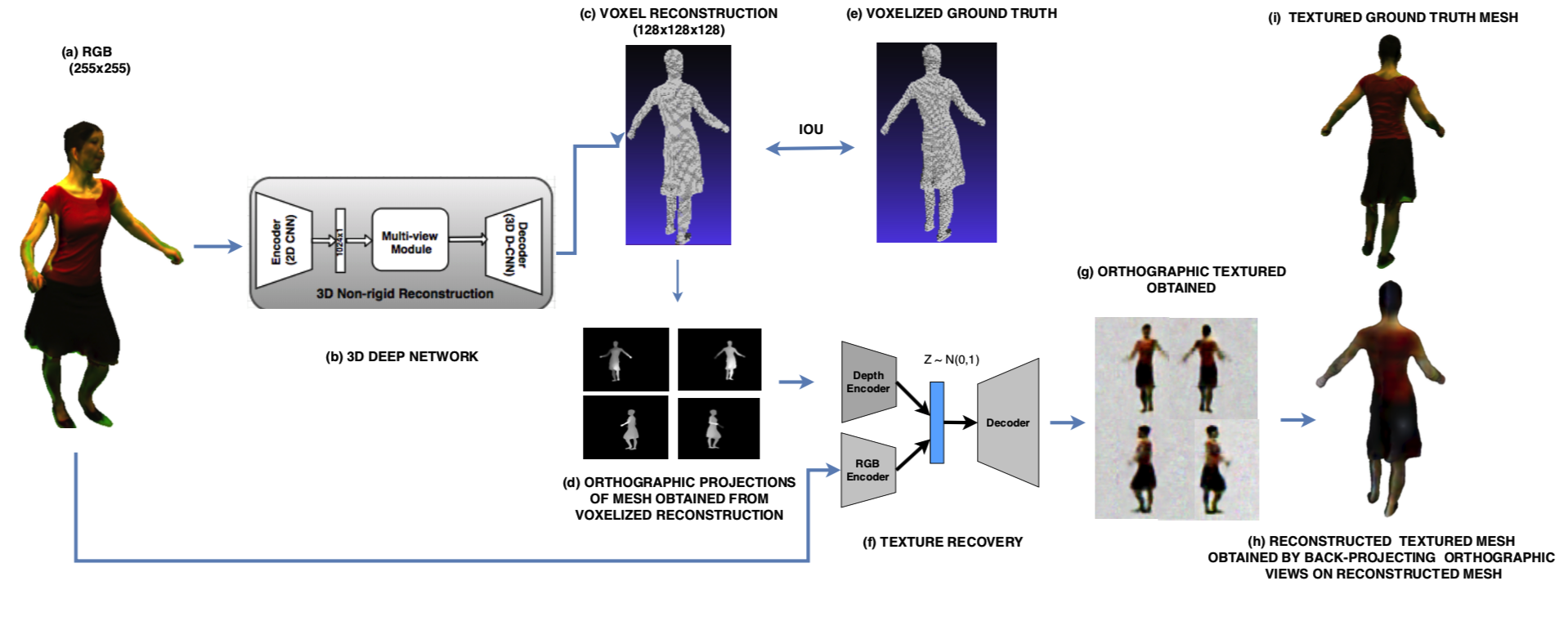

Recovering textured 3D models of non-rigid human body shapes is challenging due to self-occlusions caused by complex body poses and shapes, clothing obstructions, lack of surface texture, background clutter, a sparse set of cameras with non-overlapping fields of view, etc. Further, a calibration-free environment adds complexity to both reconstruction and texture recovery. In this paper, we propose a deep learning based solution for textured 3D reconstruction of human body shapes from a single-view RGB image. This is achieved by first recovering the volumetric grid of the non-rigid human body from a single-view RGB image, followed by orthographic texture view synthesis using the respective depth projection of the reconstructed (volumetric) shape and the input RGB image. We propose to co-learn the depth information readily available from affordable RGBD sensors (e.g., Kinect) while showing multiple views of the same object during the training phase. We show superior reconstruction performance, in terms of quantitative and qualitative results, on both publicly available datasets (by simulating the depth channel with a virtual Kinect) and real RGBD data collected with our calibrated multi-Kinect setup.

Method

In this work, we propose a deep learning based solution for textured 3D reconstruction of human body shapes given an input RGB image, in a calibration-free environment. Given a single-view RGB image, both reconstruction and texture generation are ill-posed problems. Thus, we propose to co-learn the depth cues (using depth images obtained from affordable sensors like Kinect) with RGB images while training the network. This helps the network learn the space of complex body poses, which is otherwise difficult with just the 2D content in RGB images. Although we train the reconstruction network with multi-view RGB and depth images (shown one at a time during training), co-learning them with shared filters enables us to recover 3D volumetric shapes using just a single RGB image at test time. Apart from the challenge of non-rigid poses, the depth information also helps address the challenges caused by cluttered backgrounds, shape variations and free-form clothing. Our texture recovery network uses a variational autoencoder to generate orthographic texture images of the reconstructed body models, which are subsequently backprojected to recover a textured 3D mesh model.
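
The depth projection of a reconstructed volumetric shape, which the texture stage takes as input, can be illustrated with a minimal NumPy sketch. It assumes a binary occupancy grid and a viewing direction aligned with one grid axis; the function name `orthographic_depth` and the `-1` background value are illustrative choices, not details taken from the paper.

```python
import numpy as np


def orthographic_depth(voxels: np.ndarray, axis: int = 2) -> np.ndarray:
    """Orthographic depth map of a binary occupancy grid.

    Each output pixel holds the index of the first occupied voxel met by a
    ray travelling along `axis`, or -1 if the ray meets no occupied voxel.
    (Hypothetical helper for illustration only.)
    """
    occupied = voxels.astype(bool)
    hit = occupied.any(axis=axis)        # does the ray hit the shape at all?
    first = occupied.argmax(axis=axis)   # index of the first True along the ray
    return np.where(hit, first, -1)


# Toy 4x4x4 grid with three occupied voxels.
grid = np.zeros((4, 4, 4), dtype=np.uint8)
grid[1, 1, 2] = 1
grid[1, 1, 3] = 1
grid[2, 0, 0] = 1
depth = orthographic_depth(grid, axis=2)
```

Here `depth` is a 4x4 image: the pixel at `(1, 1)` records the nearer of the two occupied voxels on its ray, and empty rays are marked as background.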

Contributions:

- We introduce a novel deep learning pipeline to obtain textured 3D models of non-rigid human body shapes from a single image. To the best of our knowledge, obtaining the reconstruction of non-rigid shapes in a volumetric form (whose advantages we demonstrate) has not yet been attempted in the literature. Further, this would be an initial effort in the direction of single-view non-rigid reconstruction and texture recovery in an end-to-end manner.

- We demonstrate the importance of depth cues (used only at training time) for the task of non-rigid reconstruction. This is achieved by our novel training methodology of alternating RGB and depth (D) inputs in order to capture the large space of pose and shape deformations.

- We show that our model can partially handle non-rigid deformations induced by free-form clothing, as we do not impose any model constraint while training the volumetric reconstruction network.

- We propose to use depth cues for texture recovery in the variational autoencoder setup. This is the first attempt to do so in the texture synthesis literature.
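
The alternating RGB and depth training mentioned in the second contribution might be scheduled roughly as in the sketch below. This is an assumption-laden illustration rather than the authors' implementation: the strict one-to-one alternation, the replication of the depth channel to three channels (so that both modalities fit the same shared filters), and the names `to_three_channels` and `alternating_views` are all hypothetical.

```python
import numpy as np


def to_three_channels(image: np.ndarray) -> np.ndarray:
    """Replicate a single-channel (H, W) depth image to (H, W, 3).

    Assumption: giving both modalities the same input shape is one simple
    way to let them pass through the same shared filters.
    """
    if image.ndim == 2:
        return np.repeat(image[..., None], 3, axis=-1)
    return image


def alternating_views(rgb_views, depth_views):
    """Yield (modality, image) pairs, alternating RGB and depth views.

    Views are shown one at a time, as in the training described above; the
    exact alternation order used in the paper is not specified here.
    """
    for rgb, depth in zip(rgb_views, depth_views):
        yield "rgb", to_three_channels(rgb)
        yield "depth", to_three_channels(depth)


# Two toy views of the same subject: RGB is (H, W, 3), depth is (H, W).
rgb_views = [np.zeros((4, 4, 3)), np.zeros((4, 4, 3))]
depth_views = [np.ones((4, 4)), np.ones((4, 4))]
schedule = list(alternating_views(rgb_views, depth_views))
modalities = [name for name, _ in schedule]
```

Each item in `schedule` would be fed to the same reconstruction network in turn; at test time only the RGB branch of this schedule is needed.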

Related Publication:

- Abhinav Venkat, Sai Sagar Jinka, Avinash Sharma - Deep Textured 3D reconstruction of human bodies, British Machine Vision Conference (BMVC2018). [pdf]