Content Based Image Retrieval - CBIR

FISH: A Practical System for Fast Interactive Image Search in Huge Databases

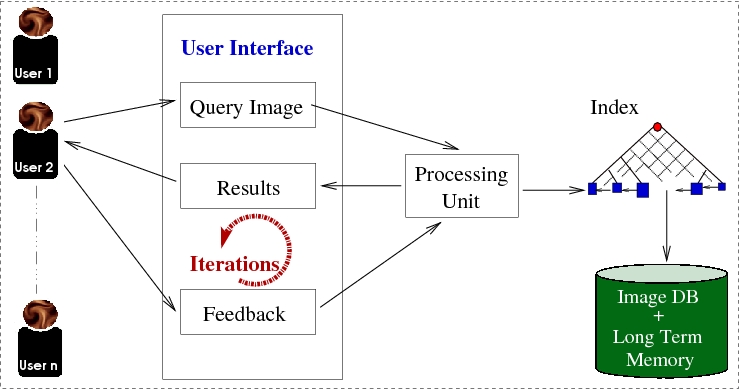

The problem of search and retrieval of images using relevance feedback has attracted tremendous attention in recent years from the research community. A real-world-deployable interactive image retrieval system must (1) be accurate, (2) require minimal user interaction, (3) be efficient, (4) be scalable to large collections (millions) of images, and (5) support multi-user sessions. For good accuracy, we need effective methods for learning the relevance of image features based on user feedback, both within a user session and across sessions. Efficiency and scalability require a good index structure for retrieving results. The index structure must allow the relevance of image features to change continually with fresh queries and user feedback. The state-of-the-art methods available today each address only a subset of these issues. In this paper, we build a complete system, FISH (Fast Image Search in Huge databases). In FISH, we integrate selected techniques available in the literature, while adding a few of our own. We perform extensive experiments on real datasets to demonstrate the accuracy, efficiency and scalability of FISH. Our results show that the system can easily scale to millions of images while maintaining interactive response time.
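To make the idea of learning feature relevance from user feedback concrete, here is a minimal sketch of one classic heuristic: weight each feature by the inverse of its standard deviation across the images the user marked relevant, then rank by weighted distance. This is an illustration under assumptions, not the method used in FISH; the function names, the `eps` parameter and the toy feature vectors are all hypothetical.

```python
import math


def feedback_weights(relevant, eps=1e-6):
    """Weight each feature by 1 / (std dev across relevant images).

    Features on which the relevant images agree get a high weight.
    Assumption: a classic reweighting heuristic, not FISH's own scheme.
    """
    n = len(relevant)
    dims = len(relevant[0])
    weights = []
    for d in range(dims):
        column = [vec[d] for vec in relevant]
        mean = sum(column) / n
        var = sum((x - mean) ** 2 for x in column) / n
        weights.append(1.0 / (math.sqrt(var) + eps))
    total = sum(weights)
    return [w / total for w in weights]  # normalize so weights sum to 1


def weighted_distance(a, b, weights):
    """Weighted Euclidean distance between two feature vectors."""
    return math.sqrt(sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)))


def rank(query, database, weights):
    """Return database indices ordered from nearest to farthest."""
    return sorted(
        range(len(database)),
        key=lambda i: weighted_distance(query, database[i], weights),
    )


# Toy example: the user marked two images that agree on feature 0 only.
relevant = [[0.1, 0.9], [0.1, 0.2]]
database = [[0.1, 0.0], [0.5, 0.9]]
query = [0.1, 0.9]
weights = feedback_weights(relevant)
ranking = rank(query, database, weights)
```

In this toy run, feedback shifts almost all of the weight onto feature 0, so the image that matches the query on that feature moves to the top of the ranking. A linear scan like `rank` would not scale to millions of images, which is why the abstract stresses an index structure that tolerates changing weights.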

Private Content Based Image Retrieval

For content-level access, a database very often needs the query to be given as a sample image. However, the image may contain private information, and the user may not wish to reveal it to the database. Private Content Based Image Retrieval (PCBIR) deals with retrieving similar images from an image database without revealing the content of the query image, not even to the database server. We propose algorithms for PCBIR when the database is indexed using a hierarchical index structure or a hash-based indexing scheme. Experiments are conducted on real datasets with popular features and state-of-the-art data structures. We observe that the specialty and subjectivity of image retrieval (unlike SQL queries to a relational database) enable computationally efficient yet private solutions.

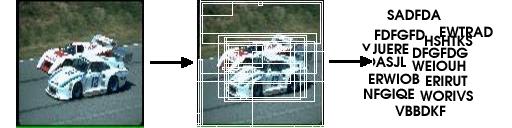

Virtual Textual Representation for Efficient Image Retrieval

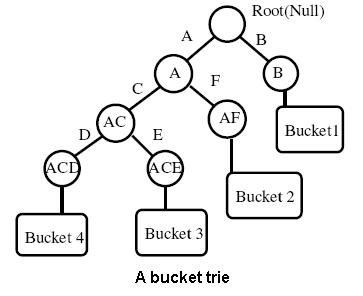

Efficient Region Based Indexing and Retrieval for Images with Elastic Bucket Tries

Retrieval and indexing in multimedia databases has been an active topic in both the Information Retrieval and computer vision communities for a long time. In this paper we propose a novel region-based indexing and retrieval scheme for images. First we present our virtual textual description, using which images are converted to text documents containing keywords. Then we look at how these documents can be indexed and retrieved using modified elastic bucket tries, and show that our approach is an order of magnitude better than standard spatial indexing approaches. We also show various operations required for dealing with complex features like relevance feedback. Finally, we analyze the method comparatively and validate our approach.
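The pipeline described above (region features turned into keywords, keywords indexed in a trie) can be sketched roughly as follows. This is a simplified illustration, not the paper's algorithm: it uses a plain character trie in place of the modified elastic bucket trie, and the quantization scheme, class name and function names are assumptions made for the example.

```python
from collections import Counter


def region_to_keyword(feature, levels=4):
    """Quantize each component (assumed in [0, 1]) into `levels` bins
    and spell the bin indices out as a keyword string."""
    return "".join(str(min(int(x * levels), levels - 1)) for x in feature)


class Trie:
    """Plain character trie; a stand-in for an elastic bucket trie."""

    def __init__(self):
        self.children = {}
        self.images = set()  # ids of images containing this keyword

    def insert(self, keyword, image_id):
        node = self
        for ch in keyword:
            node = node.children.setdefault(ch, Trie())
        node.images.add(image_id)

    def lookup(self, keyword):
        node = self
        for ch in keyword:
            node = node.children.get(ch)
            if node is None:
                return set()
        return node.images


def index_images(images):
    """Build a trie from {image_id: [region feature vectors]}."""
    trie = Trie()
    for image_id, regions in images.items():
        for feature in regions:
            trie.insert(region_to_keyword(feature), image_id)
    return trie


def search(trie, query_regions):
    """Rank images by how many query-region keywords they share."""
    votes = Counter()
    for feature in query_regions:
        for image_id in trie.lookup(region_to_keyword(feature)):
            votes[image_id] += 1
    return [image_id for image_id, _ in votes.most_common()]


# Toy data: each image is a list of mean-colour vectors, one per region.
images = {
    "a": [(0.1, 0.1, 0.9), (0.8, 0.8, 0.1)],
    "b": [(0.1, 0.1, 0.9), (0.5, 0.5, 0.5)],
}
trie = index_images(images)
results = search(trie, [(0.12, 0.05, 0.95), (0.85, 0.8, 0.05)])
```

Each lookup costs time proportional to the keyword length rather than the collection size, which is the general appeal of treating quantized regions as text.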

A Rule-based Approach to Image Retrieval

Imagine a world in which computers could comprehend our verbal descriptions of real-world scenes and present us with possible pictures of our thoughts. This proved motivation enough for a team from CVIT to explore an image retrieval system that takes a natural language description of what the user is looking for, processes it, matches it closely against the images in the database, and presents the user with a select set of retrieved results. A sample query could be: "reddish orange upper edge and bright yellowish centre". The system is rule-based: rules describe the image content.
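As a rough illustration of how such a query might be turned into rules, the sketch below parses the sample query into (region, colour, brightness) rules and checks them against per-region image summaries. Everything here is hypothetical: the vocabulary, the hue ranges, the brightness threshold and the image representation are assumptions for the example, not the rule set used by the actual system.

```python
# Hypothetical vocabulary: colour phrase -> hue range in degrees.
HUES = {
    "reddish orange": (10, 30),
    "yellowish": (45, 70),
    "bluish": (200, 250),
}
# Hypothetical region names the parser recognizes.
REGIONS = ("upper edge", "lower edge", "centre")
BRIGHT_THRESHOLD = 0.7  # assumed cut-off on a 0..1 brightness scale


def parse(query):
    """Split a query on 'and' into (region, colour, must_be_bright) rules."""
    rules = []
    for clause in query.lower().split(" and "):
        clause = clause.strip()
        region = next((r for r in REGIONS if clause.endswith(r)), None)
        if region is None:
            raise ValueError(f"no known region in clause: {clause!r}")
        description = clause[: -len(region)].strip()
        bright = description.startswith("bright ")
        colour = description[len("bright "):] if bright else description
        if colour not in HUES:
            raise ValueError(f"unknown colour phrase: {colour!r}")
        rules.append((region, colour, bright))
    return rules


def matches(image, rules):
    """Check an image, given as {region: (mean_hue, mean_brightness)}."""
    for region, colour, bright in rules:
        hue, brightness = image[region]
        low, high = HUES[colour]
        if not low <= hue <= high:
            return False
        if bright and brightness < BRIGHT_THRESHOLD:
            return False
    return True


rules = parse("reddish orange upper edge and bright yellowish centre")
sunset = {"upper edge": (20, 0.6), "centre": (55, 0.9)}
sky = {"upper edge": (210, 0.8), "centre": (55, 0.9)}
```

Here `matches(sunset, rules)` holds while `matches(sky, rules)` does not, because the sky image's upper edge falls outside the assumed hue range for "reddish orange".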

Related Publications

- Dhaval Mehta, E. S. V. N. L. S. Diwakar, and C. V. Jawahar, A Rule-based Approach to Image Retrieval, Proceedings of the IEEE Region 10 Conference on Convergent Technologies (TENCON), Oct. 2003, Bangalore, India, pp. 586--590. [PDF]

- Suman Karthik and C. V. Jawahar, Analysis of Relevance Feedback in Content Based Image Retrieval, Proceedings of the 9th International Conference on Control, Automation, Robotics and Vision (ICARCV), 2006, Singapore. [PDF]

- Suman Karthik and C. V. Jawahar, Virtual Textual Representation for Efficient Image Retrieval, Proceedings of the 3rd International Conference on Visual Information Engineering (VIE), 26-28 September 2006, Bangalore, India. [PDF]

- Suman Karthik and C. V. Jawahar, Efficient Region Based Indexing and Retrieval for Images with Elastic Bucket Tries, Proceedings of the International Conference on Pattern Recognition (ICPR), 2006. [PDF]

Associated People

- Dr. C. V. Jawahar

- Pradhee Tandon

- Pramod Sankar

- Praveen Dasigi

- Piyush Nigam

- P. Suman Karthik

- Natraj J.

- Saurabh K. Pandey

- Dhaval Mehta

- E. S. V. N. L. S. Diwakar