DGAZE Dataset for driver gaze mapping on road

Isha Dua (Mercedes Benz), Thrupthi Ann John (IIIT Hyderabad), Riya Gupta (IIIT Hyderabad), C. V. Jawahar (IIIT Hyderabad)

IROS 2020

Dataset Overview

[ paper ]

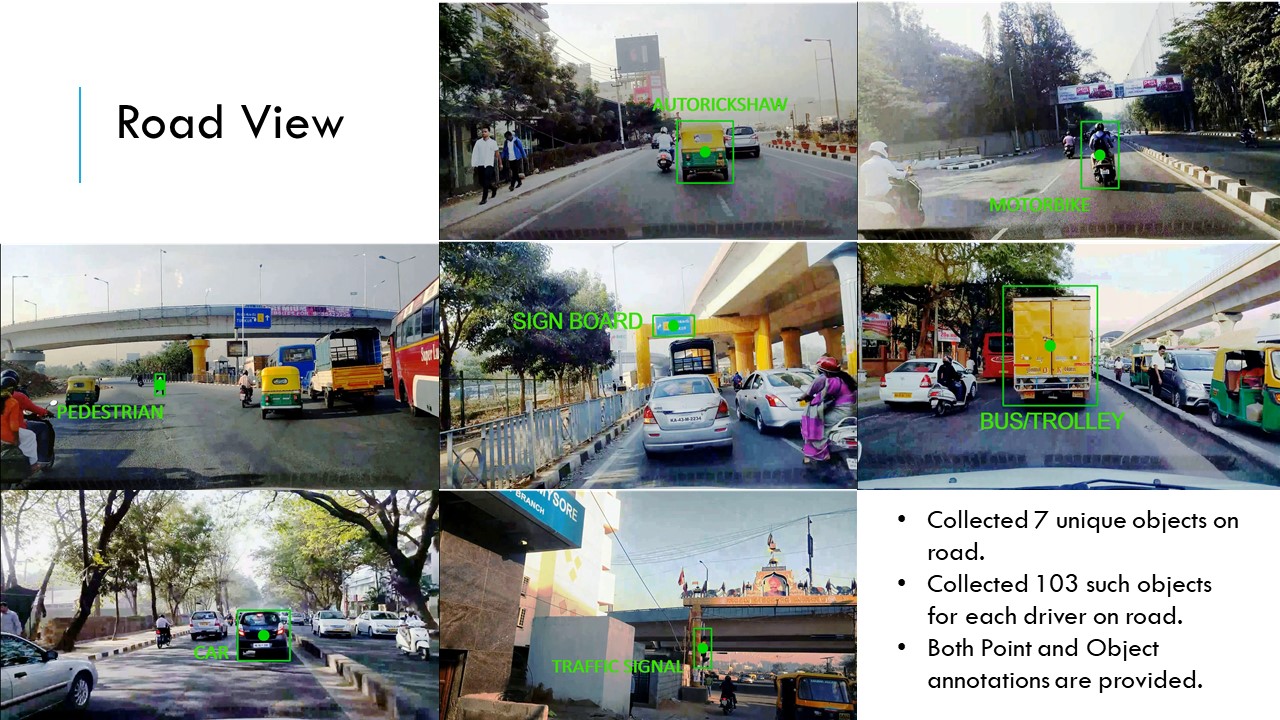

We collected road videos using mobile phones mounted on the dashboards of cars driven in the city. We combined these videos into a single 18-minute video with a good mix of road, lighting, and traffic conditions. The road images have varied illumination because they were captured from morning to evening in real cars on actual roads. For each frame, we annotated a single object belonging to one of the following classes: car, bus, motorbike, pedestrian, auto-rickshaw, traffic signal, and sign board. We annotated objects that typically capture a driver's attention, such as relevant signage, pedestrians, and intercepting vehicles. We also marked the center of each bounding box to serve as the ground truth for the point detection challenge.
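The annotation scheme described above (one labelled box per frame, with the box center as the point target) can be sketched as follows. This is a minimal, hypothetical illustration: the `FrameAnnotation` record, the `(x_min, y_min, x_max, y_max)` pixel box format, the class-name spellings, and the mean Euclidean pixel error used for scoring are all assumptions, not the dataset's actual file format or official evaluation protocol.

```python
import math
from dataclasses import dataclass

# Class names from the dataset description; the exact string spellings are assumed.
CLASSES = ("car", "bus", "motorbike", "pedestrian", "auto-rickshaw",
           "traffic signal", "sign board")


@dataclass(frozen=True)
class FrameAnnotation:
    """Hypothetical per-frame record: one labelled box in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def __post_init__(self) -> None:
        # Reject labels outside the listed classes and boxes with swapped corners.
        if self.label not in CLASSES:
            raise ValueError(f"unknown class: {self.label!r}")
        if self.x_max < self.x_min or self.y_max < self.y_min:
            raise ValueError("box corners are out of order")

    def center(self) -> tuple[float, float]:
        """Box center, used as the ground-truth point for point detection."""
        return ((self.x_min + self.x_max) / 2.0, (self.y_min + self.y_max) / 2.0)


def point_error(pred: tuple[float, float], ann: FrameAnnotation) -> float:
    """Euclidean distance in pixels from a predicted gaze point to the box center."""
    cx, cy = ann.center()
    return math.hypot(pred[0] - cx, pred[1] - cy)


def mean_point_error(preds: list[tuple[float, float]],
                     anns: list[FrameAnnotation]) -> float:
    """Average point error over frames (an assumed metric, for illustration only)."""
    if not preds or len(preds) != len(anns):
        raise ValueError("need one prediction per annotated frame")
    return sum(point_error(p, a) for p, a in zip(preds, anns)) / len(preds)


if __name__ == "__main__":
    ann = FrameAnnotation("car", 100, 200, 300, 400)
    print(ann.center())                     # (200.0, 300.0)
    print(point_error((203, 304), ann))     # 5.0
```

Using the box center as a single target point reduces the task to regressing one (x, y) location per frame, so a simple pixel distance is a natural way to compare predictions against the annotation.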

Use Cases

Citation

All documents and papers that report research results obtained using the DGAZE dataset should cite the following paper:

Citation: Isha Dua, Thrupthi Ann John, Riya Gupta, and C. V. Jawahar. DGAZE: Driver Gaze Mapping on Road. In IROS 2020.

Bibtex

@inproceedings{isha2020iros,
  title={DGAZE: Driver Gaze Mapping on Road},
  author={Dua, Isha and John, Thrupthi Ann and Gupta, Riya and Jawahar, CV},
  booktitle={2020 IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2020},
  year={2020}
}

Download Dataset

The DGAZE dataset is available free of charge for research purposes only. Please use the link below to download the dataset.

Disclaimer: While every effort has been made to ensure accuracy, the owners of the DGAZE dataset cannot accept responsibility for errors or omissions.

[ Download Dataset ]

Acknowledgements

We would like to thank Akshay Uttama Nambi and Venkat Padmanabhan from Microsoft Research for providing us with resources to collect the DGAZE dataset. This work is partly supported by DST through the IMPRINT program. Thrupthi Ann John is supported by a Visvesvaraya Ph.D. fellowship.

Contact

For any queries about the dataset, please contact the authors below:

Isha Dua:

Thrupthi Ann John: