ETL: Efficient Transfer Learning for Face Tasks

Thrupthi Ann John[1], Isha Dua[1], Vineeth N Balasubramanian[2] and C. V. Jawahar[1]

IIIT Hyderabad[1], IIT Hyderabad[2]


Abstract

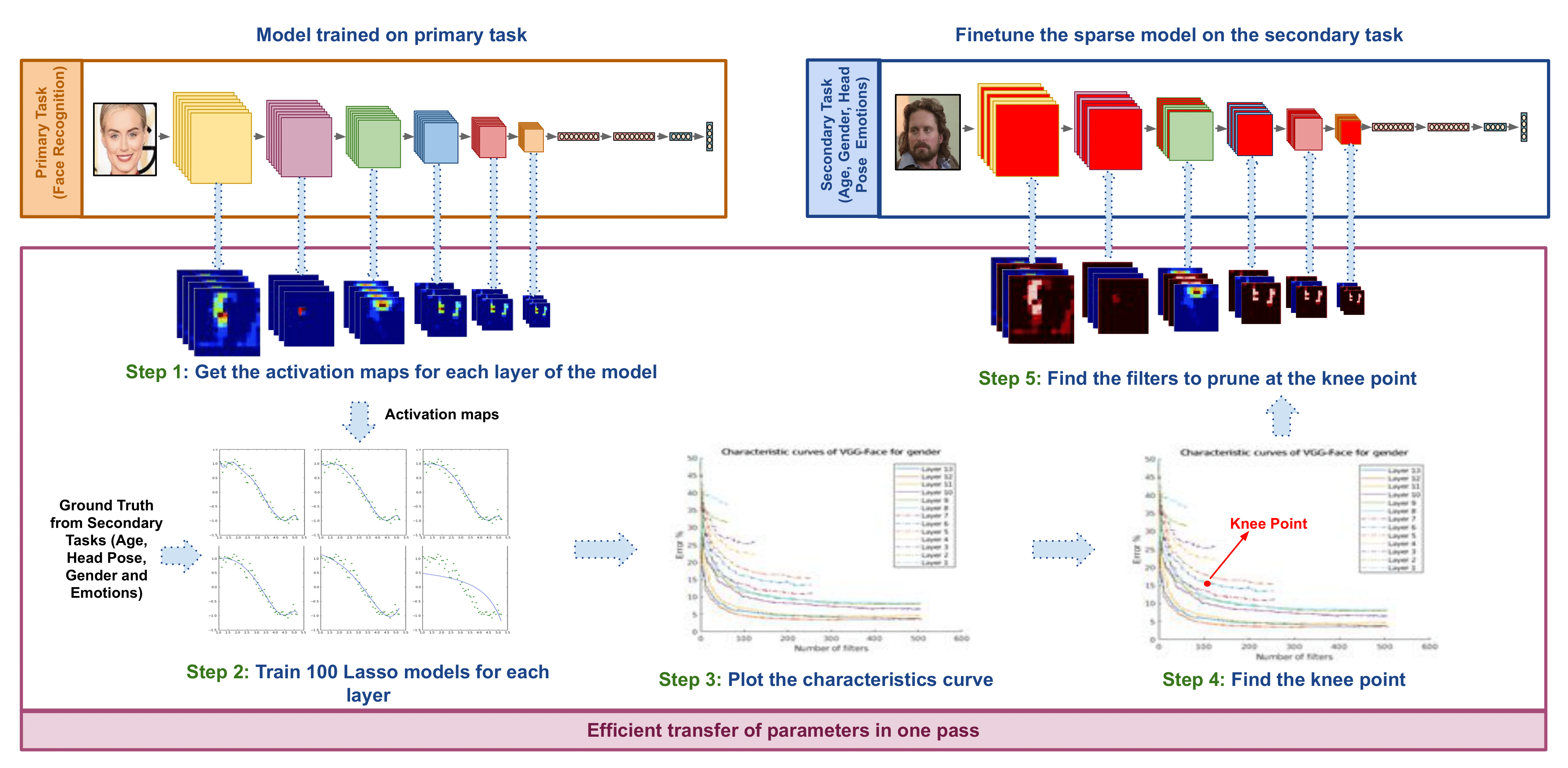

Transfer learning is a popular method for obtaining deep trained models for data-scarce face tasks such as head pose estimation and emotion recognition. However, current transfer learning methods are inefficient and time-consuming because they do not fully account for the relationships between related tasks. Moreover, the transferred model is large and computationally expensive. As an alternative, we propose ETL: a technique that efficiently transfers a pre-trained model to a new task by retaining only cross-task aware filters, resulting in a sparse transferred model. We demonstrate the effectiveness of ETL by transferring VGGFace, a popular face recognition model, to four diverse face tasks. Our experiments show that ETL achieves a model size reduction of up to 97% and an inference time reduction of up to 94% while retaining 99.5% of the baseline transfer learning accuracy.
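The abstract does not spell out how cross-task aware filters are chosen, so the sketch below makes no attempt to reproduce ETL's selection criterion. It only illustrates, under the assumption of a plain sequential stack of convolutions, why retaining a subset of filters shrinks a model so sharply: when a layer keeps only some of its output filters, the next layer loses the matching input channels as well. The per-filter scores, the helper names (`ConvLayer`, `select_filters`, `prune_chain`, `total_params`) and the layer shapes (the first three convolutions of a VGG-16-style network) are hypothetical illustrations, not the authors' code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConvLayer:
    """Shape of a 2-D convolution: out_channels filters, each in_channels x k x k."""
    in_channels: int
    out_channels: int
    kernel_size: int

    def num_params(self) -> int:
        # Weights of every filter plus one bias per filter.
        return self.out_channels * (self.in_channels * self.kernel_size ** 2 + 1)


def select_filters(scores: List[float], keep: int) -> List[int]:
    """Return the indices of the `keep` highest-scoring filters, in ascending order.

    The scores are a hypothetical stand-in for a filter-relevance criterion;
    ETL's actual notion of cross-task awareness is not reproduced here.
    """
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:keep])


def prune_chain(layers: List[ConvLayer], kept_per_layer: List[int]) -> List[ConvLayer]:
    """Shrink a sequential conv stack to the given number of kept filters per layer.

    Dropping output filters of layer i also drops the matching input channels
    of layer i + 1. The kept counts could come from len(select_filters(...)).
    """
    pruned = []
    in_ch = layers[0].in_channels  # input image channels are never pruned
    for layer, kept in zip(layers, kept_per_layer):
        pruned.append(ConvLayer(in_ch, kept, layer.kernel_size))
        in_ch = kept
    return pruned


def total_params(layers: List[ConvLayer]) -> int:
    return sum(layer.num_params() for layer in layers)


if __name__ == "__main__":
    # Hypothetical scores for a 4-filter layer: keep the two strongest filters.
    print(select_filters([0.1, 0.9, 0.4, 0.7], keep=2))

    base = [ConvLayer(3, 64, 3), ConvLayer(64, 64, 3), ConvLayer(64, 128, 3)]
    small = prune_chain(base, [16, 16, 32])  # keep a quarter of each layer's filters
    before, after = total_params(base), total_params(small)
    print(f"{before} -> {after} parameters ({1 - after / before:.1%} fewer)")
```

In this toy setting, keeping a quarter of the filters in every layer removes about 93% of the parameters, because interior layers shrink in both their input and output dimensions. Pooling, fully connected layers, inference-time measurement and the actual filter-selection rule are deliberately left out.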


Related Publications

ETL: Efficient Transfer Learning for Face Tasks

Thrupthi Ann John, Isha Dua, Vineeth N Balasubramanian and C. V. Jawahar

ETL: Efficient Transfer Learning for Face Tasks, 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, 2022.

Contact

For any queries about this work, please contact the authors:

- Thrupthi Ann John: thrupthi [dot] ann [at] research [dot] iiit [dot] ac [dot] in

- Isha Dua: duaisha1994 [at] gmail [dot] com