PSUMNet: Unified Modality Part Streams are All You Need for Efficient Pose-based Action Recognition

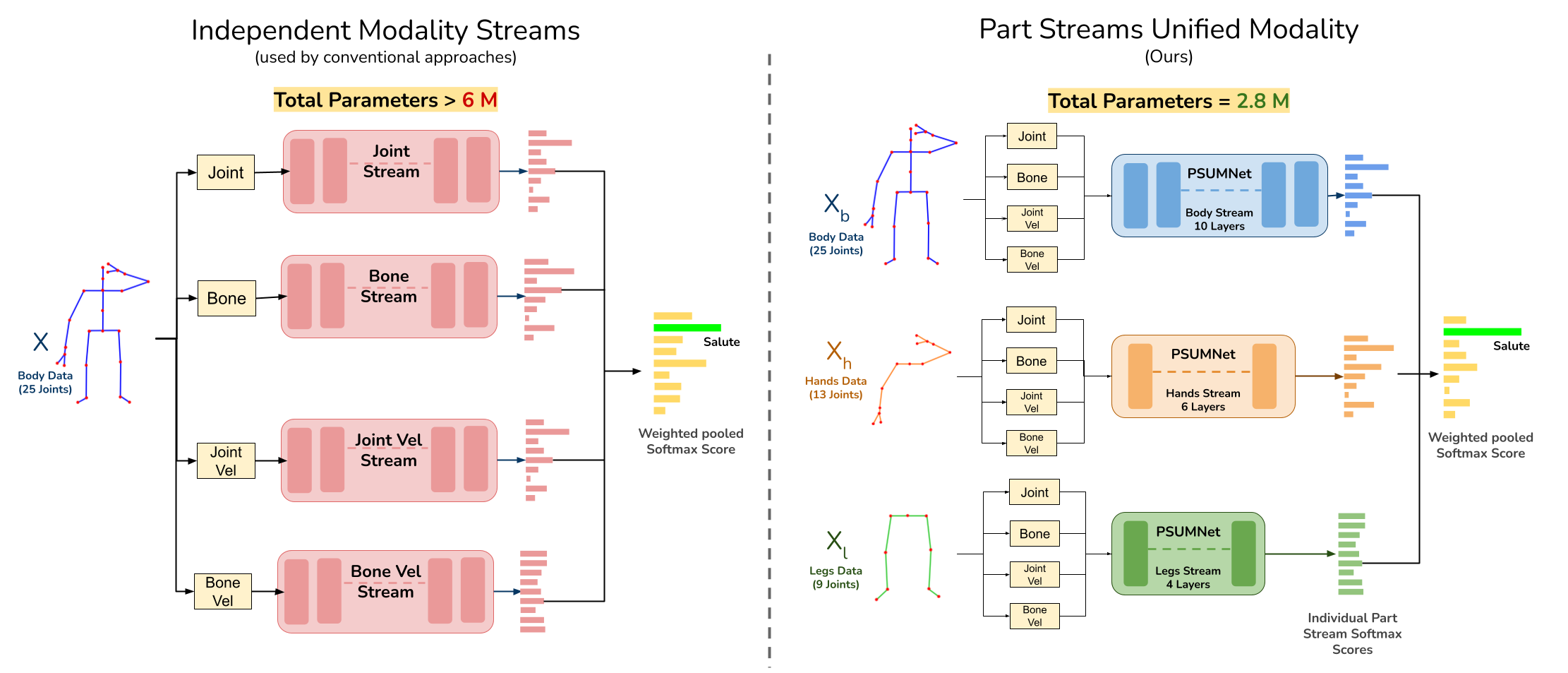

Pose-based action recognition is predominantly tackled by approaches which treat the input skeleton in a monolithic fashion, i.e. joints in the pose tree are processed as a whole. However, such approaches ignore the fact that action categories are often characterized by localized action dynamics involving only small subsets of part joint groups involving hands (e.g. ‘Thumbs up’) or legs (e.g. ‘Kicking’). Although part-grouping based approaches exist, each part group is not considered within the global pose frame, causing such methods to fall short. Further, conventional approaches employ independent modality streams (e.g. joint, bone, joint velocity, bone velocity) and train their network multiple times on these streams, which massively increases the number of training parameters

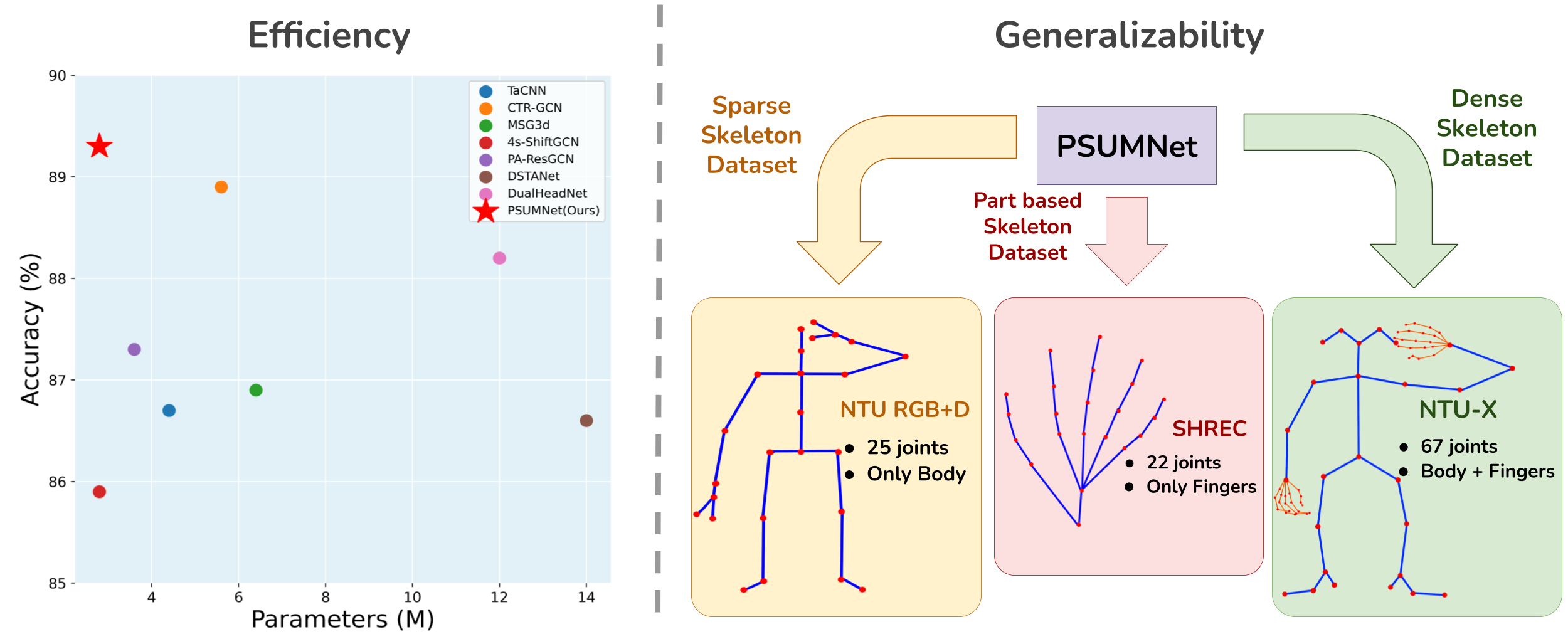

The plot on left shows accuracy against # parameters for our proposed architecture PSUMNet (⋆) and existing approaches for the large-scale NTURGB+D 120 human actions dataset (cross subject).

Comparison between conventional training procedure used in most of the previous approaches (left) and our approach (right).

To address these issues, we introduce PSUMNet, a novel approach for scalable and efficient pose-based action recognition. At the representation level, we propose a global frame based part stream approach as opposed to conventional modality based streams. Within each part stream, the associated data from multiple modalities is unified and consumed by the processing pipeline. Experimentally, PSUMNet achieves state of the art performance on the widely used NTURGB+D 60/120 dataset and dense joint skeleton dataset NTU 60-X/120-X. PSUMNet is highly efficient and outperforms competing methods which use 100%-400% more parameters. PSUMNet also generalizes to the SHREC hand gesture dataset with competitive performance. Overall, PSUMNet’s scalability, performance and efficiency makes it an attractive choice for action recognition and for deployment on computerestricted embedded and edge devices.

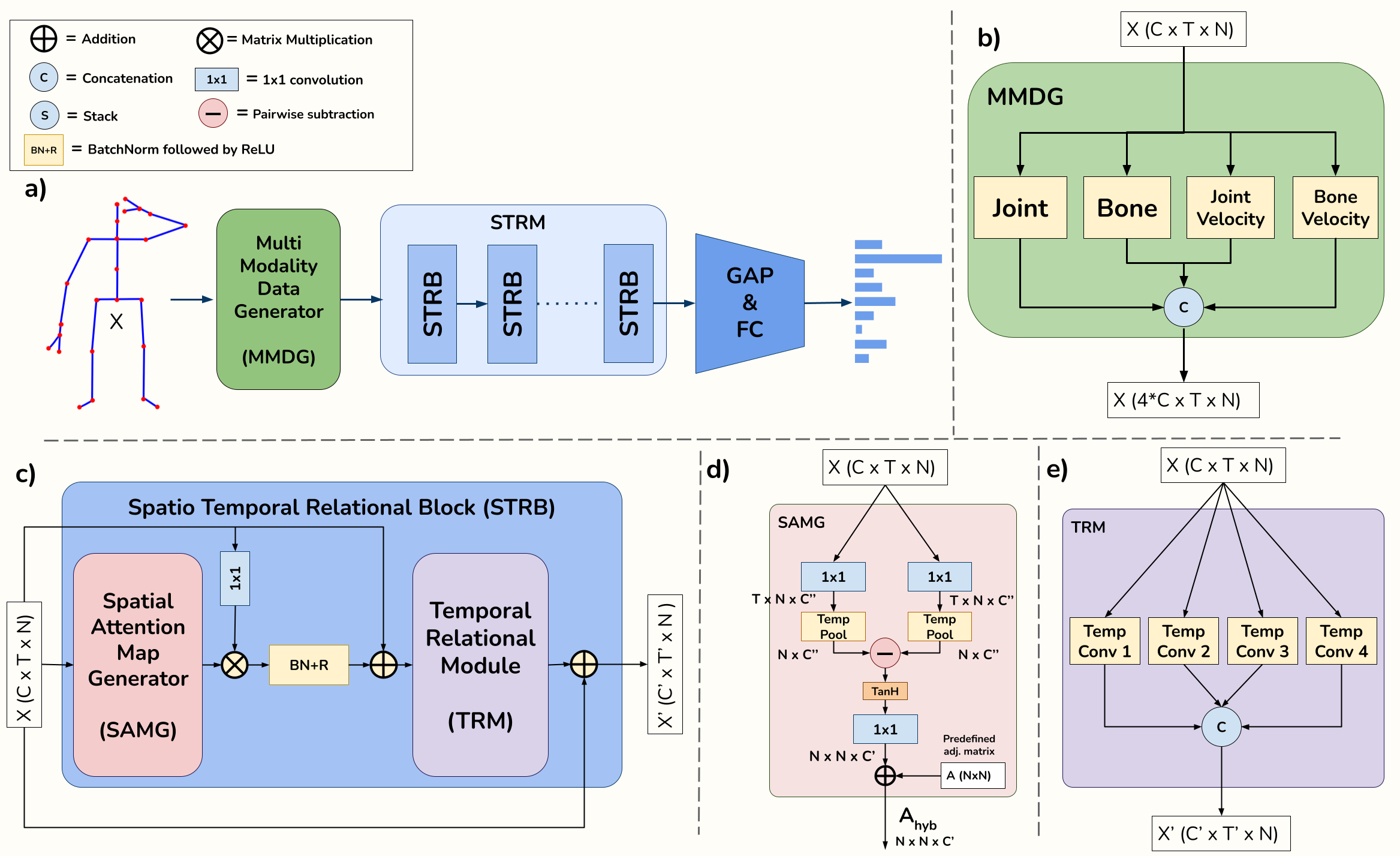

- Overall Architecture of one stream of the proposed architecture. The input skeleton is passed through Multi modality data generator (MMDG), which generates joint, bone, joint velocity and bone velocity data from input and concatenates each modality data into channel dimension as shown in (b).

- This multi-modal data is processed via Spatio Temporal Relational Module (STRM) followed by global average pooling and FC.

- Spatio Temporal Relational Block (STRB), where input data is passed through Spatial Attention Map Generator (SAMG) for spatial relation modeling, followed by Temporal Relational Module. As shown in (a) multiple STRB stacked together make the STRM.

- Spatial Attention Map Generator (SAMG), dynamically models adjacency matrix (Ahyb)to model spatial relations between joints. Predefined adjacency matrix (A) is used for regularization.

- Temporal Relational Module (TRM) consists of multiple temporal convolution blocks in parallel. Output of each temporal convolution block is concatenated to generate final features.