Kernel Methods and Factorization for Image and Video Analysis

Ranjeeth Kumar Dasineni (homepage)

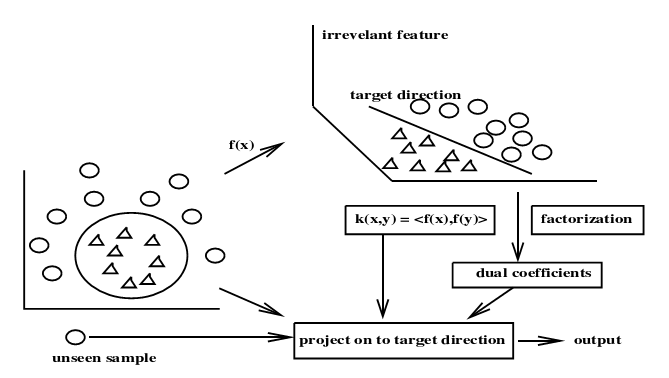

Image and Video Analysis is one of the most active research areas in computer science with a large number of applications in security, surveillance, broadcast video processing etc. Prior to the past two decades, the primary focus in this domain was on efficient processing of image and video data. However, with the increase in computational power and advancements in Machine Learning, the focus has shifted to a wide range of other problems. Machine learning techniques have been widely used to perform higher level tasks such as recognizing faces from images, facial expression analysis in videos, printed document recognition and video understanding which require extensive analysis of data. The field of Machine Learning itself, witnessed the evolution of Kernel Methods as a principled and efficient approach to analyze nonlinear relationships in the data. The new algorithms are computationally efficient and statistically stable. This is in stark contrast with the previous methods used for nonlinear problems, such as neural networks and decision trees, which often suffered from overfitting and computational expense. In addition, kernel methods provide a natural way to treat heterogeneous data (like categorical data, graphs and sequences) under a unified framework. These advantages led to their immense popularity in many fields, such as computer vision, data mining and bioinformatics. In computer vision, the use of kernel methods such as support vector machine, kernel principal component analysis and kernel discriminant analysis resulted in remarkable improvements in performance at tasks such as classification, recognition and feature extraction. Like Kernel Methods, Factorization techniques enabled elegant solutions to many problems in computer vision such as eliminating redundancy in representation of data and analysis of their generative processes. Structure from Motion and Eigen Faces for feature extraction are examples of successful applications of factorization in vision. However, factorization, so far, has been used on the traditional matrix representation of image collection and videos. This representation fails to completely exploit the structure in 2D images as each image is represented using a single 1D vector. Tensors are more natural representations for such data and recently gained wide attention in computer vision. Factorization becomes an even more useful tool with such representations.

While both Kernel Methods and Factorization both aid in analysis of the data and detection of inherent regularities, they do so in orthogonal manner. The central idea in kernel methods is to work with new sets of features derived from the input set of features. Factorization, on the other hand, operates by eliminating redundant or irrelevant information. Thus, they form a complementary set of tools to analyze data. This thesis addresses the problem of effective manipulation of dimensionality of representation of visual data, using these tools, for solving problems in image analysis. The purpose of this thesis is three fold: i) Demonstrating useful applications of kernel methods to problems in image analysis. New kernel algorithms are developed for feature selection and time series modeling. These are used for biometric authentication using weak features, planar shape recognition and handwritten character recognition. ii) Using the tensor representation and factorization of tensors to solve challenging problems in facial video analysis. These are used to develop simple and efficient methods to perform expression transfer, expression recognition and face morphing. iii) Investigating and demonstrating the complementary nature of Kernelization and Factorization and how they can be used together for analysis of the data. (more...)

| Year of completion: | December 2007 |

| Advisor : |

Related Publications

Ranjeeth Kumar and C.V. Jawahar - Kernel Approach to Autoregressive Modeling Proc. of The Thirteen National Conference on Communications(NCC 2007), Kanpur, 2007 . [PDF]

Ranjeeth Kumar and C.V. Jawahar - Class-Specific Kernel Selection for Verification Problems Proc. of The Six International Conference on Advances in Pattern Recognition(ICAPR 2007), Kolkatta, 2007. [PDF]

S. Manikandan, Ranjeeth Kumar and C.V. Jawahar - Tensorial Factorization Methods for Manipulation of Face Videos, The 3rd International Conference on Visual Information Engineering 26-28 September 2006 in Bangalore, India. [PDF]

Ranjeeth Kumar, S. Manikandan and C. V. Jawahar - Task Specific Factors for Video Characterization, 5th Indian Conference on Computer Vision, Graphics and Image Processing, Madurai, India, LNCS 4338 pp.376-387, 2006. [PDF]

- Ranjeeth Kumar, S. Manikandan and C. V. Jawahar - Face Video Alteration Using Tensorial Methods, in Pattern Recognition, Journal of Pattern Recognition Society (Submitted)

Downloads

![]()