RemoteVIS: A Remote Rendering System

Soumyajit Deb

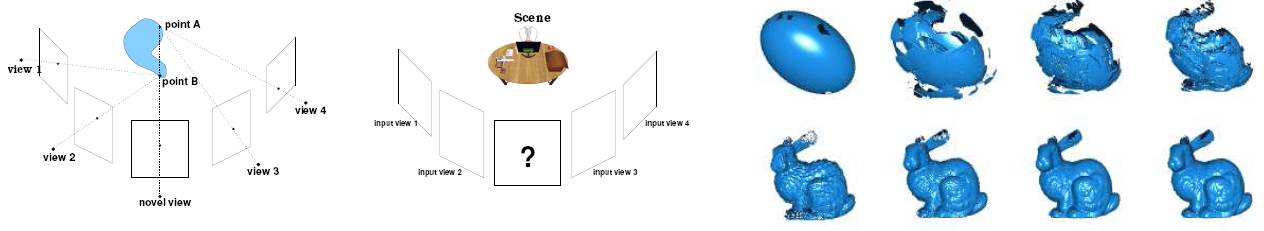

Large graphics models need a large amount of memory to store and high-end graphics capabilities to render. It is therefore useful to store such virtual models on a server and stream them to a remote client on demand for visualization. In many dynamic environments, this is the only option available. We present the design and implementation of RemoteVIS, a system to perform remote rendering. The goal of our system is to provide the best visualization quality given the capabilities and connection parameters of the client. The system is designed to adapt to a wide range of clients and connection speeds, and it chooses a suitable streaming representation for each situation. This can range from a geometry-based representation combining levels of detail and visibility culling to an image-based representation for clients with low capability. The choice takes into account the client's graphics and storage capabilities, the speed of viewer motion, and the network bandwidth and latency. The client uses path prediction to acquire model data sufficient for an interval in the future, so that rendering neither freezes nor jerks.

In a remotely rendered walkthrough, the virtual environment, including all models, textures and other data, is stored on the server side. The client receives only the parts of the virtual environment it is currently viewing, without having to download the entire environment. One of the major bottlenecks of the system is the network bandwidth between the server and the client, so the system optimizes rendering quality and mesh detail based on this bandwidth. The geometry-based renderer uses Object Based Visible Space Model Representation algorithms to select the visible geometry to be transmitted to the client, which automatically culls hidden and invisible objects in the scene. On the client side, View Frustum Culling is used to render only the polygons that fall within the viewer's field of view.
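
The abstract states that the client prefetches model data for an interval in the future using path prediction, but it does not say which predictor is used. The sketch below assumes the simplest option, constant-velocity (linear) extrapolation from the last two sampled viewer positions, and then maps the predicted positions onto a uniform grid of cells the client could request ahead of time. The functions `predict_path` and `cells_to_prefetch`, and the uniform grid itself, are hypothetical illustrations rather than the RemoteVIS implementation.

```python
import math
from typing import List, Set, Tuple

Vec3 = Tuple[float, float, float]


def predict_path(prev: Vec3, curr: Vec3, dt: float,
                 lookahead: float, step: float) -> List[Vec3]:
    """Extrapolate future viewer positions assuming constant velocity.

    prev, curr: viewer positions sampled dt seconds apart.
    lookahead:  how far ahead (seconds) to predict.
    step:       time between predicted samples (seconds).
    """
    if dt <= 0 or step <= 0:
        raise ValueError("dt and step must be positive")
    # Velocity estimated from the two most recent samples.
    velocity = tuple((c - p) / dt for p, c in zip(prev, curr))
    samples = int(round(lookahead / step))
    return [tuple(c + v * (i * step) for c, v in zip(curr, velocity))
            for i in range(1, samples + 1)]


def cells_to_prefetch(path: List[Vec3], cell_size: float) -> List[Tuple[int, ...]]:
    """Map predicted positions to (hypothetical) uniform grid cells to request."""
    cells: Set[Tuple[int, ...]] = set()
    for p in path:
        cells.add(tuple(math.floor(x / cell_size) for x in p))
    return sorted(cells)
```

A viewer moving one unit per second along x, predicted two seconds ahead, would request the cells covering x = 2 and x = 3. A real predictor might smooth velocity over more samples or account for turning, at the cost of extra latency in reacting to sudden changes.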
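
Client-side View Frustum Culling is mentioned but not detailed. A common way to do it, shown here as an assumption rather than as the RemoteVIS code, is to extract the six frustum planes from the view-projection matrix (the Gribb/Hartmann method, assuming column vectors and an OpenGL-style clip volume) and reject any object whose bounding sphere lies entirely outside one of them. The object tuples and function names below are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# a*x + b*y + c*z + d >= 0 means the point is on the inner side of the plane.
Plane = Tuple[float, float, float, float]


def frustum_planes(m: Sequence[Sequence[float]]) -> List[Plane]:
    """Extract normalized left/right/bottom/top/near/far planes from a 4x4 matrix.

    Assumes clip = m @ [x, y, z, 1] and a clip volume -w <= x, y, z <= w.
    """
    r0, r1, r2, r3 = m
    planes: List[Plane] = []
    for row, sign in ((r0, 1), (r0, -1), (r1, 1), (r1, -1), (r2, 1), (r2, -1)):
        a, b, c, d = (r3[i] + sign * row[i] for i in range(4))
        n = math.sqrt(a * a + b * b + c * c)  # normalize so distances are metric
        planes.append((a / n, b / n, c / n, d / n))
    return planes


def sphere_visible(planes: List[Plane], center, radius: float) -> bool:
    x, y, z = center
    for a, b, c, d in planes:
        if a * x + b * y + c * z + d < -radius:
            return False  # sphere lies entirely outside this plane
    return True  # inside or intersecting every plane (conservative test)


def cull(planes: List[Plane], objects) -> List[str]:
    """Return the names of (name, center, radius) objects that survive culling."""
    return [name for name, center, radius in objects
            if sphere_visible(planes, center, radius)]
```

The sphere test is conservative: a sphere near a frustum corner may be kept even though it is invisible, which only costs some extra rendering and never drops a visible object.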
The client-side code includes path-based motion prediction for a smooth, jerk-free walkthrough. The image-based renderer is useful when bandwidth is extremely low and/or the client does not have a hardware graphics accelerator. Our current implementation uses Visible Space Models as the geometry-based streaming format and trifocal tensors as the image-based streaming format. We present results of a study conducted over a representative range of the relevant parameters.
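
The choice between the two streaming formats, and the bandwidth-driven choice of mesh detail, can be sketched as below. The `ClientProfile` fields, the `MIN_GEOMETRY_KBPS` threshold, treating one round-trip latency as lost transfer time, and the round-robin refinement in `choose_lods` are all hypothetical assumptions; the abstract names the inputs (client capability, bandwidth, latency) but not the decision rule.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ClientProfile:
    bandwidth_kbps: float  # measured downstream bandwidth
    latency_ms: float      # round-trip latency
    has_gpu: bool          # hardware graphics accelerator present


# Hypothetical threshold; a real system would calibrate it empirically.
MIN_GEOMETRY_KBPS = 256.0


def choose_representation(client: ClientProfile) -> str:
    """Fall back to image-based streaming for weak clients or slow links."""
    if not client.has_gpu or client.bandwidth_kbps < MIN_GEOMETRY_KBPS:
        return "image"     # e.g. trifocal-tensor based streaming
    return "geometry"      # e.g. Visible Space Model streaming


def budget_for_interval(client: ClientProfile, interval_s: float) -> int:
    """Bytes transferable in one prediction interval, minus one round trip."""
    usable_s = max(0.0, interval_s - client.latency_ms / 1000.0)
    return int(client.bandwidth_kbps * 1000 / 8 * usable_s)


def choose_lods(lod_sizes: Dict[str, List[int]], budget_bytes: int) -> Dict[str, int]:
    """Pick a level of detail per visible object within a byte budget.

    lod_sizes[obj] lists byte sizes from coarsest (index 0) to finest.
    Every object starts at its coarsest level (sent even if over budget);
    leftover budget refines objects one level at a time, round-robin.
    """
    choice = {obj: 0 for obj in lod_sizes}
    used = sum(sizes[0] for sizes in lod_sizes.values())
    improved = True
    while improved:
        improved = False
        for obj in sorted(lod_sizes):
            sizes, level = lod_sizes[obj], choice[obj]
            if level + 1 < len(sizes):
                extra = sizes[level + 1] - sizes[level]
                if used + extra <= budget_bytes:
                    choice[obj] = level + 1
                    used += extra
                    improved = True
    return choice
```

Round-robin refinement spreads detail evenly across visible objects; a system that weights objects by screen-space size or distance would refine nearer objects first instead.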

| Year of completion: | 2008 |

| Advisor: |
