Proxy Based Compression of Depth Movies

Pooja Verlani (homepage)

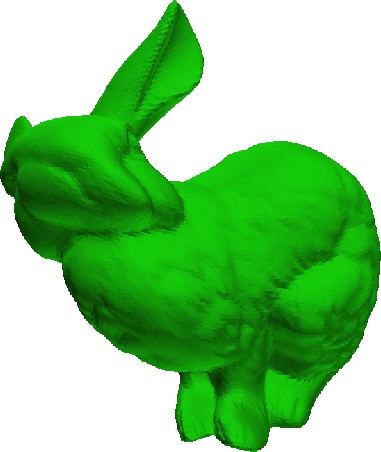

Sensors for 3D data are common today. These include multicamera systems, laser range scanners, etc. Some of them are suitable for the real-time capture of the shape and appearance of dynamic events. The 2-1/2D model of an aligned depth map and image, called a Depth Image, has been popular for Image Based Modeling and Rendering (IBMR). Capturing the 2-1/2D geometric structure and photometric appearance of dynamic scenes is possible today. Time-varying depth and image sequences, called Depth Movies, can extend IBMR to dynamic events. A captured event contains aligned sequences of depth maps and textures and is often streamed to a distant location for immersive viewing. Applications of such systems include virtual-space tele-conferencing, remote 3D immersion, 3D entertainment, etc. We study a client-server model for tele-immersion in which captured or stored depth movies are sent from a server to multiple remote clients on demand. Depth movies consist of dynamic depth maps and texture maps. Multiview image compression and video compression have been studied earlier, but there has been no study of dynamic depth map compression. This thesis contributes towards dynamic depth map compression for efficient transmission in a server-client 3D tele-immersive environment. Dynamic depth map data is heavy and needs efficient compression schemes. Immersive applications require time-varying sequences of depth images from multiple cameras to be encoded and transmitted. At the remote site, the 3D scene is regenerated by rendering the whole scene. Thus, depth movies of a generic 3D scene from multiple cameras are too heavy to send over a network given the available bandwidth.

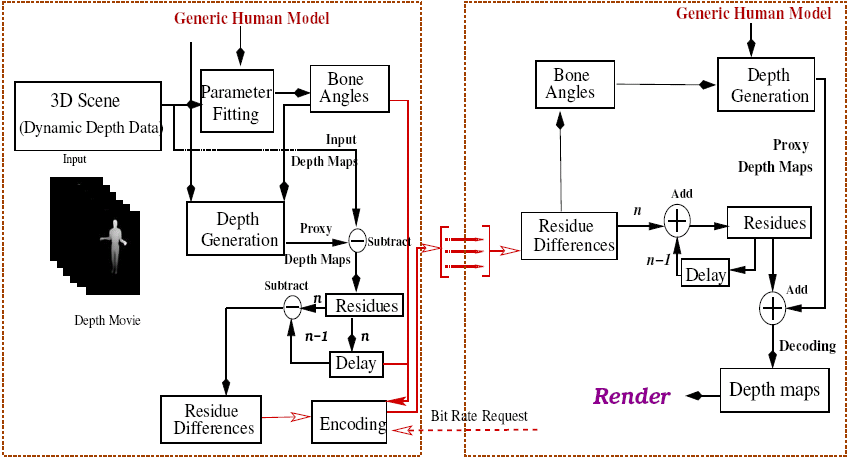

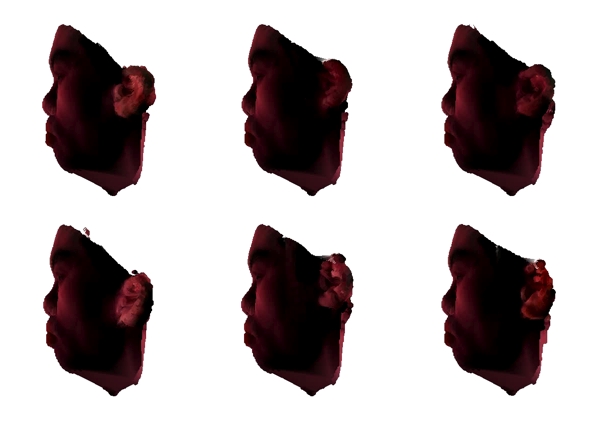

This thesis presents a scheme to compress depth movies of human actors using a parametric proxy model for the underlying action. We use a generic articulated human model as the proxy to represent the human in action, and the joint angles of the model to parametrize the proxy at each time instant. The proxy represents a common prediction of the scene structure. The difference between the captured depth and the depth of the proxy is called the residue and is used to represent the scene, exploiting spatial coherence. A few variations of this algorithm are presented in this thesis. We experimented with bit-wise compression of the residues and analyzed the quality of the generated 3D scene. Differences in residues across time are used to exploit temporal coherence. Intra-frame coded frames and difference-coded frames provide random access and high compression. We show results on several synthetic and real actions to demonstrate the compression ratio and the resulting quality using depth-based rendering of the decoded scene. The performance achieved is quite impressive. We present the articulation fitting tool, the compression module with different algorithms, and the server-client system with several variants for the user. The thesis first explains the concepts of 3D reconstruction by image-based modeling and rendering, compression of such 3D representations, and teleconferencing. It then introduces depth images and depth movies, followed by the main algorithms, examples, experiments and results.
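The residue idea described above can be illustrated with a minimal sketch. It is not the codec from the thesis: the function names, the integer depth maps stored as nested lists, the reading of "bit-wise compression" as dropping low-order bits, and the intra-frame period `gop` are all assumptions made for illustration. Each frame's residue (captured depth minus proxy depth) is quantized, then stored either whole (an "I" frame) or as a difference from the previous frame's quantized residue (a "D" frame).

```python
from typing import List, Tuple

Frame = List[List[int]]  # a depth map as rows of integer depth values (assumed layout)


def _combine(a: Frame, b: Frame, sign: int) -> Frame:
    """Element-wise a + sign * b for two equally sized frames."""
    return [[x + sign * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def quantized_residue(captured: Frame, proxy: Frame, bits: int) -> Frame:
    """Residue = captured - proxy, with the `bits` low-order bits dropped (lossy if bits > 0)."""
    return [[(c - p) >> bits for c, p in zip(rc, rp)] for rc, rp in zip(captured, proxy)]


def encode(captured_seq: List[Frame], proxy_seq: List[Frame],
           bits: int = 2, gop: int = 4) -> List[Tuple[str, Frame]]:
    """Emit an intra ("I") frame every `gop` frames and difference ("D") frames otherwise."""
    stream: List[Tuple[str, Frame]] = []
    prev: Frame = []
    for t, (cap, prox) in enumerate(zip(captured_seq, proxy_seq)):
        q = quantized_residue(cap, prox, bits)
        if t % gop == 0:
            stream.append(("I", q))                      # random-access point
        else:
            stream.append(("D", _combine(q, prev, -1)))  # temporal difference of residues
        prev = q
    return stream


def decode(stream: List[Tuple[str, Frame]], proxy_seq: List[Frame],
           bits: int = 2) -> List[Frame]:
    """Rebuild depth maps: proxy depth plus the de-quantized residue."""
    out: List[Frame] = []
    prev: Frame = []
    for (kind, payload), prox in zip(stream, proxy_seq):
        q = payload if kind == "I" else _combine(payload, prev, +1)
        prev = q
        restored = [[v << bits for v in row] for row in q]
        out.append(_combine(prox, restored, +1))
    return out
```

With `bits=0` the round trip is exact. With `bits > 0` each decoded depth value undershoots the captured one by less than `2**bits`, because the right shift floors the residue. Differences are taken between quantized residues, so the error does not accumulate across "D" frames. A real system would follow this step with an entropy coder, which is omitted here.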

| Year of completion: | 2008 |

| Advisor: | P. J. Narayanan |

Related Publications

Pooja Verlani, P. J. Narayanan - Proxy-Based Compression of 2-1/2D Structure of Dynamic Events for Tele-immersive Systems, Proceedings of 3D Data Processing, Visualization and Transmission, June 18-20, 2008, Georgia Institute of Technology, Atlanta, GA, USA. [PDF]

Pooja Verlani, P. J. Narayanan - Parametric Proxy-Based Compression of Multiple Depth Movies of Humans, Proceedings of Data Compression Conference 2008, March 25-27, 2008, Salt Lake City, Utah. [PDF]

Pooja Verlani, Aditi Goswami, P. J. Narayanan, Shekhar Dwivedi and Sashi Kumar Penta - Depth Image: Representations and Real-time Rendering, Third International Symposium on 3D Data Processing, Visualization and Transmission, Chapel Hill, North Carolina, June 14-16, 2006. [PDF]

Downloads

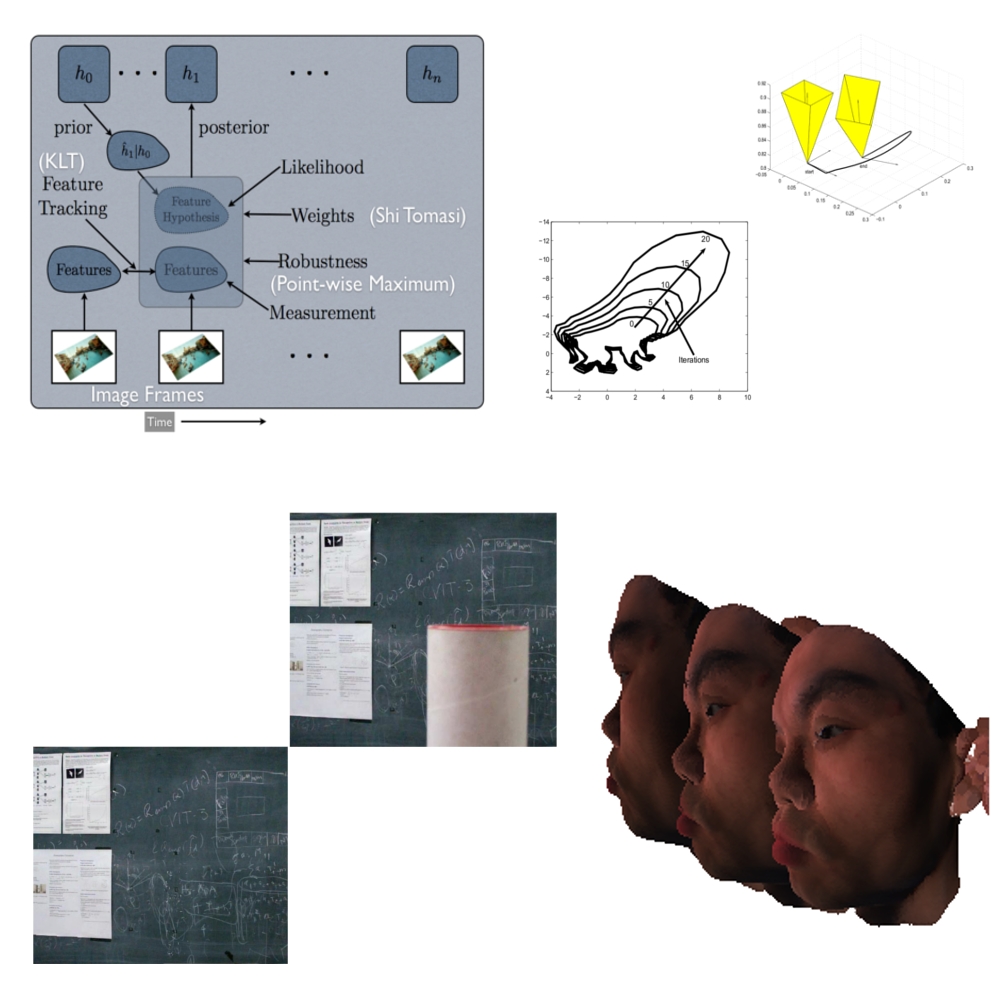

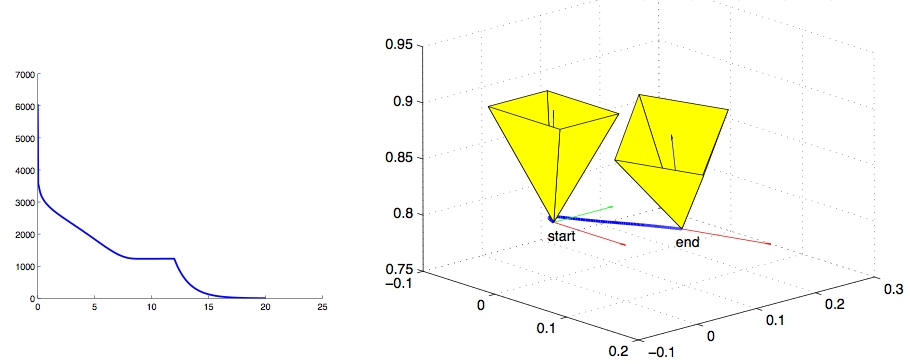

Then we show an application of frequency-domain-based MVG to the task of robot positioning. Positioning (or visual servoing) is a task that enables a robot to assume a desired pose with respect to an object of interest, with the help of a camera. This object might be a heart, as in surgery, or an automobile part, as in industrial settings. We show that by using frequency-domain techniques in MVG, we can obtain algorithms that require only rough correspondence between images, unlike earlier algorithms that needed specific point-to-point correspondences. This is further developed into a general framework for servoing that is capable of straight Cartesian paths and path following, which are recent problems in servoing. Within computer vision, we explore the use of MVG for various image and video editing tasks. Tasks like removing a scene object from a video in a consistent manner fall in this category (predicting what the video would look like without the object). In this area, we propose an algorithm for video inpainting, where specific objects are removed from a video and the resulting space-time holes are filled in a consistent manner. The algorithm is fully automatic, unlike traditional image and video inpainting algorithms, and takes two functions as input: one defines the object to be removed, and the other defines the background model that is used for hole-filling.

GPUs have been used increasingly for a wide range of problems involving heavy computations in graphics, computer vision, scientific processing, etc. One of the key reasons for their wide acceptance is the high performance-to-cost ratio. In less than a decade, GPUs have grown from non-programmable graphics co-processors to general-purpose units with a high-level language interface that deliver 1 TFLOPS for $400. The GPU's architecture, including the core layout, memory, scheduling, etc., is largely hidden. It also changes more frequently than single-core and multi-core CPU architectures. This makes it difficult for non-expert users to extract good performance. Suboptimal implementations can pay severe performance penalties on the GPU. This is likely to persist as many-core architectures and massively multithreaded programming models gain popularity in the future. One way to exploit the GPU's computing power effectively is through high-level primitives upon which other computations can be built. All architecture-specific optimizations can be incorporated into the primitives by designing and implementing them carefully. Data-parallel primitives play the role of building blocks for many other algorithms on the fundamentally SIMD architecture of the GPU. Operations like sorting, searching, etc. have been implemented for large data sets.

indexes of records. This facilitates the use of the GPU as a co-processor for split or sort, with the actual data movement handled separately. We can compute the split indexes for a list of 32 million records in 180 milliseconds for a 32-bit key and in 800 ms for a 96-bit key.