Efficient Image Retrieval Methods For Large Scale Dynamic Image Databases

Suman Karthik

The commoditization of imaging hardware has led to exponential growth in image and video data, making it difficult to access relevant data when it is required. This has prompted a great deal of research into multimedia retrieval, and Content Based Image Retrieval (CBIR) in particular. Yet CBIR has not found widespread acceptance in real-world systems. One of the primary reasons is the inability of traditional CBIR systems to scale effectively to large-scale image databases. The introduction of the Bag of Words model for image retrieval has alleviated some of these issues, yet bottlenecks remain, and the model's utility is limited for highly dynamic image databases (image databases where the set of images is constantly changing). In this thesis, we focus on developing methods that address the scalability issues of traditional CBIR systems and the adaptability issues of Bag of Words based image retrieval systems.

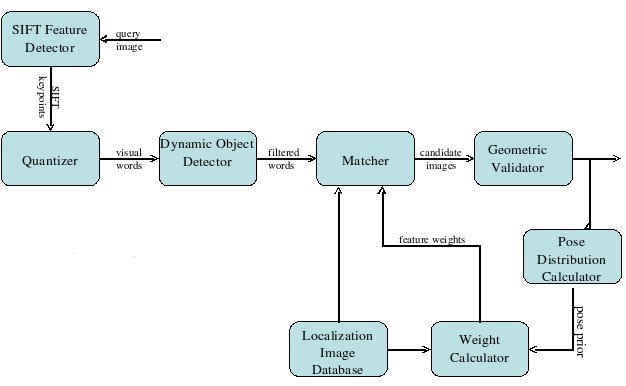

Traditional CBIR systems find relevant images by finding nearest neighbors in a high-dimensional feature space. This is computationally expensive and does not scale as the number of images in the database grows. We address this problem by posing image retrieval as a text retrieval task: each image is transformed into a text document called its Virtual Textual Description (VTD). Once this transformation is done, we further enhance the performance of the system by incorporating a novel relevance feedback algorithm called discriminative relevance feedback. We then use the virtual textual descriptions to index and retrieve images efficiently using a data structure called the Elastic Bucket Trie (EBT).
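The idea of treating images as text documents can be illustrated with a minimal sketch. It is not the thesis's VTD construction or the Elastic Bucket Trie: it assumes each image is a list of region feature vectors with values in [0, 1], quantizes each vector into a token, and uses a plain inverted index in place of the EBT. The names `region_to_word`, `build_index`, and `search`, and the `bins` parameter, are hypothetical.

```python
from collections import defaultdict

def region_to_word(features, bins=4):
    """Quantize each feature (assumed to lie in [0, 1]) into a bin and
    join the bin ids into a single text token."""
    ids = [min(int(f * bins), bins - 1) for f in features]
    return "w" + "_".join(str(i) for i in ids)

def image_to_text(regions, bins=4):
    """Turn an image (a list of region feature vectors) into a list of tokens."""
    return [region_to_word(r, bins) for r in regions]

def build_index(images):
    """Inverted index: token -> set of image ids containing that token."""
    index = defaultdict(set)
    for image_id, regions in images.items():
        for word in image_to_text(regions):
            index[word].add(image_id)
    return index

def search(index, query_regions):
    """Rank images by the number of distinct query tokens they share."""
    scores = defaultdict(int)
    for word in set(image_to_text(query_regions)):
        for image_id in index.get(word, ()):
            scores[image_id] += 1
    return sorted(scores, key=lambda i: (-scores[i], i))

# Toy data (made up for illustration): three images, two regions each.
images = {
    "sunset": [(0.9, 0.4, 0.1), (0.8, 0.3, 0.1)],
    "forest": [(0.1, 0.7, 0.2), (0.2, 0.6, 0.1)],
    "beach": [(0.9, 0.8, 0.5), (0.1, 0.5, 0.9)],
}
index = build_index(images)
results = search(index, [(0.95, 0.45, 0.05)])
print(results)
```

A lookup touches only the posting lists of the query tokens, so its cost depends on the number of matching images rather than on a nearest-neighbor scan over the whole database.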

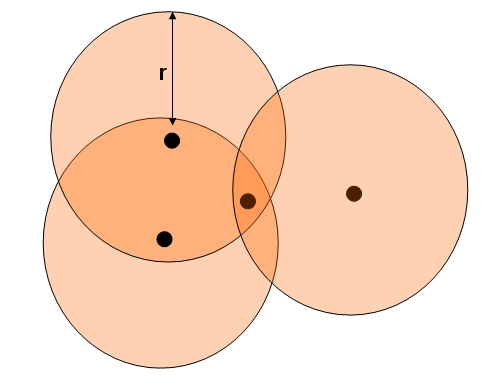

Contemporary bag of visual words approaches to image retrieval perform one-time, offline vector quantization to create the visual vocabulary. However, these methods do not adapt well to dynamic image databases, whose nature constantly changes as new data is added. In this thesis, we design, present, and experimentally evaluate a novel method for incremental vector quantization (IVQ) for use in image and video retrieval systems with dynamic databases.
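To make the contrast with one-time offline quantization concrete, here is a minimal sketch of an online quantizer that grows its vocabulary as descriptors arrive. It is an assumption-laden illustration, not the IVQ method of the thesis: it uses a simple leader-style rule (reuse the nearest codeword if it lies within a fixed radius, otherwise create a new one) with a running-mean update. The class name `IncrementalQuantizer` and the `radius` parameter are hypothetical.

```python
import math

class IncrementalQuantizer:
    """Online vocabulary: codewords are created as new data arrives."""

    def __init__(self, radius):
        self.radius = radius      # assumed maximum distance to reuse a codeword
        self.centroids = []       # one centroid per visual word
        self.counts = []          # number of descriptors assigned to each word

    def add(self, descriptor):
        """Assign a descriptor to a visual word id, creating one if needed."""
        best, best_dist = None, float("inf")
        for i, centroid in enumerate(self.centroids):
            d = math.dist(centroid, descriptor)
            if d < best_dist:
                best, best_dist = i, d
        if best is None or best_dist > self.radius:
            # No existing codeword is close enough: extend the vocabulary.
            self.centroids.append(list(descriptor))
            self.counts.append(1)
            return len(self.centroids) - 1
        # Otherwise move the matched centroid toward the new descriptor
        # using a running mean.
        self.counts[best] += 1
        n = self.counts[best]
        self.centroids[best] = [
            c + (x - c) / n for c, x in zip(self.centroids[best], descriptor)
        ]
        return best

quantizer = IncrementalQuantizer(radius=1.0)
stream = [(0.0, 0.0), (0.2, 0.0), (5.0, 5.0), (5.2, 5.0), (0.1, 0.1)]
words = [quantizer.add(d) for d in stream]
print(words, len(quantizer.centroids))
```

Because no descriptor is ever re-clustered, adding images never requires rebuilding the vocabulary, which is the property a dynamic database needs.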

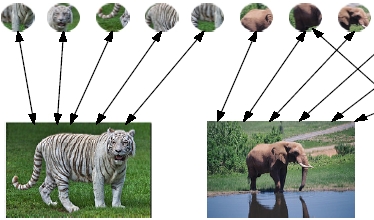

Semantic indexing has been invaluable in improving the performance of bag of words based image retrieval systems. However, contemporary approaches to semantic indexing for bag of words image retrieval do not adapt well to dynamic image databases. We introduce and experimentally evaluate a bipartite graph model (BGM), a scalable data structure that supports online semantic indexing, together with a cash flow algorithm that operates on the BGM to retrieve semantically relevant images from the database. We also demonstrate how traditional text search engines can be used to build scalable image retrieval systems.
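The abstract does not spell out the cash flow algorithm, so the following is only a rough sketch of the general idea under stated assumptions: images and visual words form the two sides of a bipartite graph, the query words start with one unit of "cash", and cash is split evenly along edges for a fixed number of rounds. The `keep` fraction, the `rounds` limit, and all names are hypothetical choices, not the thesis's definitions.

```python
from collections import defaultdict

class BipartiteGraph:
    """Images on one side, visual words on the other; edges mean 'contains'."""

    def __init__(self):
        self.image_words = defaultdict(set)
        self.word_images = defaultdict(set)

    def add_image(self, image_id, words):
        # Insertion is purely local, so images can be added online.
        for word in words:
            self.image_words[image_id].add(word)
            self.word_images[word].add(image_id)

def cash_flow(graph, query_words, rounds=2, keep=0.5):
    """Spread one unit of cash from the query words through the graph.

    Each image keeps a fraction `keep` of what it receives (its score)
    and forwards the rest evenly to its words (assumed rule).
    """
    known = [w for w in set(query_words) if w in graph.word_images]
    word_cash = {w: 1.0 / len(known) for w in known}
    scores = defaultdict(float)
    for _ in range(rounds):
        image_cash = defaultdict(float)
        for word, amount in word_cash.items():
            images = graph.word_images[word]
            for image_id in images:
                image_cash[image_id] += amount / len(images)
        word_cash = defaultdict(float)
        for image_id, amount in image_cash.items():
            scores[image_id] += keep * amount
            words = graph.image_words[image_id]
            for word in words:
                word_cash[word] += (1 - keep) * amount / len(words)
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))

graph = BipartiteGraph()
graph.add_image("a", ["sky", "sea"])
graph.add_image("b", ["sea", "sand"])
graph.add_image("c", ["sand", "palm"])
graph.add_image("d", ["car"])
ranked = cash_flow(graph, ["sky"])
print(ranked)
```

In this toy run, image `b` receives a score even though it does not contain the query word `sky`, because cash reaches it through the shared word `sea`. That indirect path is the kind of semantic association the graph is meant to capture without an offline decomposition step.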

| Year of completion: | July 2009 |
| Advisor: | C. V. Jawahar |

Related Publications

- Suman Karthik, Chandrika Pulla and C.V. Jawahar, Incremental Online Semantic Indexing for Image Retrieval in Dynamic Databases, Proceedings of the International Workshop on Semantic Learning Applications in Multimedia (SLAM: CVPR 2009), 20-25 June 2009, Miami, Florida, USA.

- Suman Karthik, C.V. Jawahar, Analysis of Relevance Feedback in Content Based Image Retrieval, Proceedings of the 9th International Conference on Control, Automation, Robotics and Vision (ICARCV), 2006, Singapore.

- Suman Karthik, C.V. Jawahar, Virtual Textual Representation for Efficient Image Retrieval, Proceedings of the 3rd International Conference on Visual Information Engineering (VIE), 26-28 September 2006, Bangalore, India.

- Suman Karthik, C.V. Jawahar, Efficient Region Based Indexing and Retrieval for Images with Elastic Bucket Tries, Proceedings of the International Conference on Pattern Recognition (ICPR), 2006.

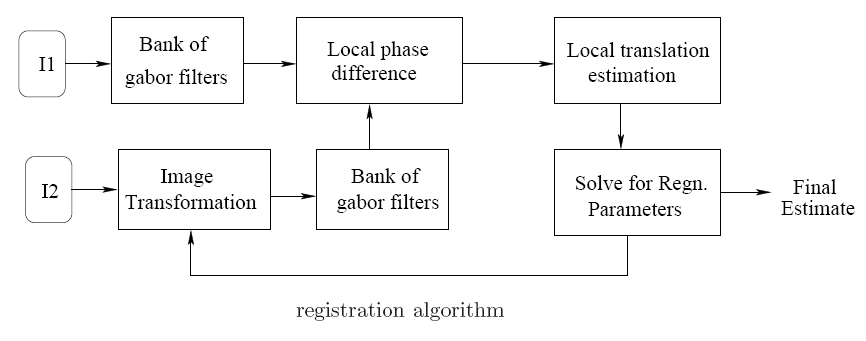

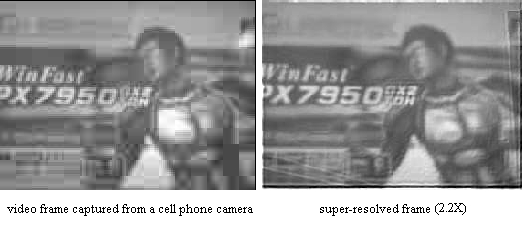

We explore an alternative solution to the problem of robustness in the registration step of a super-resolution (SR) algorithm. We present an accurate registration algorithm that uses local phase information, which is robust to the above degradations. The registration step is formulated as an optimization of the local phase alignment at various spatial frequencies. We derive the theoretical error rate of the estimates in the presence of non-ideal band-pass behavior of the filter and show that the error converges to zero over iterations. We also show the invariance of local phase to a class of blur kernels. We demonstrate experimental results on images taken under varying conditions.
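The link between phase and displacement can be seen in a much simpler setting than the local phase method described above. The sketch below uses the global Fourier shift theorem on a 1D periodic signal, which is a swapped-in simplification and not the local phase algorithm: a shift by s samples multiplies the k-th DFT coefficient by exp(-2πi·k·s/N), so the phase difference at one frequency yields a sub-sample shift estimate. The function names and the test signal are hypothetical.

```python
import cmath
import math

def dft_coefficient(signal, k):
    """k-th coefficient of the discrete Fourier transform (naive sum)."""
    n = len(signal)
    return sum(
        x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)
    )

def estimate_shift(reference, shifted, k=1):
    """Estimate the shift (in samples) from the phase difference at frequency k.

    Unambiguous only while |shift| < n / (2 * k).
    """
    n = len(reference)
    cross = dft_coefficient(reference, k) * dft_coefficient(shifted, k).conjugate()
    return cmath.phase(cross) * n / (2 * math.pi * k)

def sample(shift, n=64):
    """Sample a band-limited periodic test signal delayed by `shift` samples."""
    return [
        math.sin(2 * math.pi * (t - shift) / n)
        + 0.5 * math.cos(2 * math.pi * 3 * (t - shift) / n)
        for t in range(n)
    ]

reference = sample(0.0)
shifted = sample(2.5)
estimate = estimate_shift(reference, shifted)
print(round(estimate, 6))
```

A local phase method would replace the global transform with band-pass filter responses around each pixel, which is where the non-ideal filter behavior analyzed in the text comes into play.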

Recently, Lin and Shum have shown an upper limit on multi-frame SR techniques. For practical purposes this limit is very small. Another class of SR algorithms formulates high-quality image generation as an inference problem, in which the high-resolution image is inferred from a set of learned training patches. This class of algorithms works well for natural structures, but for many man-made structures it does not produce accurate results. We propose to capture images at the optimal zoom from the perspective of image super-resolution. Images captured at this zoom contain sufficient information to be magnified further by any SR algorithm that promotes step edges and certain features. This has a variety of applications in consumer cameras, large-scale automated image mosaicing, robotics, and improving the recognition accuracy of many computer vision algorithms. Existing efforts are limited to imaging a pre-determined object at the right zoom. In the proposed approach, we learn the patch structures at various down-sampling factors. To further enhance the output, we impose the local context around each patch in an MRF framework. Several constraints are introduced to minimize the extent of zoom-in.