Vision based Robot Navigation using an On-line Visual Experience

D. Santosh Kumar

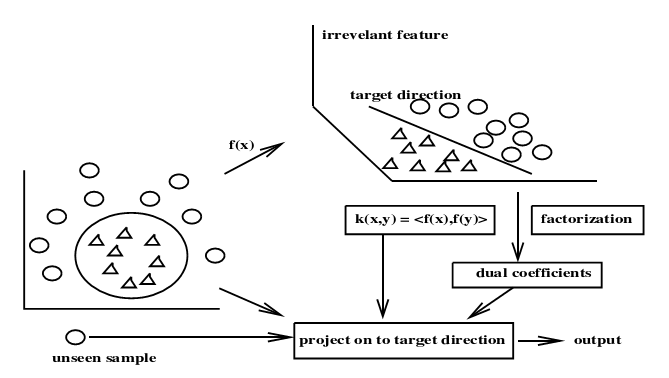

Vision-based robot navigation has long been a fundamental goal in both robotics and computer vision research. While the problem is largely solved for robots equipped with active range-finding devices, the task remains challenging, for a variety of reasons, for robots equipped only with vision sensors. Vision is an attractive sensor because it enables economically viable systems with simpler sensing requirements. It allows passive sensing of the environment and provides valuable semantic information about the scene that is unavailable to other sensors. Two popular paradigms have emerged for this problem: model-based and model-free algorithms. Model-based approaches require model information about the environment to be available a priori, whereas model-free approaches compute the required 3D information online. Model-free navigation paradigms have gained popularity over model-based approaches due to their simpler assumptions and wider applicability.

This thesis discusses a new paradigm for vision-based navigation, namely image-based navigation. The basic observation is that model-free paradigms involve an intermediate depth computation that is redundant for the purpose of navigation. Instead, the motion commands required to control the robot can be inferred directly from the acquired images. This approach is attractive because the modeling of objects is replaced by the memorization of views, which is far easier than 3D modeling.
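Inferring motion commands directly from image measurements is the core idea of image-based visual servoing (IBVS). As an illustration only, here is the classical textbook IBVS law for point features, v = -λ L⁺ (s - s*), where s and s* are the current and desired normalized image coordinates, L is the stacked interaction matrix and v is the camera twist. This is not necessarily the controller developed in the thesis; the function names, the default gain, and the assumption that all points share one rough depth estimate are choices made for this sketch. Note that even this law needs an approximate depth Z inside L, and a constant estimate is a common practical shortcut.

```python
import numpy as np


def interaction_matrix(points, depth):
    """Stack the 2x6 image Jacobian of each normalized image point (x, y).

    Assumption for this sketch: every point lies at the same estimated depth.
    """
    rows = []
    for x, y in points:
        rows.append([-1.0 / depth, 0.0, x / depth, x * y, -(1.0 + x * x), y])
        rows.append([0.0, -1.0 / depth, y / depth, 1.0 + y * y, -x * y, -x])
    return np.array(rows)


def ibvs_velocity(current, desired, depth=1.0, gain=0.5):
    """Camera twist (vx, vy, vz, wx, wy, wz) from v = -gain * pinv(L) @ (s - s*).

    `depth` and `gain` are illustrative defaults, not values from the thesis.
    """
    current = np.asarray(current, dtype=float)
    desired = np.asarray(desired, dtype=float)
    error = (current - desired).ravel()      # e = s - s*, ordered x1, y1, x2, y2, ...
    L = interaction_matrix(current, depth)   # Jacobian evaluated at the current features
    return -gain * np.linalg.pinv(L) @ error


desired = [(-0.1, -0.1), (0.1, -0.1), (0.1, 0.1), (-0.1, 0.1)]
current = [(x + 0.05, y - 0.02) for x, y in desired]
twist = ibvs_velocity(current, desired, depth=2.0)
```

In a servo loop the twist is recomputed from each new frame until the feature error vanishes. With four or more well-spread points L typically has full column rank, so the pseudo-inverse gives the least-squares twist that drives the error down exponentially to first order.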

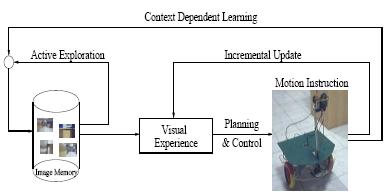

This thesis develops a new image-based navigation architecture that enables a robot to learn about the world online. The framework allows a robot to autonomously explore and navigate a variety of unknown environments, using visual maps built contextually in the process, in a way that supports path planning and goal-oriented tasks. It also incorporates feedback received from performing specific goal-oriented tasks to update the visual representation. Based on this architecture, the thesis discusses the design of the individual algorithms required for the navigation task: exploration, servoing and learning.
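The architecture relies on visual maps that are built during exploration, support path planning, and are updated from task feedback. One common way to realize such a map, assumed here purely for illustration, is a topological graph whose nodes are memorized key views and whose edges are traversals the robot has already made; planning then reduces to a shortest-path search. The class `VisualMap`, its methods, and the use of Dijkstra's algorithm are hypothetical choices for this sketch, not the design described in the thesis.

```python
import heapq
from collections import defaultdict


class VisualMap:
    """Hypothetical topological map: nodes are key-view IDs, edges are traversals."""

    def __init__(self):
        self.edges = defaultdict(dict)

    def add_transition(self, a, b, cost=1.0):
        # Record that the robot moved between key views a and b
        # (assumed traversable in both directions at the same cost).
        self.edges[a][b] = cost
        self.edges[b][a] = cost

    def update_cost(self, a, b, cost):
        # Feedback from a goal-oriented task (e.g. a slow or failed traversal)
        # re-weights the edge so that later plans can avoid it.
        self.add_transition(a, b, cost)

    def plan(self, start, goal):
        """Dijkstra shortest path over key views; returns a list of IDs or None."""
        dist = {start: 0.0}
        prev = {}
        heap = [(0.0, start)]
        visited = set()
        while heap:
            d, node = heapq.heappop(heap)
            if node in visited:
                continue
            visited.add(node)
            if node == goal:
                break
            for nbr, w in self.edges[node].items():
                nd = d + w
                if nd < dist.get(nbr, float("inf")):
                    dist[nbr] = nd
                    prev[nbr] = node
                    heapq.heappush(heap, (nd, nbr))
        if goal not in visited:
            return None  # goal view not reachable from what has been explored
        path = [goal]
        while path[-1] != start:
            path.append(prev[path[-1]])
        return path[::-1]
```

For example, after `add_transition("A", "B")`, `add_transition("B", "C")` and `add_transition("A", "C", 5.0)`, planning from `"A"` to `"C"` passes through `"B"`; raising the B-C cost with `update_cost` reroutes the plan directly to `"C"`. Each edge of a returned path would then be executed as a servoing task between consecutive views.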

| Year of completion: | June 2007 |
|---|---|
| Advisor: | C. V. Jawahar |

Related Publications

- D. Santosh and C.V. Jawahar - Visual Servoing in Non-Rigid Environment: A Space-Time Approach, Proc. of IEEE International Conference on Robotics and Automation (ICRA'07), Roma, Italy, 2007.

- D. Santosh Kumar and C.V. Jawahar - Visual Servoing in Presence of Non-Rigid Motion, Proc. 18th IEEE International Conference on Pattern Recognition (ICPR'06), Hong Kong, Aug 2006.

- D. Santosh Kumar and C.V. Jawahar - Robust Homography-Based Control for Camera Positioning in Piecewise Planar Environment, 5th Indian Conference on Computer Vision, Graphics and Image Processing, Madurai, India, LNCS 4338, pp. 906-918, 2006.

- D. Santosh and C.V. Jawahar - Cooperative CONDENSATION-based Recognition, in 8th Asian Conference on Computer Vision (ACCV) (Under Review), 2007.

- D. Santosh and C.V. Jawahar - Visual Servoing in Non-Rigid Environments, in IEEE Transactions on Robotics (ITRO) (Under Submission), 2008.

- D. Santosh and C.V. Jawahar - Robot Path Planning by Reinforcement Learning along with Potential Fields, in 25th IEEE International Conference on Robotics and Automation (ICRA) (Under Submission), 2008.

- D. Santosh, A. Supreeth and C.V. Jawahar - Mobile Robot Exploration and Navigation using a Single Camera, in 25th IEEE International Conference on Robotics and Automation (ICRA) (Under Submission), 2008.
