Repetition Detection and Shape Reconstruction in Relief Images.

Harshit Agrawal (homepage)

Relief carving is a very popular sculpting technique that has been used for decoration and for depicting stories and scenes from ancient times until today. Over time, many ancient cultural heritage artifacts are being damaged, and one important way to aid their preservation and study is to capture them digitally.

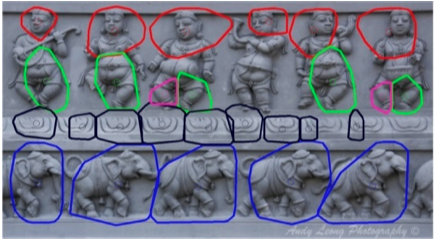

Relief carvings have specific attributes that make them different from regular sculptures, and these attributes can be exploited in different computer vision tasks. Repetitive patterns are one such frequently occurring phenomenon in reliefs. Algorithms for detecting repeating patterns in images often assume that the repetition is regular and highly similar across instances. Approximate repetitions are also of interest in many domains, such as hand-carved sculptures, wall decorations, and groups of natural objects. Detecting such repetitive structures can help in applications such as image retrieval, image inpainting and 3D reconstruction. In this work, we look at a specific class of approximate repetitions: those in images of hand-carved relief structures. We present a robust hierarchical method for detecting such repetitions. Given a single relief panel image, our algorithm finds dense matches of local features across the image at various scales. The matching features are then grouped based on their geometric configuration to find repeating elements. We also propose a method to group the repeating elements in order to segment the repetitive patterns in an image. In relief images, foreground and background have nearly the same texture, so a match of a single feature does not provide reliable evidence of repetition. Our grouping algorithm integrates evidence of repetition across many features to reliably find repeating patterns. The input image is processed on a scale-space pyramid to detect repetitions at different scales. Our method has been tested on images with a large variety of complex repetitive patterns, and the qualitative results show the robustness of our approach.

Point-based rendering suffers from the limited resolution of the fixed number of samples representing the model. At some distances, the screen-space resolution is high relative to the point samples, which causes under-sampling. A better way of rendering a model is to re-sample the surface during rendering at the desired resolution in object space, guaranteeing a sampling density sufficient for the image resolution. Output-sensitive sampling samples objects at a resolution that matches the expected resolution of the output image. This is crucial for hole-free point-based rendering. Many technical issues related to point-based graphics boil down to reconstruction and re-sampling. A point-based representation should be as small as possible while conveying the shape well.
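The idea of grouping matched features by their geometric configuration, as described for the repetition detector, can be illustrated with a small voting scheme. This is only a minimal sketch under stated assumptions, not the method from the thesis: it assumes feature matches are already available as pairs of pixel coordinates, and it accumulates votes over quantized displacement vectors so that no single match decides the result. The function name `dominant_offsets` and the parameters `bin_size` and `min_votes` are hypothetical.

```python
from collections import Counter


def dominant_offsets(matches, bin_size=8, min_votes=3):
    """Vote on quantized displacement vectors of matched feature pairs.

    matches: iterable of ((x1, y1), (x2, y2)) pixel coordinates (assumed input).
    Returns [(dx, dy, votes), ...] sorted by decreasing vote count.
    """
    votes = Counter()
    for (x1, y1), (x2, y2) in matches:
        dx, dy = x2 - x1, y2 - y1
        # A match A->B and its reverse B->A describe the same repetition,
        # so flip the vector into one canonical half-plane.
        if (dx, dy) < (0, 0):
            dx, dy = -dx, -dy
        # Quantize so that slightly different offsets share a bin;
        # hand-carved repetitions are only approximate.
        votes[(round(dx / bin_size), round(dy / bin_size))] += 1
    return [
        (kx * bin_size, ky * bin_size, n)
        for (kx, ky), n in votes.most_common()
        if n >= min_votes
    ]


# Four matches roughly 40 pixels apart horizontally, plus one outlier.
example_matches = [
    ((0, 0), (40, 1)),
    ((10, 5), (51, 5)),
    ((20, 30), (59, 31)),
    ((100, 10), (60, 10)),  # same offset, opposite direction
    ((0, 0), (13, 77)),     # spurious match, receives a single vote
]
print(dominant_offsets(example_matches))
```

Requiring several votes per bin reflects the point made in the abstract: because foreground and background texture are similar in reliefs, an isolated match is weak evidence, while many matches agreeing on one offset are much stronger.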

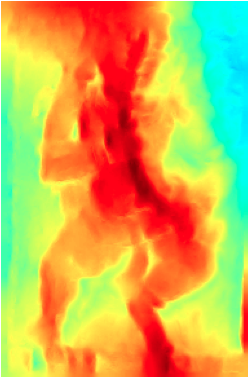

Reconstructing geometric models of relief carvings is also of great importance in digitally preserving heritage artifacts. For reliefs, laser scanners and structured-lighting techniques are not always feasible, or are very expensive, given the uncontrolled environment. Single-image shape from shading is an underconstrained problem that tries to solve for the surface normals given the intensity image. Various constraints are used to make the problem tractable. To avoid uncontrolled lighting, we use a pair of images taken with and without flash and compute an image under known illumination. This image is used as the input to the shape reconstruction algorithms. We present techniques that reconstruct shape from relief images using prior information learned from examples. We learn the variations in geometric shape corresponding to image appearances under different lighting conditions using sparse representations. Given a new image, we estimate the most appropriate shape that would result in the given appearance under the specified lighting conditions. We integrate the prior with the normals computed from the reflectance equation in a MAP framework. We test our approach on relief images and compare the results with state-of-the-art shape-from-shading algorithms.
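One common way to obtain an image under known illumination from a flash/no-flash pair is to subtract the ambient (no-flash) image from the flash image, which leaves approximately the contribution of the flash alone. The sketch below illustrates that idea only; whether the thesis uses exactly this computation is an assumption. It further assumes both images are linear-intensity, pixel-aligned, taken with the same exposure, and stored as nested lists of floats. The function name `flash_only_image` is hypothetical.

```python
def flash_only_image(flash, no_flash):
    """Per-pixel difference flash - no_flash, clamped at zero.

    Assumes linear intensities, identical exposure and a static,
    pixel-aligned scene (assumptions, not stated in the abstract).
    """
    same_shape = len(flash) == len(no_flash) and all(
        len(row_f) == len(row_a) for row_f, row_a in zip(flash, no_flash)
    )
    if not same_shape:
        raise ValueError("images must have the same shape")
    return [
        # Noise can make the difference slightly negative, so clamp it.
        [max(f - a, 0.0) for f, a in zip(row_f, row_a)]
        for row_f, row_a in zip(flash, no_flash)
    ]


flash = [[0.75, 0.5], [0.25, 0.125]]
ambient = [[0.25, 0.125], [0.25, 0.5]]
print(flash_only_image(flash, ambient))
```

Because the flash position relative to the camera is known, the resulting difference image can be treated as lit by a single known source, which is the kind of input that shape-from-shading methods expect.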

| Year of completion: | July 2014 |
| Advisor: | Prof. C. V. Jawahar |

Related Publications

- Harshit Agrawal, Anoop M. Namboodiri - Shape Reconstruction from a Single Relief Image, Proceedings of the 2nd Asian Conference on Pattern Recognition, 05-08 Nov. 2013, Okinawa, Japan. [PDF]

- Harshit Agrawal, Anoop M. Namboodiri - Detection and Segmentation of Approximate Repetitive Patterns in Relief Images, IEEE Eighth Indian Conference on Computer Vision, Graphics and Image Processing (ICVGIP 2012), December 2012. [Project]