Improving image quality of synthetically zoomed tomographic images.

Neha Dixit

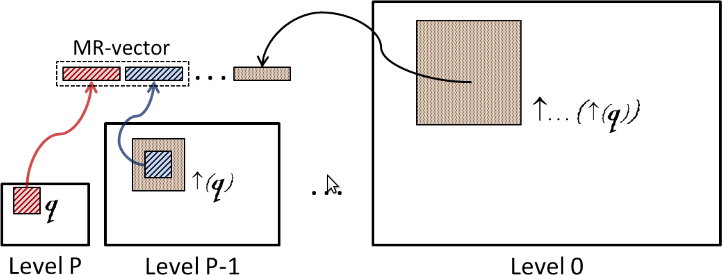

3-D medical images such as Computed Tomography (CT), Positron Emission Tomography (PET) and Magnetic Resonance Imaging (MRI) are commonly used for diagnosis. Tomographic reconstruction is the underlying technique for all of these images. It is often of interest to increase their resolution. Possible solutions include increasing the number of detectors, changing the detector material or geometry, and increasing the excitation energy level or the scanning time. These solutions are limited in terms of practical implementation. Hence, solutions at the processing level (after data acquisition) are of interest. This thesis focuses on the problem of preserving the detail and quality of tomographic images after upsampling. We explore two approaches to generating higher-resolution images, both based on re-examining the lattice geometry used for reconstruction. We offer two novel solutions: one uses a hexagonal lattice instead of the conventional square lattice, and the other is a super-resolution strategy that combines samples drawn from two lattices.

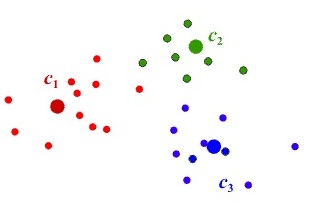

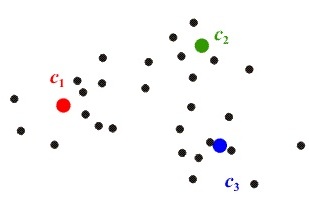

In the first solution, we reconstruct the image onto a hexagonal lattice. These samples are then interpolated to obtain samples on the desired higher-resolution square lattice. In the super-resolution (SR) based solution, we reconstruct the image onto two low-resolution lattices. The lattices are rotated versions of each other, so each provides a 'different view' of the object or scene. Both square and hexagonal sampling have been considered for generating the low-resolution images.
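The lattice geometry described above can be illustrated with a short sketch. It is not the thesis implementation: the function names `hexagonal_lattice` and `rotate`, the 4 x 4 lattice size, the unit spacing and the 30-degree rotation angle are all assumptions chosen for illustration. The sketch only generates the sample positions of one hexagonal lattice and a rotated copy, then pools them as an SR-style combination of two lattices would. Reconstruction onto these positions and interpolation to a square lattice are not shown.

```python
import numpy as np


def hexagonal_lattice(n_rows, n_cols, spacing=1.0):
    """Return an (n_rows * n_cols, 2) array of (x, y) hexagonal sample positions.

    Hypothetical helper: rows are spaced by spacing * sqrt(3) / 2 and every
    odd row is shifted by spacing / 2, so nearest neighbours are one
    `spacing` apart.
    """
    cc, rr = np.meshgrid(np.arange(n_cols), np.arange(n_rows))
    x = spacing * (cc + 0.5 * (rr % 2))      # odd rows shifted by half a sample
    y = spacing * (np.sqrt(3) / 2.0) * rr    # compressed row pitch
    return np.column_stack([x.ravel(), y.ravel()])


def rotate(points, angle_rad, center=(0.0, 0.0)):
    """Rotate 2-D points counter-clockwise by angle_rad about center."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    rotation = np.array([[c, -s], [s, c]])
    center = np.asarray(center, dtype=float)
    return (points - center) @ rotation.T + center


# Two low-resolution lattices that are rotated versions of each other.
# The 30-degree angle is an assumption, not a value taken from the thesis.
lattice_a = hexagonal_lattice(4, 4)
lattice_b = rotate(lattice_a, np.deg2rad(30.0), center=lattice_a.mean(axis=0))

# Pooled sample positions from both lattices.
combined = np.vstack([lattice_a, lattice_b])
```

Rotating about the lattice centroid keeps both lattices over the same region. Because a hexagonal lattice maps onto itself under 60-degree rotations, an angle such as 30 degrees yields sample positions that differ from the original ones.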

The two solutions have been implemented and tested on a variety of test objects, called phantoms, of two types: analytical and real. The three analytically generated phantoms were concentric rings, dots and lines. The two real PET phantoms were the NEMA and Hoffman brain phantoms. The results were benchmarked against upsampled images generated by direct upsampling and by an existing super-resolution technique that uses Union of Shifted Lattice (USL) samples. The evaluation shows a qualitative and quantitative improvement over direct upsampling. The SR-based results are comparable in quality to those obtained with USL. However, the main strength of the proposed SR-based solution is its computational savings and the reduced number of images required for a given upsampling factor.

| Year of completion: | March 2012 |
| Advisor: | Jayanthi Sivaswamy |

Related Publications

N. Dixit and J. Sivaswamy - A Novel Approach to Generate Up-sampled Tomographic Images using Combination of Rotated Hexagonal Lattices, Proceedings of the Sixteenth National Conference on Communications (NCC'10), 29-31 Jan. 2010, Chennai, India. [PDF]

Neha Dixit, N.V. Kartheek and Jayanthi Sivaswamy - Synthetic Zooming of Tomographic Images by Combination of Lattices, Proceedings of IEEE Nuclear Science Symposium and Medical Imaging Conference, 25-31 October 2009. [PDF]