UAV-based Visual Remote Sensing for Automated Building Inspection (UVRSABI)

Kushagra Srivastava, Dhruv Patel, Aditya Kumar Jha, Mohit Kumar Jha, Jaskirat Singh, Ravi Kiran Sarvadevabhatla, Harikumar Kandath, Pradeep Kumar Ramancharla, K. Madhava Krishna

[Paper] [Documentation] [GitHub]

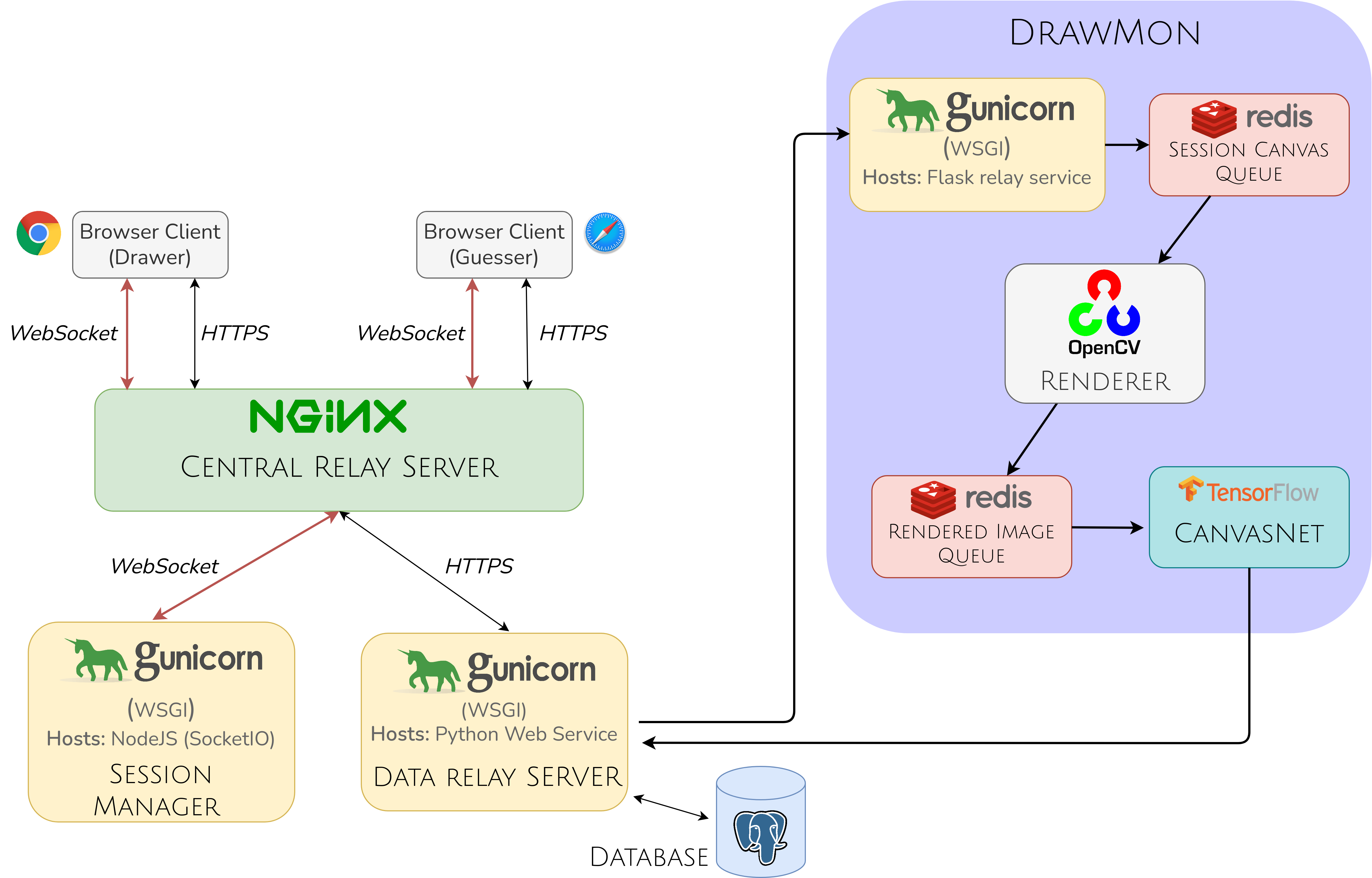

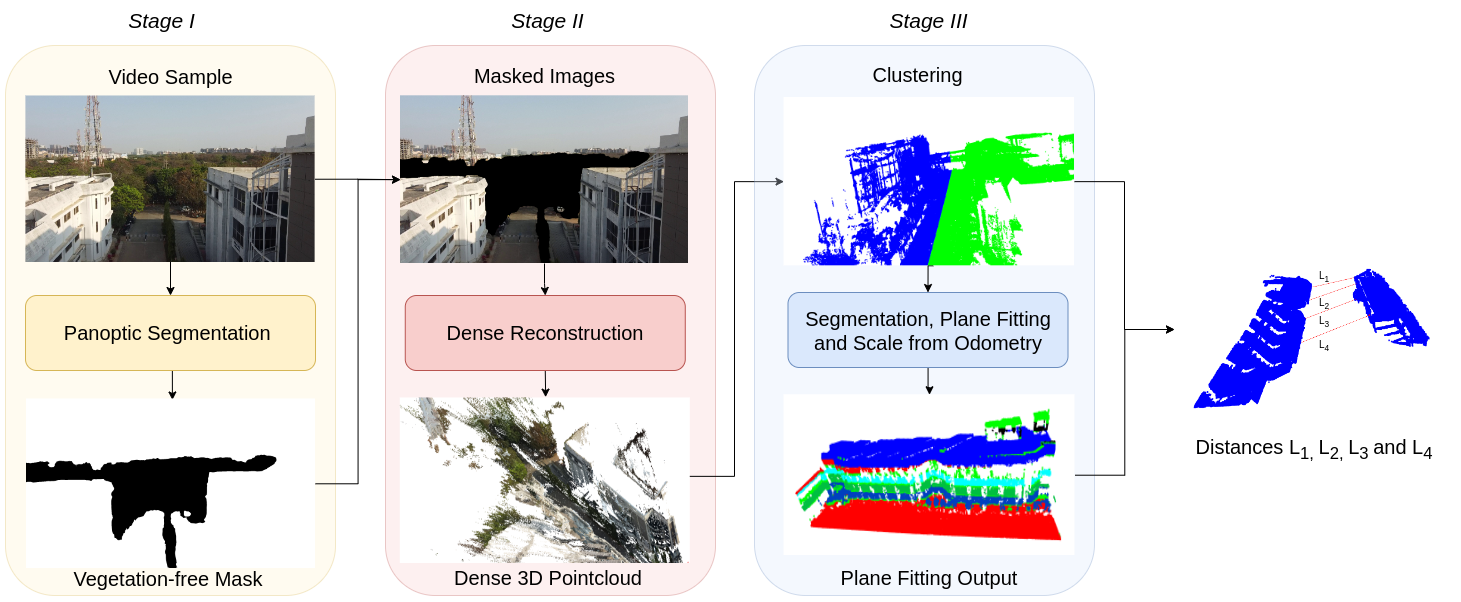

Architecture of the automated building inspection pipeline, which uses aerial images captured by a UAV. The UAV's odometry information is also used to quantify the parameters involved in the inspection.

Overview

- We automate the inspection of buildings through UAV-based image data collection and a post-processing module that infers and quantifies the relevant details, which avoids manual inspection and reduces time and cost.

- We introduced a novel method to estimate the distance between adjacent buildings and structures.

- We developed an architecture that segments rooftops in both orthogonal and non-orthogonal views using a state-of-the-art semantic segmentation model.

- Given the importance of civil inspection of buildings, we introduced a software library that estimates the distances between adjacent buildings, the plan shape of a building, the roof area, the non-structural elements (NSE) on the rooftop, and the roof layout.

Modules

To estimate the seismic structural parameters of buildings, the following modules have been introduced:

- Distance between Adjacent Buildings

- Plan Shape and Roof Area Estimation

- Roof Layout Estimation

Distance between Adjacent Buildings

This module provides the distance between two adjacent buildings. We sample images from the videos captured by the UAV and perform panoptic segmentation using a state-of-the-art deep learning model, which lets us mask out vegetation (such as trees) in the images. The masked images are then fed to a state-of-the-art image-based 3D reconstruction library, which outputs a dense 3D point cloud. We then apply RANSAC to fit planes to the segmented structural point cloud. Finally, points are sampled on these planes to calculate the distance between the adjacent buildings at different locations.
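The plane-fitting and distance steps can be illustrated with a minimal sketch. It is not the project's implementation: it uses a plain single-plane RANSAC in NumPy rather than the piecewise variant, and the function names `fit_plane_ransac` and `distances_to_plane`, the iteration count, and the inlier threshold are all assumptions chosen for illustration.

```python
import numpy as np


def fit_plane_ransac(points, n_iters=200, threshold=0.05, seed=0):
    """Fit a plane normal . x + d = 0 to an (N, 3) point array.

    Hypothetical helper: a basic RANSAC loop followed by a
    least-squares refinement on the inliers.
    """
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)

    for _ in range(n_iters):
        # Three random points define a candidate plane.
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # collinear sample, no unique plane
            continue
        normal = normal / norm
        d = -normal @ sample[0]
        # Points closer than `threshold` to the plane count as inliers.
        inliers = np.abs(points @ normal + d) < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers

    if best_inliers.sum() < 3:
        raise ValueError("RANSAC did not find a valid plane")

    # Refine: the plane normal is the direction of least variance
    # of the inlier points around their centroid.
    inlier_pts = points[best_inliers]
    centroid = inlier_pts.mean(axis=0)
    _, _, vt = np.linalg.svd(inlier_pts - centroid)
    normal = vt[-1]
    d = -normal @ centroid
    return normal, d, best_inliers


def distances_to_plane(samples, normal, d):
    """Perpendicular distance from each sample point to the plane."""
    return np.abs(np.asarray(samples, dtype=float) @ normal + d)
```

Under these assumptions, one would fit a plane to the facing wall of one building and evaluate `distances_to_plane` on points sampled from the facing wall of the other, which gives a distance estimate at each sampled location.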

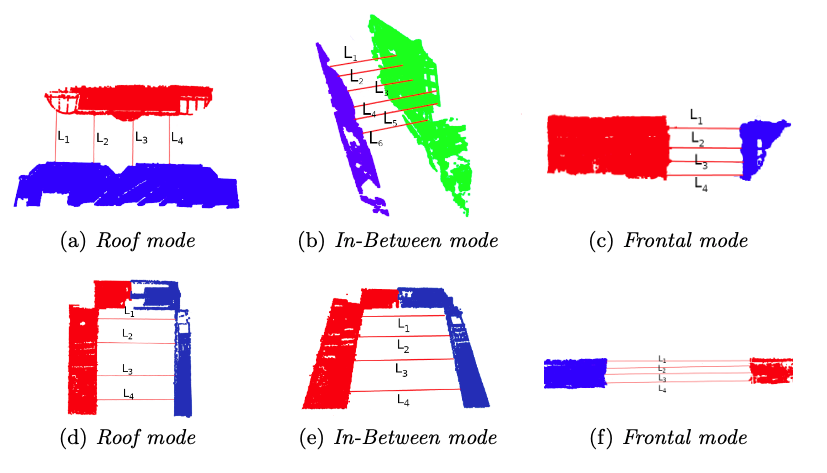

Results: Distance between Adjacent Buildings

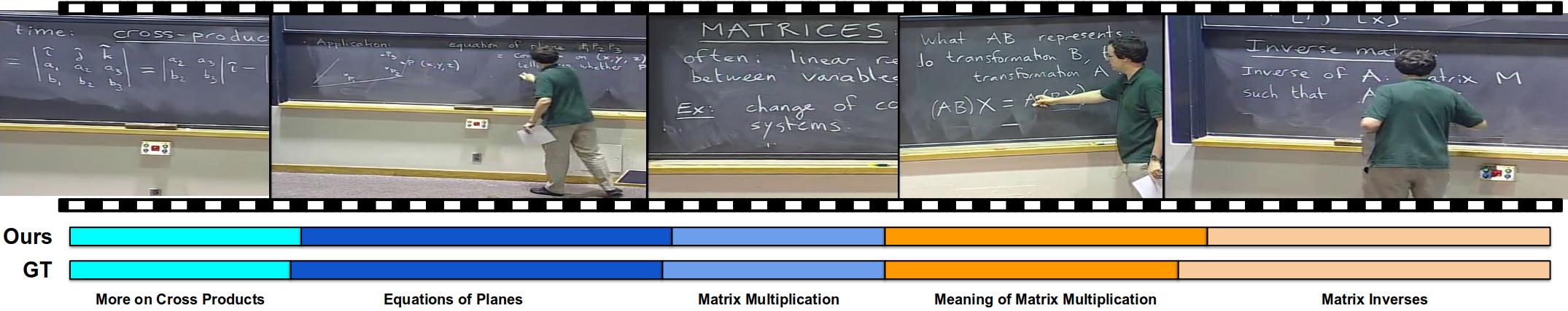

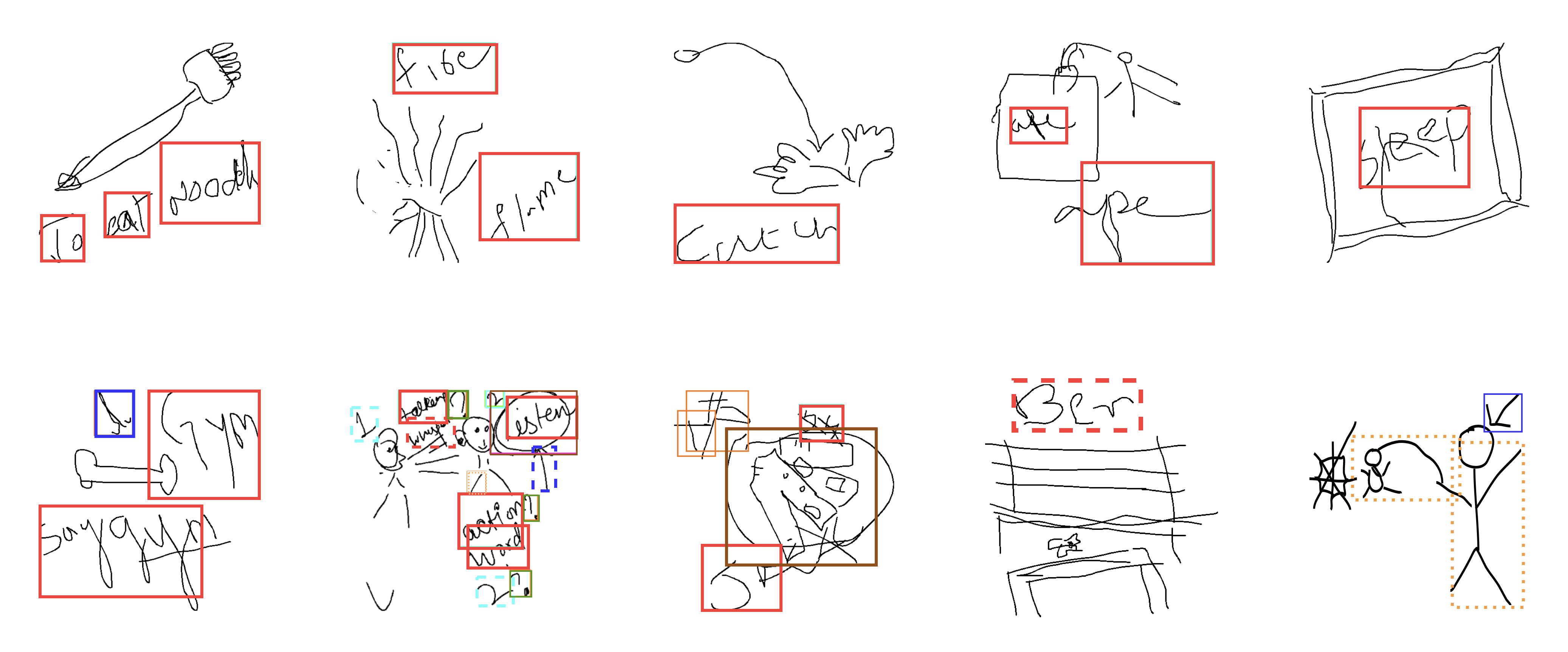

Sub-figures (a) to (c) and (d) to (f) show plane fitting using piecewise RANSAC in different views for the two subject buildings.

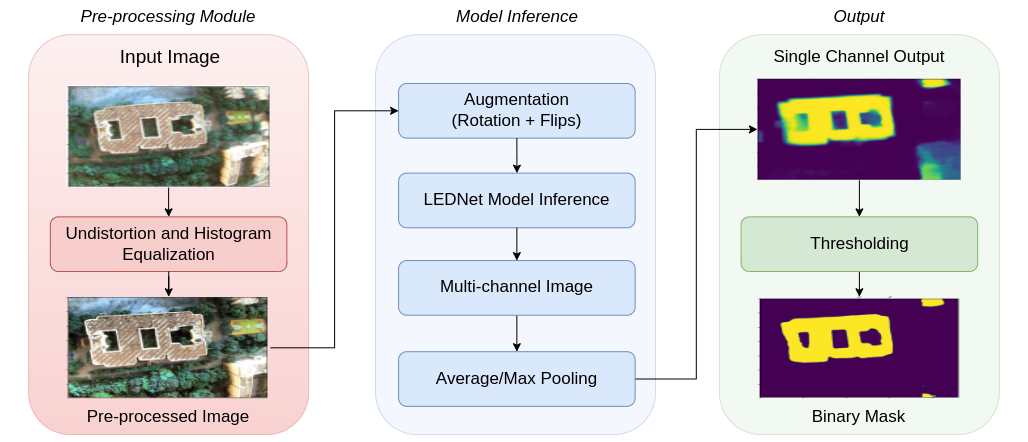

Plan Shape and Roof Area Estimation

This module provides the plan shape and roof area of the building. We segment the roof using a state-of-the-art deep learning model for semantic segmentation. Input images first pass through a pre-processing module that removes distortion from the wide-angle images. Data augmentation is used to improve robustness and performance. The roof area is calculated from the focal length of the camera, the height of the drone above the roof, and the area of the segmented mask in pixels.
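The area calculation can be sketched with a pinhole camera model. This is an illustrative assumption rather than the library's actual code: it presumes an undistorted, downward-looking image of a flat roof and a focal length expressed in pixels. The helper names `focal_length_pixels` and `roof_area_m2` are hypothetical.

```python
import numpy as np


def focal_length_pixels(focal_mm, sensor_width_mm, image_width_px):
    """Convert a focal length in millimetres to pixels (hypothetical helper)."""
    return focal_mm * image_width_px / sensor_width_mm


def roof_area_m2(mask, focal_px, height_m):
    """Estimate roof area from a binary segmentation mask.

    Under the pinhole model, one pixel spans height_m / focal_px metres
    on the roof plane, so each mask pixel covers that length squared.
    """
    metres_per_pixel = height_m / focal_px
    return float(np.count_nonzero(mask)) * metres_per_pixel ** 2
```

For example, a mask with 60,000 roof pixels, a focal length of 1,000 pixels, and a drone 20 m above the roof gives 0.02 m per pixel and an estimated area of 60,000 × 0.02² = 24 m².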

Results: Plan Shape and Roof Area Estimation

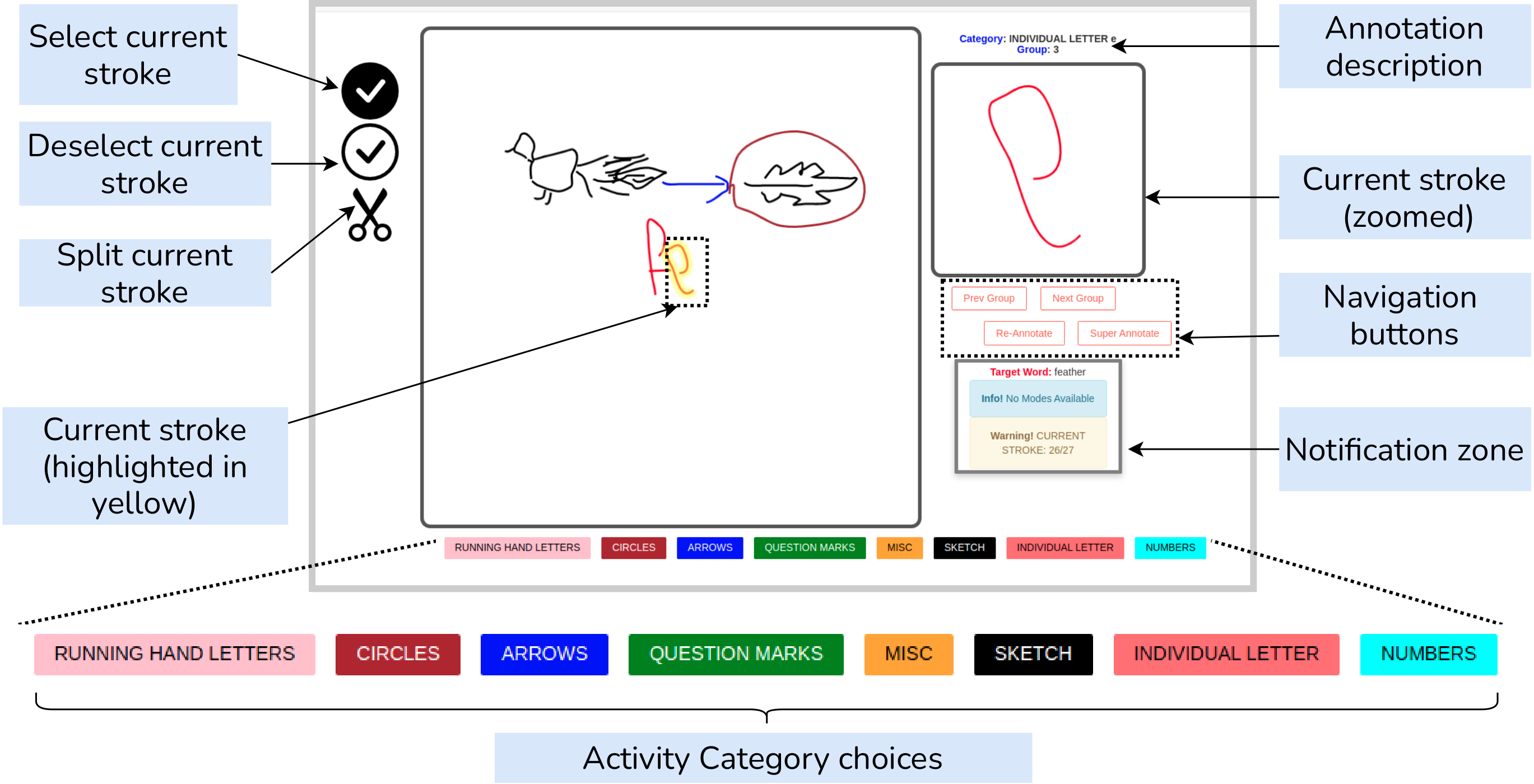

This figure shows the roof segmentation results for four subject buildings.

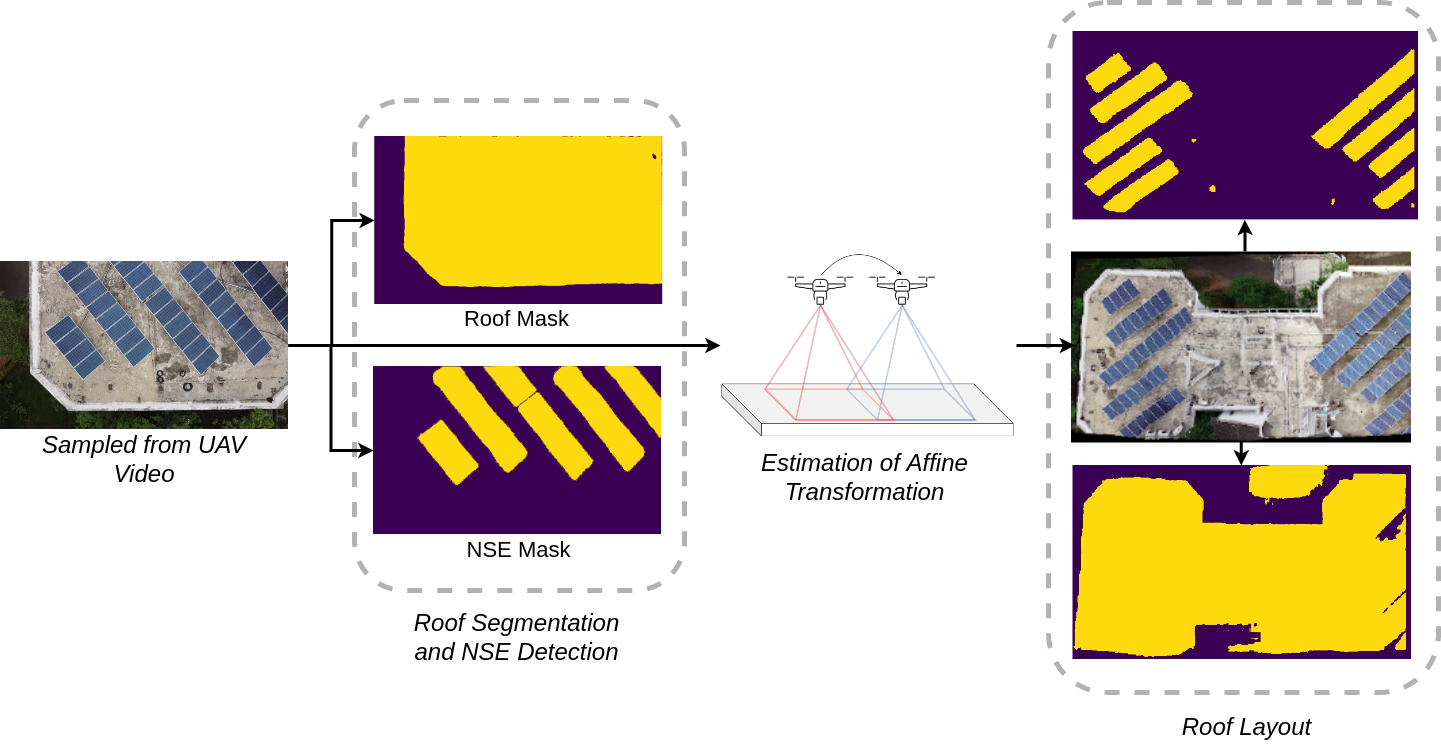

Roof Layout Estimation

This module provides information about the roof layout. Because the whole roof cannot be captured in a single frame, especially for large buildings, we perform large-scale stitching of images of partially visible roofs, followed by NSE detection and roof segmentation.

Results: Roof Layout Estimation

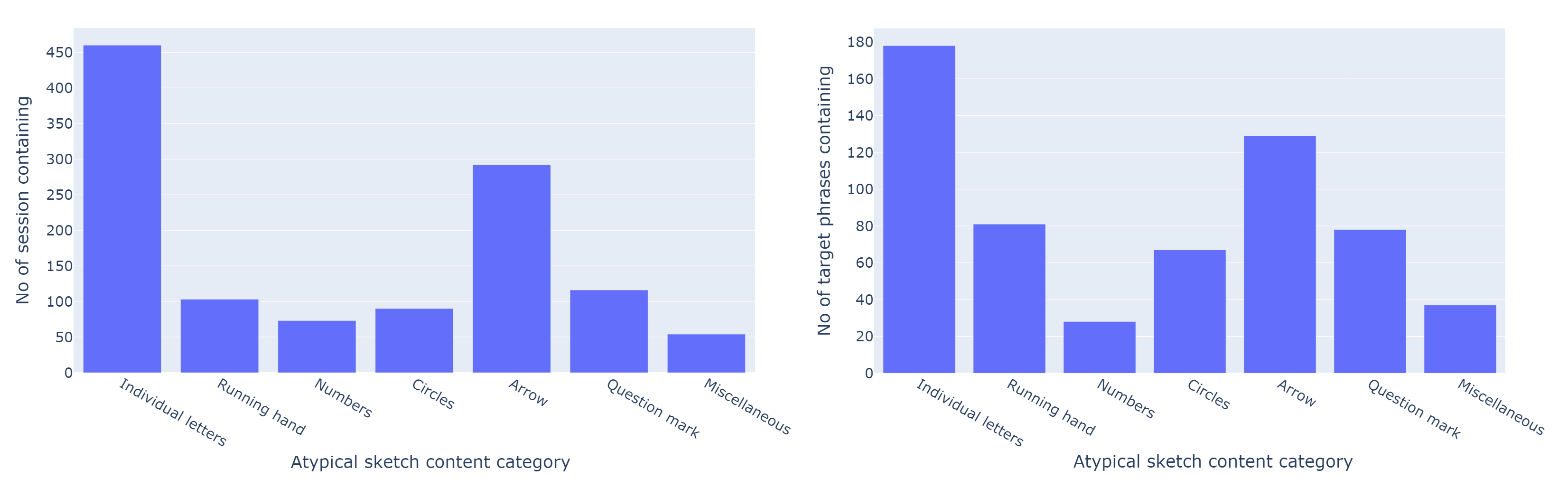

Panels: Stitched Image, Roof Mask, Object Mask

Contact

If you have any questions, please reach out to any of the authors listed above.