Pedestrian Intention and Trajectory Prediction in Unstructured Traffic Using IDD-PeD

[Paper Link] [Code & Dataset]

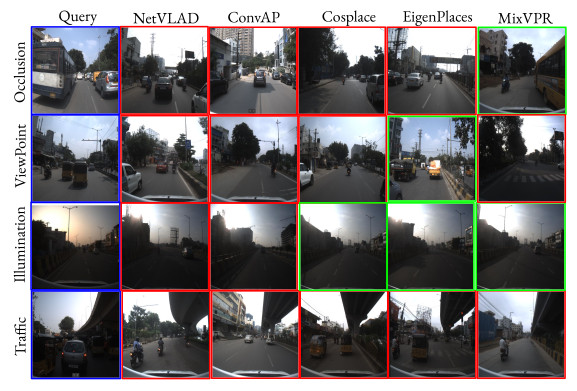

Fig. 1: Illustration of pedestrian intention and trajectory under various challenges in our unstructured-traffic IDD-PeD dataset. The challenges include occlusions, signalized types, vehicle-pedestrian interactions, and illumination changes. Intent labels: C: Crossing (with trajectory), NC: Not Crossing.

Abstract

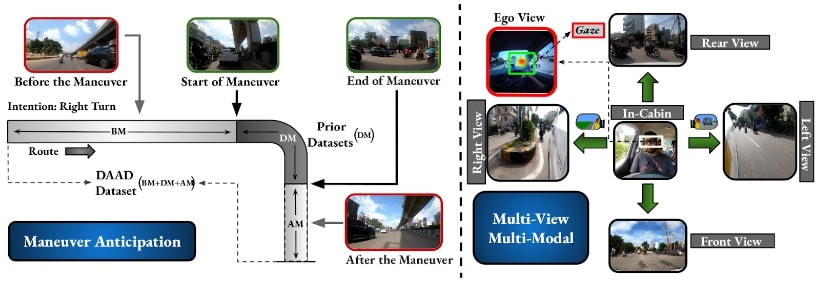

With the rapid advancements in autonomous driving, accurately predicting pedestrian behavior has become essential for ensuring safety in complex and unpredictable traffic conditions. The growing interest in this challenge highlights the need for comprehensive datasets that capture unstructured environments, enabling the development of more robust prediction models to enhance pedestrian safety and vehicle navigation. In this paper, we introduce an Indian driving pedestrian dataset designed to address the complexities of modeling pedestrian behavior in unstructured environments, such as illumination changes, occlusion of pedestrians, unsignalized scene types, and vehicle-pedestrian interactions. The dataset provides comprehensive high-level and detailed low-level annotations focused on pedestrians requiring the ego-vehicle’s attention. Evaluating state-of-the-art intention prediction methods on our dataset shows a significant performance drop of up to 15%, while trajectory prediction methods underperform with an increase of up to 1208 MSE, compared with standard pedestrian datasets. Additionally, we present an exhaustive quantitative and qualitative analysis of intention and trajectory baselines. We believe that our dataset will open new challenges for the pedestrian behavior research community to build robust models.

The IDD-PeD dataset

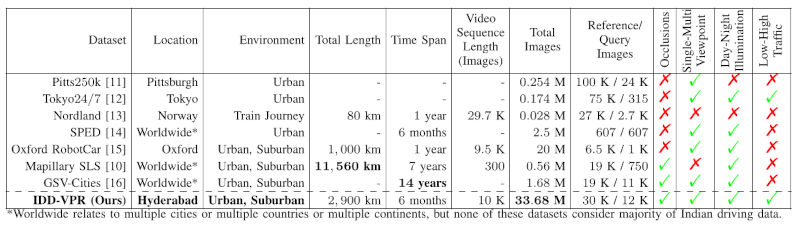

Table 1: Comparison of datasets for pedestrian behavior understanding. On-board diagnostics (OBD) provides ego-vehicle speed, acceleration, and GPS information. Group annotation represents the number of pedestrians moving together; in our dataset, about 1,800 move individually while the rest move in groups of 2 or more. Interaction annotation refers to a label between ego-vehicle and pedestrian, where both influence each other’s movements and decisions. ✓ and ✗ indicate the presence and absence of annotated data, respectively.

Fig. 2: Annotation instances and data statistics of IDD-PeD. Distribution of (a) frame-level ego-vehicle speeds, (b) pedestrians at signalized types such as crosswalk (C), signal (S), crosswalk and signal (CS), and absence of crosswalk and signal (NA), (c) pedestrians with track lengths at day and night, (d) frame-level annotations of different behaviors and traffic objects, and (e) pedestrian occlusions.

Results

Pedestrian Intention Prediction (PIP) Baselines

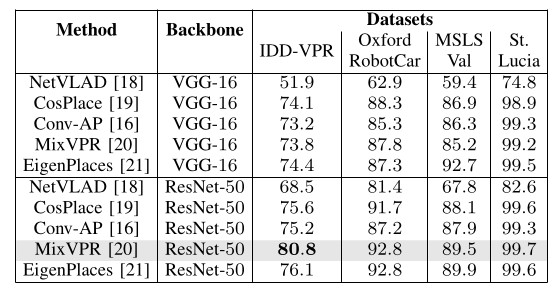

Table 2: Evaluation of PIP baselines on JAAD, PIE, and our datasets.

Pedestrian Trajectory Prediction (PTP) Baselines

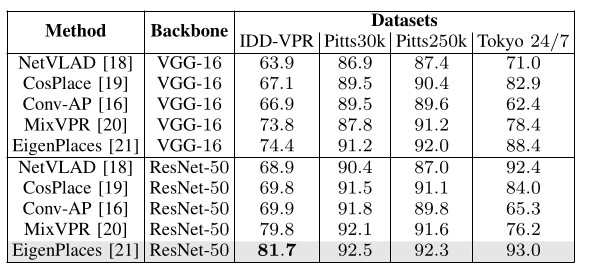

Table 3: Evaluation of PTP baselines on JAAD, PIE and our datasets. We report MSE results at 1.5s. “-” indicates no results as PIETraj needs explicit ego-vehicle speeds.
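To make the metric concrete, the following is a minimal sketch of how an MSE at a 1.5 s horizon could be computed for a single predicted bounding-box track. It is not the authors' evaluation code. The function name `bbox_mse`, the `(x1, y1, x2, y2)` pixel box format, the 30 fps frame rate, and the choice to average squared errors over all coordinates and all frames up to the horizon are assumptions made for illustration.

```python
from typing import List, Sequence

# Assumed box format: (x1, y1, x2, y2) in pixels.
Box = Sequence[float]


def bbox_mse(pred: List[Box], truth: List[Box],
             fps: float = 30.0, horizon_s: float = 1.5) -> float:
    """Mean squared error over all box coordinates up to the horizon.

    The 30 fps default and the averaging convention are assumptions,
    not values taken from the paper.
    """
    steps = int(round(fps * horizon_s))  # 45 frames at 30 fps
    if steps <= 0:
        raise ValueError("horizon must cover at least one frame")
    if len(pred) < steps or len(truth) < steps:
        raise ValueError("tracks are shorter than the requested horizon")

    total = 0.0
    count = 0
    for p, t in zip(pred[:steps], truth[:steps]):
        if len(p) != len(t):
            raise ValueError("predicted and true boxes differ in size")
        for a, b in zip(p, t):
            total += (a - b) ** 2
            count += 1
    return total / count


# Toy example: the prediction is off by 2 px in x1 on every frame.
truth = [(0.0, 0.0, 10.0, 10.0)] * 45
pred = [(2.0, 0.0, 10.0, 10.0)] * 45
print(bbox_mse(pred, truth))  # 1.0
```

In the toy example, each frame contributes a squared error of 4 on one of four coordinates, so the average is 1.0. Because the error is squared and measured in pixels, a dataset-level MSE grows quickly when predictions drift far from the ground truth.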

Fig.: Qualitative evaluation of the best and worst PTP models on our dataset. Red: SGNet, Blue: PIETraj, Green: Ground truth, White: Observation period. To better illustrate and highlight key factors in PIP and PTP methods, a qualitative analysis will be provided in the supplementary video.

Citation

@inproceedings{idd2025ped,
  author = {Ruthvik Bokkasam and Shankar Gangisetty and A. H. Abdul Hafez and C. V. Jawahar},
  title = {Pedestrian Intention and Trajectory Prediction in Unstructured Traffic Using IDD-PeD},
  booktitle = {ICRA},
  publisher = {IEEE},
  year = {2025},
}

Acknowledgements

This work is supported by iHub-Data and Mobility at IIIT Hyderabad.