Scene Text Recognition in Indian Scripts

Overview

This work addresses the problem of scene text recognition in Indian scripts. As a first step, we benchmark scene text recognition for three Indian scripts (Devanagari, Telugu and Malayalam) using a CRNN model. To this end, we release a new dataset for the three scripts, comprising nearly 1000 images in the wild, which is suitable for scene text detection and recognition tasks. We train the recognition model on synthetic word images, which are also made publicly available.

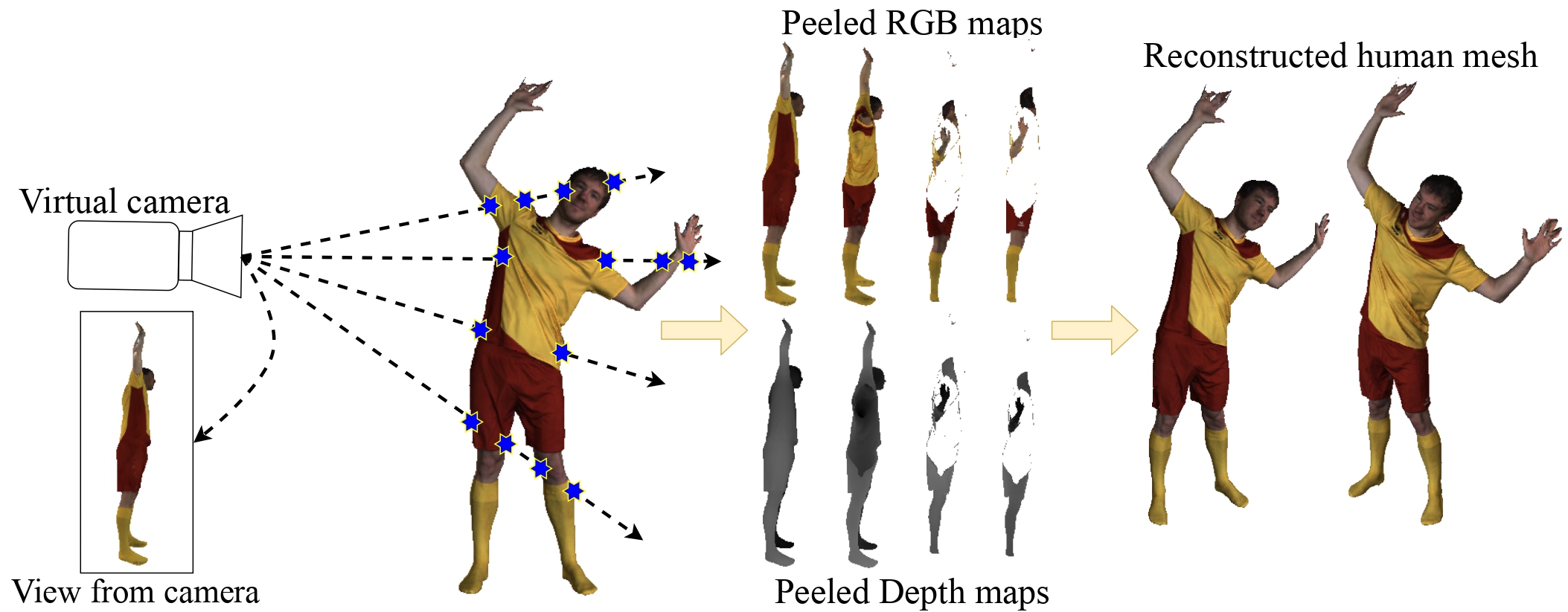

Natural scene images containing text in Indic scripts: Telugu, Malayalam and Devanagari, in clockwise order.

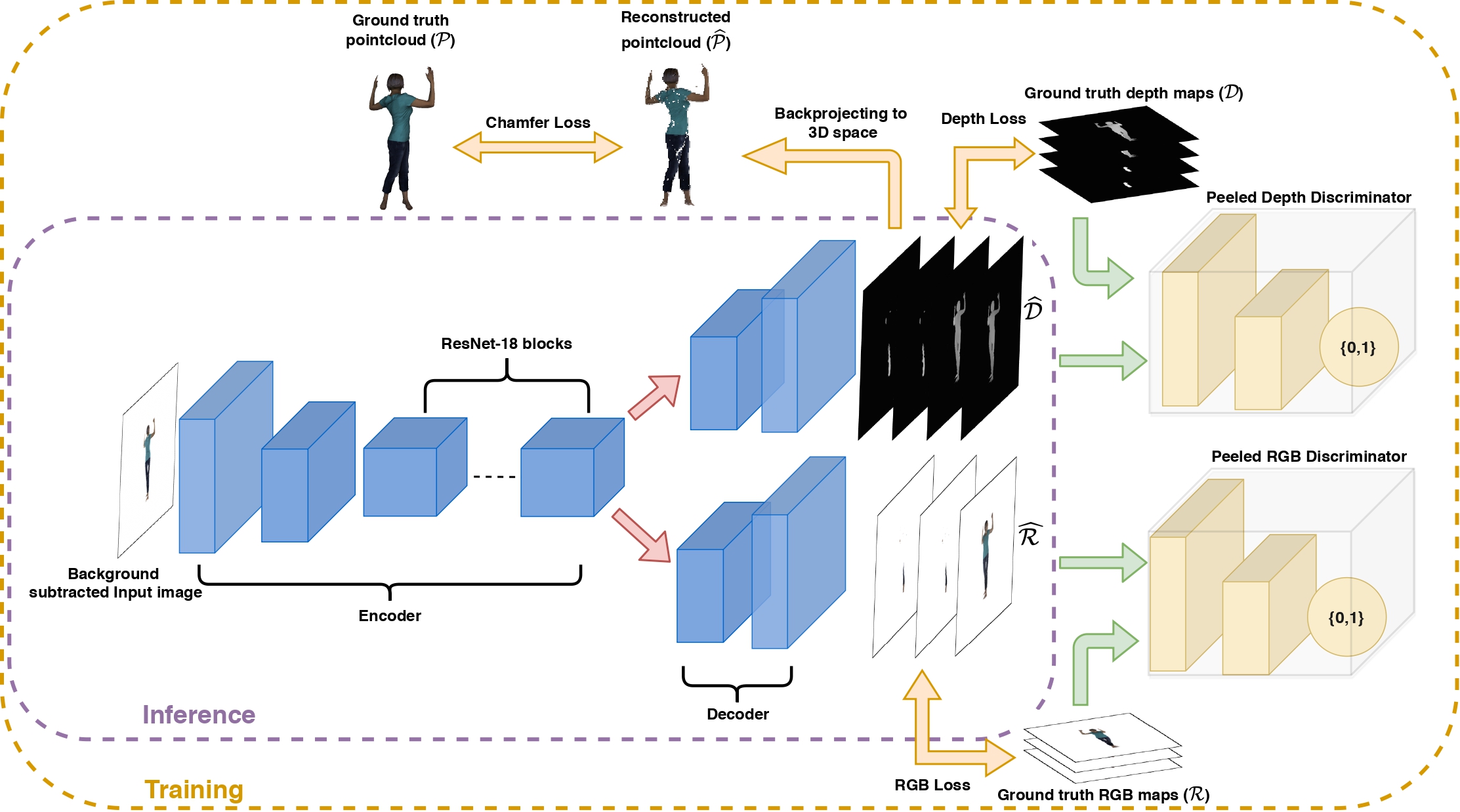

Sample images from the synthetic word image dataset.

Related Publications

If you use the real scene text dataset for the three Indic scripts or the synthetic dataset of font-rendered word images, please consider citing our paper, Benchmarking Scene Text Recognition in Devanagari, Telugu and Malayalam:

@INPROCEEDINGS{IL-SCENETEXT_MINESH,

author={M. {Mathew} and M. {Jain} and C. V. {Jawahar}},

booktitle={ICDAR MOCR Workshop},

title={Benchmarking Scene Text Recognition in Devanagari, Telugu and Malayalam},

year={2017}}

Dataset Download

IIIT-ILST

IIIT-ILST contains nearly 1000 real images per script, annotated with scene text bounding boxes and transcriptions.

Terms and Conditions: All images provided as part of the IIIT-ILST dataset have been collected from freely accessible internet sites. As such, they are copyrighted by their respective authors. The images are hosted on this site, which is physically located in India. Specifically, the images are provided for the sole purpose of research in developing new models for scene text recognition. You are not allowed to redistribute any images in this dataset. By downloading any of the files below, you explicitly state that your final purpose is to perform research under the conditions mentioned above, and you agree to comply with these terms and conditions.

Download

IIIT-ILST DATASET

Synthetic Word Images Dataset

We rendered millions of word images synthetically to train scene text recognition models for the three scripts.

Errata

The synthetic image dataset provided below differs from the one used in our ICDAR 2017 MOCR workshop paper. The original synthetic dataset contained some images with junk characters, caused by certain fonts rendering some Unicode code points incorrectly. We therefore regenerated the synthetic word images; the set provided below is this new version.
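The errata does not describe how the regenerated set was cleaned. As a purely illustrative, hypothetical sketch (the names `SCRIPT_BLOCKS`, `is_in_script` and `filter_vocabulary` are ours, not from the released code), one inexpensive precaution when building your own synthetic data is to discard vocabulary words containing code points outside the target script's Unicode block. This only checks the input text. Junk characters caused by a font rendering a valid code point incorrectly, the failure mode described in the errata, would still need a font-coverage or visual check.

```python
# Hypothetical text-level sanity check: keep only words whose code points
# fall inside the expected script's Unicode block. This is NOT the authors'
# regeneration procedure, and it cannot catch glyph-level rendering errors.

# Unicode block ranges (inclusive) for the three scripts.
SCRIPT_BLOCKS = {
    "devanagari": (0x0900, 0x097F),
    "telugu": (0x0C00, 0x0C7F),
    "malayalam": (0x0D00, 0x0D7F),
}

# Zero-width non-joiner / joiner can legitimately appear in Indic text
# to control conjunct formation, so they are allowed for every script.
JOINERS = {"\u200c", "\u200d"}


def is_in_script(word: str, script: str) -> bool:
    """Return True if `word` is non-empty and every character is in `script`'s block or is a joiner."""
    lo, hi = SCRIPT_BLOCKS[script]
    return bool(word) and all(lo <= ord(ch) <= hi or ch in JOINERS for ch in word)


def filter_vocabulary(words: list[str], script: str) -> list[str]:
    """Drop words containing characters outside the target script before rendering."""
    return [w for w in words if is_in_script(w, script)]


if __name__ == "__main__":
    # One Devanagari word, one Latin word and an empty string.
    words = ["\u0928\u092e\u0938\u094d\u0924\u0947", "abc", ""]
    print(filter_vocabulary(words, "devanagari"))
```

Restricting each word to a single block is a deliberately strict design choice. It also rejects words mixing scripts or containing digits and Latin punctuation, so you may want to widen the allowed set for your own vocabulary.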

Download

Synthetic Word Images (Part I) | Synthetic Word Images (Part II)

License

Synthetic Scene Text Word Images Rendering - Code

The code we used to render synthetic word images for Indian languages is available here. The repository also includes a collection of Unicode fonts for many Indian scripts, which you may find useful if you want to generate synthetic word images in Indian scripts and Arabic.