Robotic Vision

Introduction

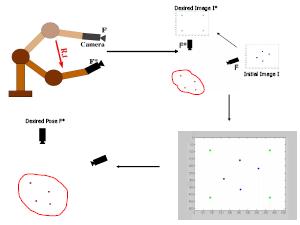

Our research is primarily concerned with the geometric analysis of scenes captured by vision sensors, and with controlling a robot so that it performs set tasks using the resulting scene interpretation. The former problem is known in the literature as 'Structure from Motion', while the latter is often referred to as the 'Visual Servoing' problem.

Visual servoing uses the information provided by a vision sensor to control the movements of a dynamic system. This research topic lies at the intersection of Computer Vision and Robotics. Both fields have been the subject of productive research for many years and are of particular interest because of their broad scientific and application spectrum. More specifically, we aim to improve visual servoing algorithms in both performance and applicability, so as to widen their use.
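As an illustration of how image measurements can drive a robot, the sketch below implements the classical textbook image-based control law v = -λ L⁺ (s - s*) for point features. It is not the algorithm developed by the group; the function names, the gain value, and the assumption that point depths are known are all illustrative choices.

```python
import numpy as np

def interaction_matrix(x, y, Z):
    """2x6 interaction matrix of a normalized image point (x, y) at depth Z."""
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])

def ibvs_velocity(points, targets, depths, gain=0.5):
    """Camera velocity (vx, vy, vz, wx, wy, wz) from v = -gain * pinv(L) @ e.

    points, targets: (N, 2) current and desired normalized image coordinates.
    depths: N point depths (assumed known here; in practice they are estimated).
    """
    points = np.asarray(points, dtype=float)
    targets = np.asarray(targets, dtype=float)
    error = (points - targets).reshape(-1)  # stacked feature error s - s*
    L = np.vstack([
        interaction_matrix(x, y, Z) for (x, y), Z in zip(points, depths)
    ])
    return -gain * np.linalg.pinv(L) @ error

# Four corner points that appear shifted to the right of their desired positions.
desired = np.array([[-0.1, -0.1], [0.1, -0.1], [0.1, 0.1], [-0.1, 0.1]])
current = desired + [0.02, 0.0]
velocity = ibvs_velocity(current, desired, depths=[1.0] * 4)
```

Applying the returned velocity repeatedly, and re-measuring the features after each step, drives the feature error towards zero. Errors in the depth estimates or in feature correspondence perturb L and the error vector, which is where the robustness issues discussed on this page arise.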

Performance Enhancement of Visual Servoing Techniques

Visual servoing is an area of robotic vision that is increasingly being applied to real-world problems. Such applications, however, call for an in-depth analysis of robustness and performance issues in visual servoing tasks. Typically, robustness issues involve handling errors in feature correspondence, pose estimation, and depth estimation. Performance issues, on the other hand, involve generating consistent control input in spite of noisy or varying parameters. We have developed algorithms that incorporate multiple cues in order to achieve consistent performance in the presence of noisy features.

Visual Servoing in Unconventional Environments

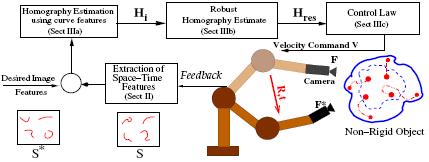

Most robotic vision algorithms are designed for robots operating in structured environments, where the world is assumed to be rigid and planar. These algorithms fail to provide optimum behavior when the robot has to be controlled with respect to active, non-rigid, non-planar targets. We have developed a new framework for visual servoing that accomplishes the robot-positioning task even in such unconventional environments. We introduced a novel space-time representation scheme for modeling the deformations of a non-rigid object, and proposed a new vision-based approach that exploits the two-view geometry induced by the space-time features to perform the servoing task.

Visual Tracking by Integration of Multiple Cues

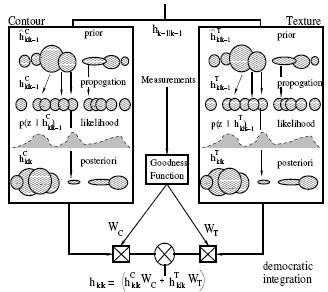

Object tracking is an important task in robotic vision, particularly for visual servoing. The tracking problem has been modeled in the robotics literature as a motion estimation problem: 3D model-based tracking is treated as a pose estimation problem, and 2D planar object tracking as a homography estimation problem. Two major sources of visual features are used in marker-less visual tracking: edges and texture. Each has advantages and disadvantages that make it suitable in some scenarios and unsuitable in others. We are designing a robust integration framework that uses both edge and texture features. This framework probabilistically integrates the visual information collected from contours and texture, based on probabilistic goodness weights for each type of feature.
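The weighting idea can be sketched as follows. The page does not specify how the goodness weights are computed, so this example assumes a hypothetical measure (the inverse mean squared residual of each cue) and fuses two estimates of the same motion-parameter vector by a normalized weighted average. The names `goodness_weights` and `fuse_estimates` are illustrative only.

```python
import numpy as np

def goodness_weights(residuals_edge, residuals_texture, eps=1e-9):
    """Hypothetical goodness: inverse mean squared residual per cue,
    normalized so that the two weights sum to 1."""
    g_edge = 1.0 / (np.mean(np.square(residuals_edge)) + eps)
    g_texture = 1.0 / (np.mean(np.square(residuals_texture)) + eps)
    total = g_edge + g_texture
    return g_edge / total, g_texture / total

def fuse_estimates(est_edge, est_texture, w_edge, w_texture):
    """Weighted average of two estimates of the same motion parameters."""
    est_edge = np.asarray(est_edge, dtype=float)
    est_texture = np.asarray(est_texture, dtype=float)
    return w_edge * est_edge + w_texture * est_texture

# The edge cue fits well (small residuals); the texture cue fits poorly.
w_edge, w_texture = goodness_weights([0.1, 0.1], [0.3, 0.3])
motion = fuse_estimates([2.0, 1.0], [2.6, 0.4], w_edge, w_texture)
```

With these residuals the edge estimate dominates the fused result, so a cue that degrades (for example, texture under motion blur) contributes less to the final motion estimate.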

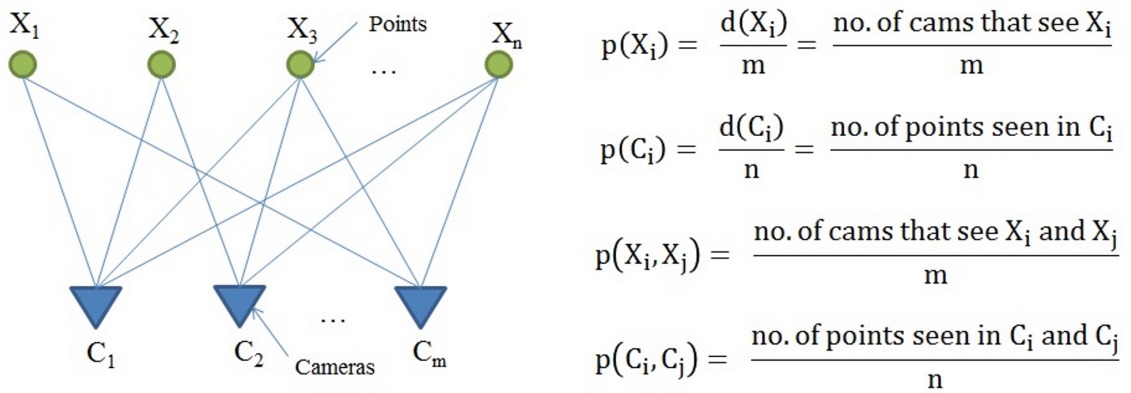

Probabilistic Robotic Vision

We are also investigating the utility of applying the rich literature of Probabilistic Robotics to Computer Vision problems. Computer Vision problems often involve processing noisy data. Probabilistic approaches are therefore appropriate, as they allow uncertainty to be modeled and propagated through the solution process.

Related Publications

- D. Santosh and C.V. Jawahar - Visual Servoing in Non-Rigid Environment: A Space-Time Approach, Proc. of IEEE International Conference on Robotics and Automation (ICRA'07), Roma, Italy, 2007.

- A.H. Abdul Hafez and C.V. Jawahar - Probabilistic Integration of 2D and 3D Cues for Visual Servoing, 9th International Conference on Control, Automation, Robotics and Vision (ICARCV'06), Singapore, 5-8 December, 2006.

- A.H. Abdul Hafez and C.V. Jawahar - Integration Framework for Improved Visual Servoing in Image and Cartesian Spaces, International Conference on Intelligent Robots and Systems (IROS'06), Beijing, China, October 9-15, 2006.

- D. Santosh Kumar and C.V. Jawahar - Visual Servoing in Presence of Non-Rigid Motion, Proc. 18th IEEE International Conference on Pattern Recognition (ICPR'06), Hong Kong, Aug 2006.

- A.H. Abdul Hafez and C.V. Jawahar - Target Model Estimation Using Particle Filters for Visual Servoing, Proc. 18th IEEE International Conference on Pattern Recognition (ICPR'06), Hong Kong, Aug 2006.

- Abdul Hafez, Piyush Janawadkar and C.V. Jawahar - Novel view prediction for improved visual servoing, National Conference on Communications (NCC) 2006, New Delhi.

- Abdul Hafez and C.V. Jawahar - Minimizing a Class of Hybrid Error Functions for Optimal Pose Alignment, International Conference on Control, Robotics, Automation and Vision (ICARCV) 2006, Singapore.

- D. Santosh Kumar and C.V. Jawahar - Robust Homography-based Control for Camera Positioning in Piecewise Planar Environments, Indian Conference on Computer Vision, Graphics and Image Processing (ICVGIP) 2006, Madurai.

- Abdul Hafez, Visesh Chari and C.V. Jawahar - Combine Texture and Edges based on Goodness Weights for Planar Object Tracking, International Conference on Robotics and Automation (ICRA) 2007, Rome.

- Abdul Hafez and C.V. Jawahar - A Stable Hybrid Visual Servoing Algorithm, International Conference on Robotics and Automation (ICRA) 2007, Rome.

Associated People

- Abdul Hafez

- Visesh

- Supreeth

- Anil

- Santosh

- Dr. C V Jawahar

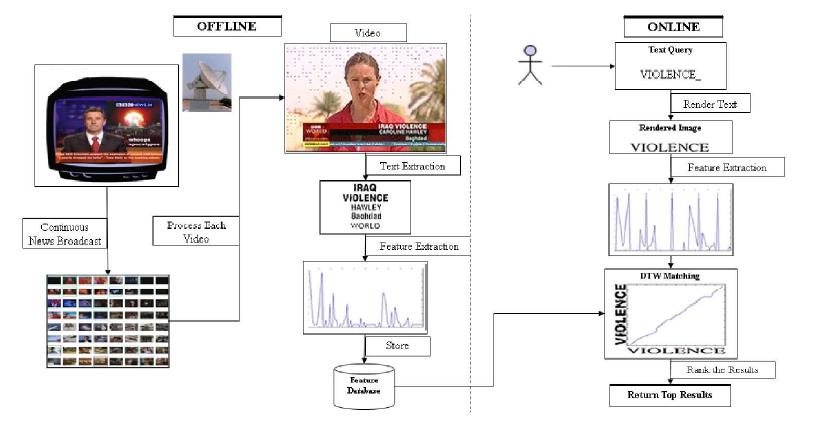

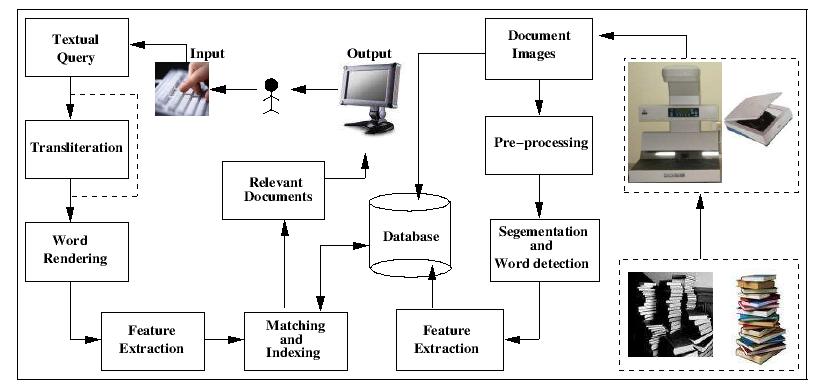

Matching words in image space must handle the many variations in font size, font type, style, noise, and degradation that are present in document images. The features and the matching technique were designed to handle this variety. Further, morphological word variations, such as prefixes and suffixes of a given stem word, are identified using a partial matching scheme. We use a Dynamic Time Warping (DTW) based matching algorithm, which lets us efficiently exploit local features extracted by scanning vertical strips of the word images.
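A minimal sketch of DTW matching over vertical-strip features is given below. The feature used here (the fraction of ink pixels in each pixel column) is an assumption chosen for brevity; the features actually used are not listed on this page, and the partial matching scheme for prefixes and suffixes is not covered.

```python
import numpy as np

def column_features(word_image):
    """One feature vector per vertical strip (here, one pixel column).

    word_image: 2D array with ink pixels as 1 and background as 0.
    Returns an array of shape (width, 1) holding the ink fraction per column.
    """
    img = np.asarray(word_image, dtype=float)
    return img.mean(axis=0).reshape(-1, 1)

def dtw_distance(a, b):
    """Length-normalized DTW cost between two feature sequences."""
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = np.linalg.norm(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed warping moves.
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return D[n, m] / (n + m)

word = np.array([[0, 1, 0],
                 [0, 1, 1]])
wider = np.repeat(word, 2, axis=1)  # same word, horizontally stretched
other = np.array([[1, 0, 1],
                  [1, 0, 0]])

same_word_cost = dtw_distance(column_features(word), column_features(wider))
other_word_cost = dtw_distance(column_features(word), column_features(other))
```

Because DTW can align one column with several columns of the other sequence, the horizontally stretched copy matches at zero cost, while the different word yields a positive cost. This tolerance to stretching is what makes the approach suited to variations in font size and type.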

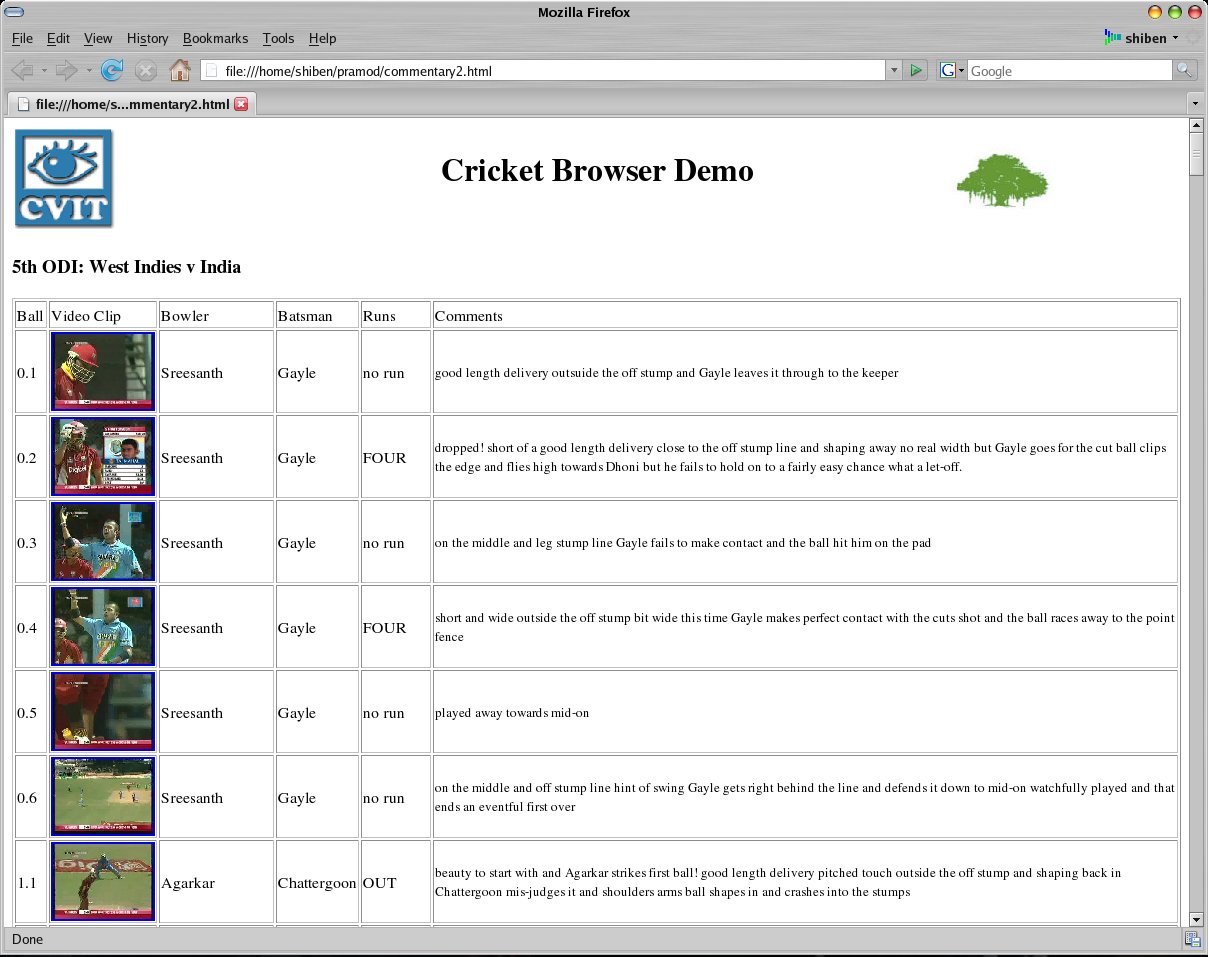

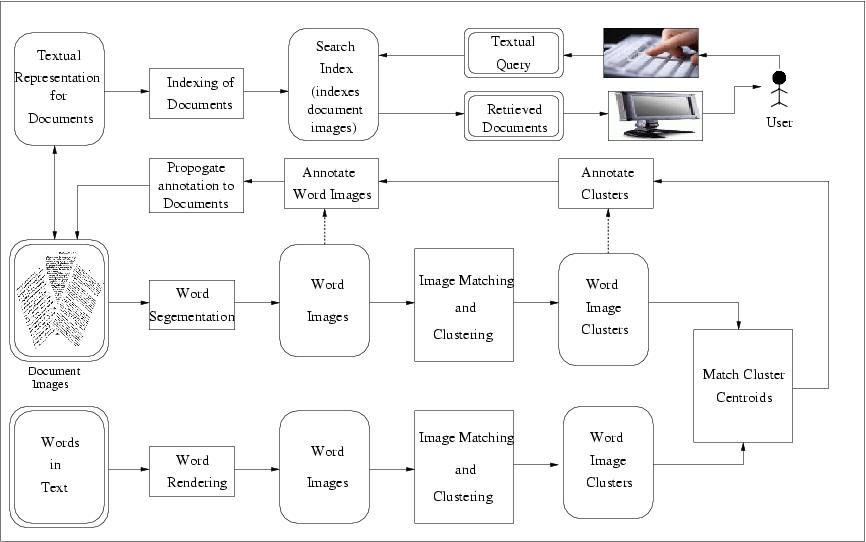

Online matching of a query word against a search index is computationally intensive and thus time-consuming. This can be avoided by annotating the word images. Annotation assigns a corresponding text word to each word image, so that further processing, such as indexing and retrieval, can take place in the text domain. Search and retrieval in the text domain allow us to build search systems with interactive response times.

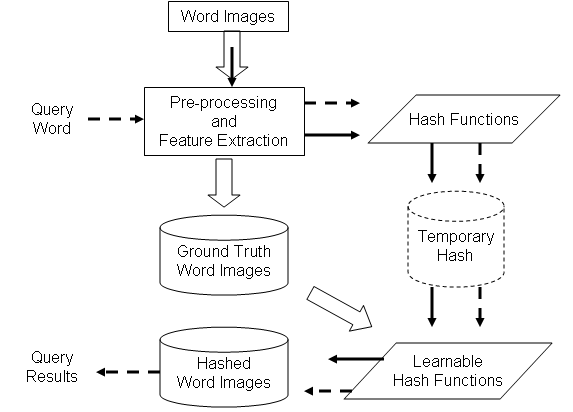

In databases, hashing is considered an efficient alternative to indexing (and clustering). In hashing, a single function value is computed for each word image. Words that have the same (or similar) hash values are placed in the same bin, so words with similar hash values are effectively clustered together. With such a scheme, indexing, search, and retrieval of documents are linear in time complexity, which is a significant prospect.
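The binning step can be sketched as follows. The hash function below is purely hypothetical (the page does not describe the one used): it resamples the column ink profile of a word image to a fixed number of strips and thresholds each strip against the mean to obtain a bit string.

```python
from collections import defaultdict

import numpy as np

def word_hash(word_image, n_bits=8):
    """Hypothetical hash: thresholded ink profile resampled to n_bits strips."""
    img = np.asarray(word_image, dtype=float)
    profile = img.mean(axis=0)  # ink fraction per pixel column
    positions = np.linspace(0, len(profile) - 1, n_bits)
    resampled = np.interp(positions, np.arange(len(profile)), profile)
    bits = resampled > resampled.mean()
    return int("".join("1" if b else "0" for b in bits), 2)

def build_bins(word_images):
    """Group word-image indices by hash value."""
    bins = defaultdict(list)
    for index, image in enumerate(word_images):
        bins[word_hash(image)].append(index)
    return bins

word_a = np.tile([1, 1, 0, 0, 1, 1, 0, 0], (4, 1))
word_b = np.tile([0, 0, 1, 1, 0, 0, 1, 1], (4, 1))
bins = build_bins([word_a, word_a.copy(), word_b])
matches = bins[word_hash(word_a)]  # indices of images sharing word_a's bin
```

A query is answered by hashing the query image and reading the corresponding bin, so the query is compared only against the words in that bin rather than against the whole collection.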

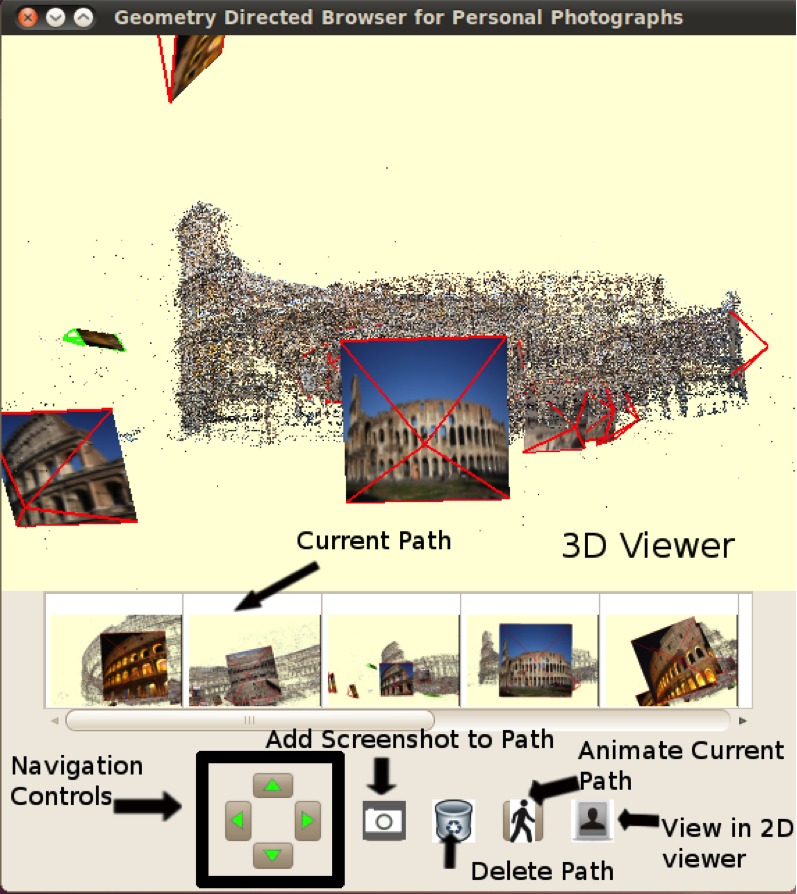

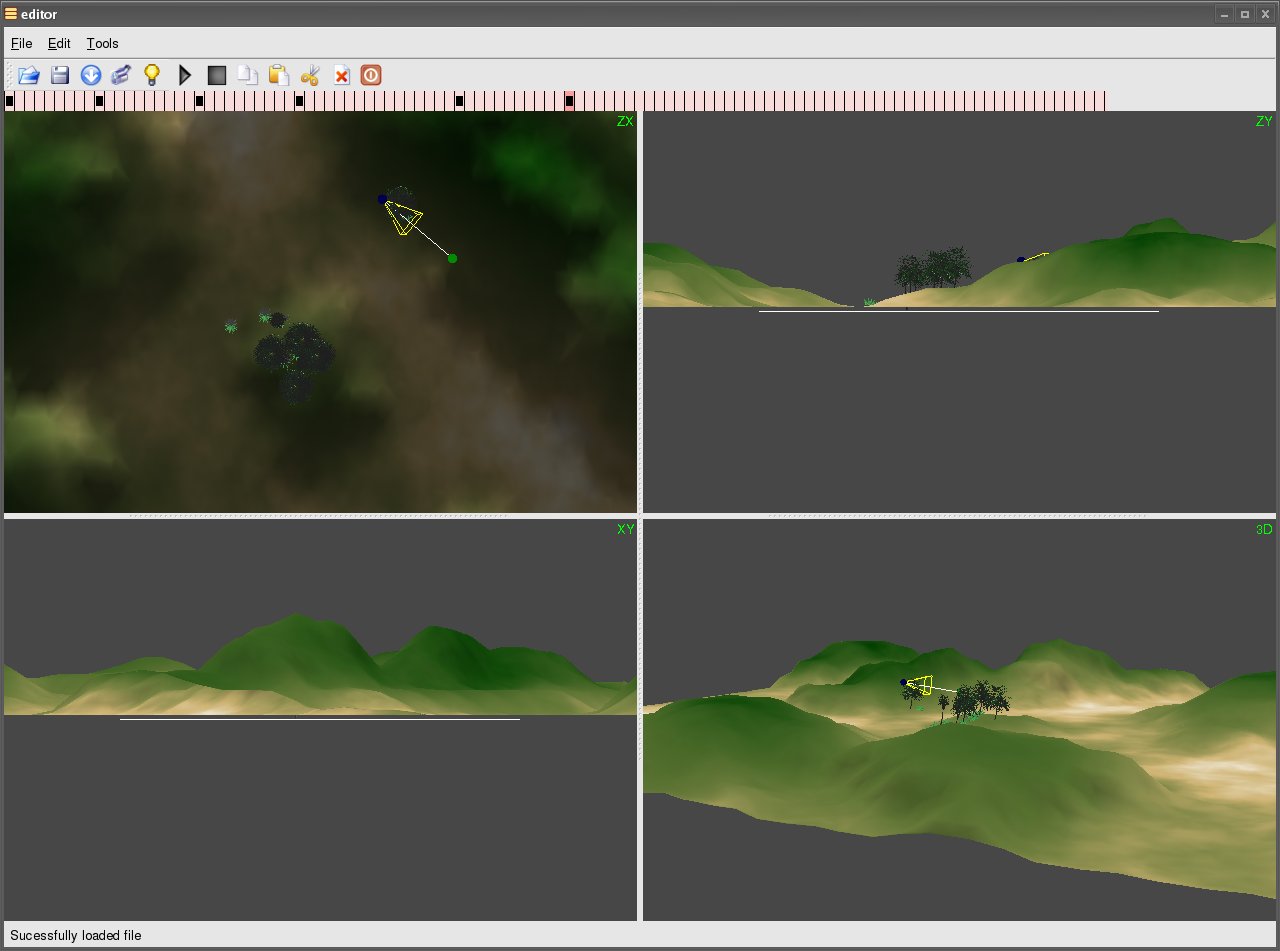

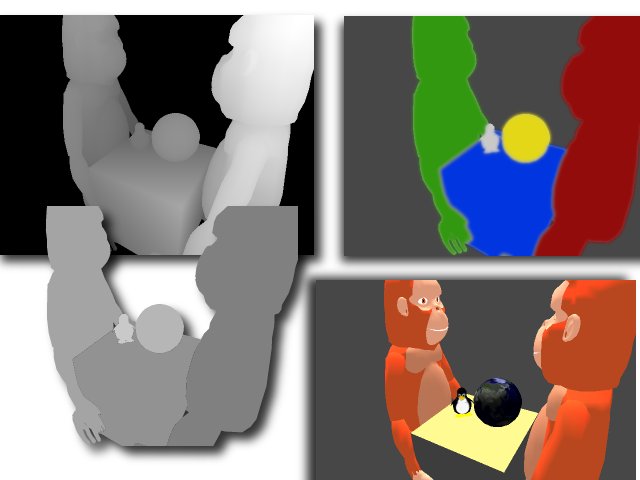

The basic objective of DGTk is to make it easy to generate the complex representations required by computer vision (CV) and image-based rendering (IBR) algorithms. Our tool provides a simple, intuitive interface for generating the following representations of a scene created in it:
