Security and Privacy of Visual Data

With the rapid development and growing acceptance of computer vision based systems in daily life, securing visual data has become imperative. Security issues in computer vision primarily originate from the storage, distribution and processing of personal data, whereas privacy concerns arise from the tracking of a user's activity. The current practice for securing an online protocol is to apply a cryptographic layer on top of the existing processing modules, thus securing the data against unauthorised third-party access. However, this is often not enough to ensure the complete security of the user's privileged information. In this work we address specific security and privacy concerns of visual data. We propose application-specific, computationally efficient and provably secure computer vision algorithms for the encrypted domain. More specifically, we address the following issues:

- Efficacy: Security should not come at the cost of accuracy.

- Efficiency: Encryption and decryption are computationally expensive. Secure algorithms should remain practical.

- Domain Knowledge: Domain-specific algorithms will be more efficient than generic solutions such as secure multiparty computation (SMC).

- Security: Algorithms need to be provably secure and meet future requirements.

Resources: POSTER PRESENTATION

Private Content Based Image Retrieval

For content-level access, a database very often needs the query in the form of a sample image. However, the image may contain private information, and hence the user may not wish to reveal it to the database. Private Content Based Image Retrieval (PCBIR) deals with retrieving similar images from an image database without revealing the content of the query image, not even to the database server. We propose algorithms for PCBIR when the database is indexed using a hierarchical index structure or a hash-based indexing scheme. Experiments are conducted on real datasets with popular features and state-of-the-art data structures. We observe that the specialty and subjectivity of image retrieval (unlike SQL queries to a relational database) enable computationally efficient yet private solutions.
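To give a feel for what "retrieving without revealing the query" can look like with a hash-based index, the sketch below fetches one bucket from a replicated database without either server learning which bucket was requested. It is the classic two-server XOR scheme for private information retrieval, not the PCBIR algorithms proposed here, and it assumes two non-colluding servers holding identical copies of fixed-length records. All function names are hypothetical.

```python
import secrets

def make_queries(db_size, index):
    """Client: build two random-looking bit vectors that differ only at `index`."""
    q1 = [secrets.randbelow(2) for _ in range(db_size)]
    q2 = list(q1)
    q2[index] ^= 1
    return q1, q2

def answer(database, query):
    """Server: XOR together every record whose query bit is set."""
    acc = bytes(len(database[0]))  # all-zero record of the right length
    for record, bit in zip(database, query):
        if bit:
            acc = bytes(a ^ b for a, b in zip(acc, record))
    return acc

def reconstruct(answer1, answer2):
    """Client: the XOR of both answers is exactly the requested record."""
    return bytes(a ^ b for a, b in zip(answer1, answer2))

# Toy "hash index": eight buckets, each holding a 4-byte payload.
database = [bytes([i] * 4) for i in range(8)]
q1, q2 = make_queries(len(database), index=5)
bucket = reconstruct(answer(database, q1), answer(database, q2))
```

Each query on its own is a uniformly random bit vector, so a single server gains no information about the index. The cost is that each server touches the whole database for every query.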

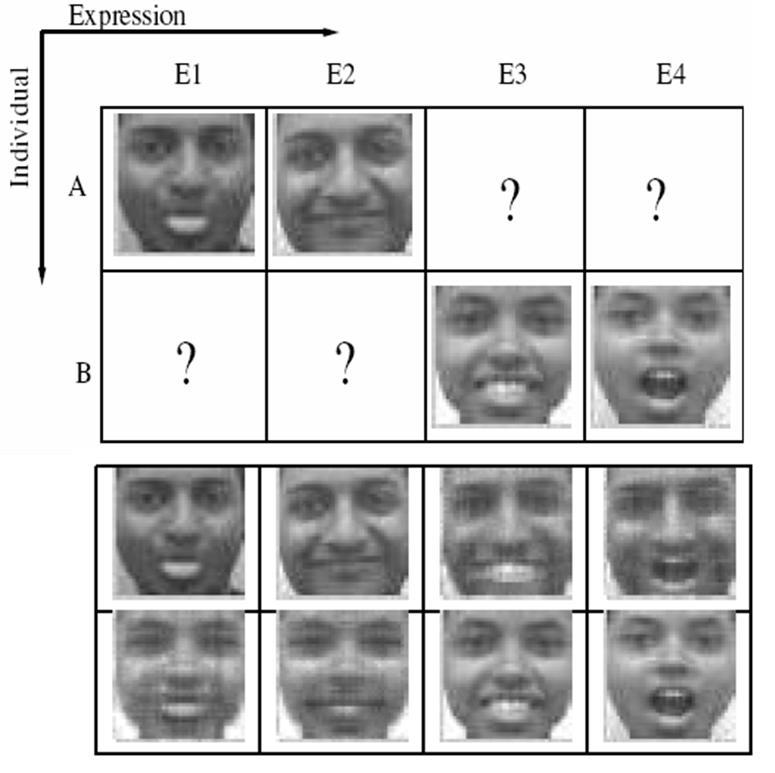

Blind Authentication: A Crypto-Biometric Verification Protocol

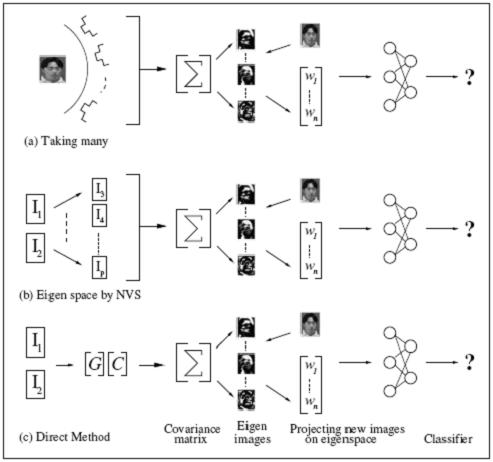

Biometric authentication provides a secure, non-repudiable and convenient method for identity verification. Hence it is well suited to both high-security and remote authentication applications. However, the assertions on security and non-repudiation are valid only if the integrity of the overall system is maintained. A hacker who gains physical or remote access to the system can read or modify the stored templates and successfully pose as, or deny access to, legitimate users. We propose a secure biometric authentication protocol over public networks using asymmetric encryption, which combines the advantages of biometric authentication with the security of public key cryptography. Blind Authentication provides non-repudiable identity verification while not revealing any additional information about the user to the server, or vice versa.
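The idea of computing on encrypted biometric data can be illustrated with a small sketch. The code below uses a toy Paillier cryptosystem (an additively homomorphic public key scheme) so that a server can evaluate a linear score over an encrypted feature vector without seeing the features. This is an assumption made for illustration: the choice of Paillier, the integer features and weights, and all function names are hypothetical and are not taken from the Blind Authentication protocol itself. The primes are far too small for real use.

```python
import math
import secrets

# Toy primes for illustration only; real keys need primes of 1024 bits or more.
P, Q = 104729, 1299709

def keygen(p=P, q=Q):
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to g = n + 1
    return n, (lam, mu)

def encrypt(n, m):
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    # With g = n + 1, g^m mod n^2 simplifies to 1 + m*n.
    return (1 + (m % n) * n) * pow(r, n, n2) % n2

def decrypt(n, private_key, c):
    lam, mu = private_key
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m  # map back to signed integers

def encrypted_score(n, encrypted_features, weights):
    """Server side: compute Enc(sum of w_i * x_i) without decrypting anything."""
    n2 = n * n
    acc = encrypt(n, 0)
    for c, w in zip(encrypted_features, weights):
        # Enc(x)^w = Enc(w * x); multiplying ciphertexts adds plaintexts.
        acc = acc * pow(c, w % n, n2) % n2
    return acc

n, private_key = keygen()
features = [12, 7, 30, 5]   # client's quantised biometric features (made up)
weights = [3, -2, 1, 4]     # server's classifier weights (made up)
ciphertexts = [encrypt(n, x) for x in features]
score = decrypt(n, private_key, encrypted_score(n, ciphertexts, weights))
```

A complete protocol would also have to keep the classifier parameters and the final score from leaking to the wrong party; the sketch only shows the homomorphic evaluation step.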

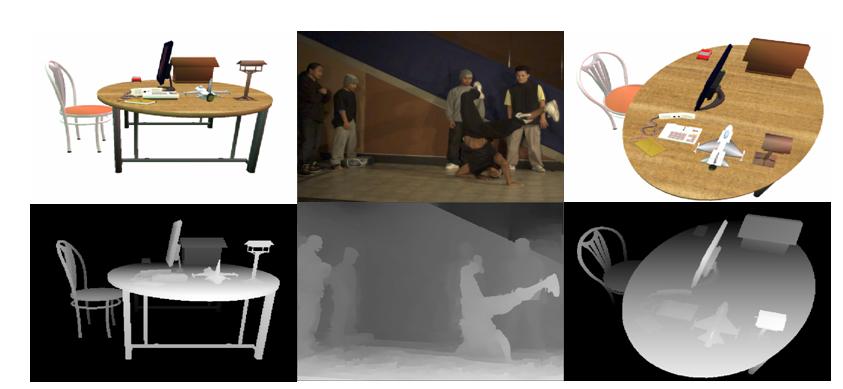

Privacy Preserving Video Surveillance

Widespread use of surveillance cameras in offices and other business establishments poses a significant threat to the privacy of employees and visitors. Introducing privacy and security into a practical surveillance system has been hindered by the enormous computational and communication overhead of existing solutions. In this work, we propose to utilize some of the inherent properties of image data to enable efficient and provably secure surveillance algorithms. Our method enables distributed secure processing and storage, while retaining the ability to reconstruct the original data in case of a legal requirement. The proposed paradigm is highly secure and much faster than traditional secure multiparty computation (SMC), making privacy preserving surveillance practical.
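As a rough illustration of distributed processing with later reconstruction, the sketch below splits each pixel into additive shares held by separate servers, lets each server run a linear operation (frame differencing against a background) on its own share, and recombines the results only at the end. Additive secret sharing is a generic technique used here as a stand-in; the modulus, the number of servers and the function names are assumptions, and this is not the sharing scheme developed in this work.

```python
import secrets

MODULUS = 65537  # assumed modulus, comfortably larger than any pixel difference

def share(pixels, num_servers=3):
    """Split each pixel into random shares that sum to it modulo MODULUS."""
    shares = [[secrets.randbelow(MODULUS) for _ in pixels]
              for _ in range(num_servers - 1)]
    last = []
    for i, p in enumerate(pixels):
        last.append((p - sum(s[i] for s in shares)) % MODULUS)
    return shares + [last]

def local_difference(frame_share, background_share):
    """Run on one server: subtract its background share from its frame share."""
    return [(f - b) % MODULUS for f, b in zip(frame_share, background_share)]

def reconstruct(shares):
    """Combine all shares and map the result back to signed integers."""
    values = [sum(column) % MODULUS for column in zip(*shares)]
    return [v - MODULUS if v > MODULUS // 2 else v for v in values]

frame = [10, 200, 35, 90]        # made-up pixel intensities
background = [12, 180, 35, 100]

frame_shares = share(frame)
background_shares = share(background)
# Each server works only on its own pair of shares.
difference_shares = [local_difference(f, b)
                     for f, b in zip(frame_shares, background_shares)]
difference = reconstruct(difference_shares)
```

Any single share is uniformly random, so one server alone learns nothing about the frame. Recombining all shares recovers the data, which mirrors the requirement that the original can be reconstructed when legally required.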

Fast and Secure Video Encryption

In recent years, tremendous growth in areas such as networking and digital multimedia has made multimedia distribution much simpler and enabled many new applications. Businesses and other organizations are now able to perform real-time audio and video conferencing, even over a non-dedicated channel. However, an eavesdropper can easily intercept and capture sensitive and valuable multimedia content travelling over a public channel, so multimedia security is essential for commercial use. This work focuses on new techniques for securing video data, especially for real-time applications. The major challenges in developing an ideal video encryption algorithm are providing good security against different types of attacks, adding no overhead to the MPEG compression process, and keeping encryption time low enough to support real-time transfer of videos.

Related Publications

Maneesh Upmanyu, Anoop M. Namboodiri, K. Srinathan and C. V. Jawahar - Efficient Biometric Verification in Encrypted Domain, Proceedings of the 3rd International Conference on Biometrics (ICB 2009), pp. 899-908, June 2-5, 2009, Alghero, Italy. [PDF]

Maneesh Upmanyu, Anoop M. Namboodiri, K. Srinathan and C. V. Jawahar - Efficient Privacy Preserving Video Surveillance, Proceedings of the 12th International Conference on Computer Vision (ICCV), 2009, Kyoto, Japan. [PDF]

C. Narsimha Raju, Gangula Umadevi, Kannan Srinathan and C. V. Jawahar - Fast and Secure Real-Time Video Encryption, IEEE Sixth Indian Conference on Computer Vision, Graphics & Image Processing (ICVGIP 2008), pp. 257-264, December 16-19, 2008, Bhubaneswar, India. [PDF]

C. Narsimha Raju, UmaDevi Ganugula, Srinathan Kannan and C. V. Jawahar - A Novel Video Encryption Technique Based on Secret Sharing, Proceedings of the IEEE International Conference on Image Processing (ICIP), October 12-15, 2008, San Diego, USA. [PDF]

Shashank J, Kowshik P, Kannan Srinathan and C. V. Jawahar - Private Content Based Image Retrieval, Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), June 24-26, 2008, Egan Convention Center, Anchorage, Alaska. [PDF]

C. Narsimha Raju, Kannan Srinathan and C. V. Jawahar - A Real-Time Video Encryption Exploiting the Distribution of the DCT coefficients, IEEE TENCON, November 18-21, 2008, Hyderabad, India. [PDF]

Associated People

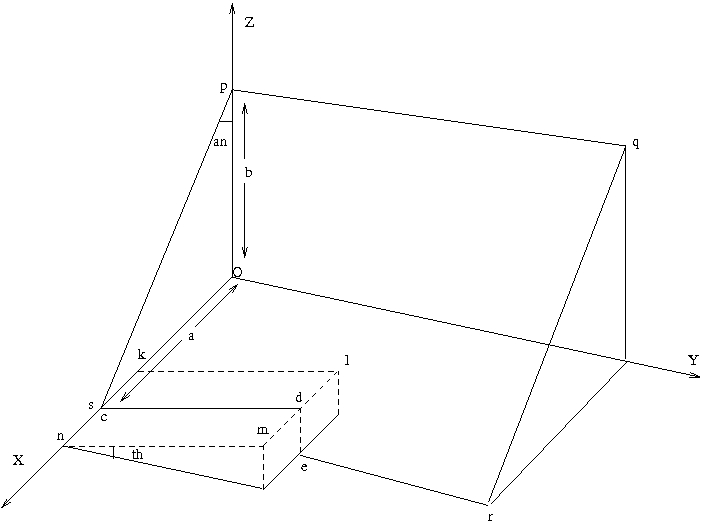

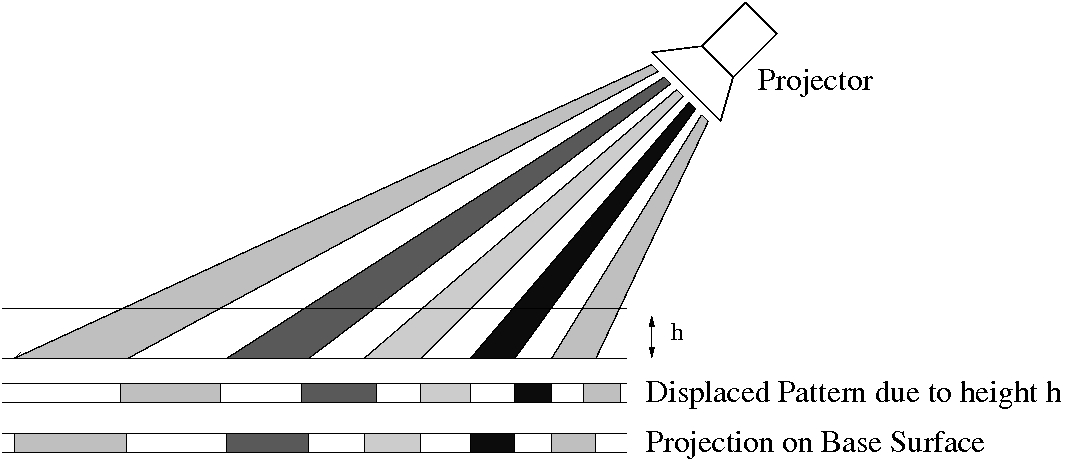

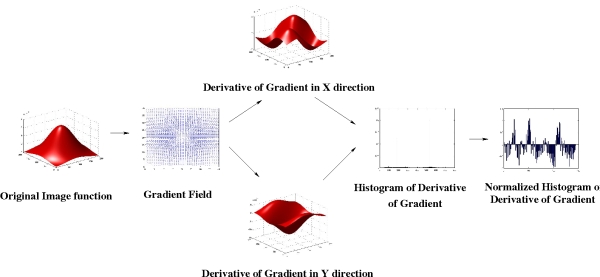

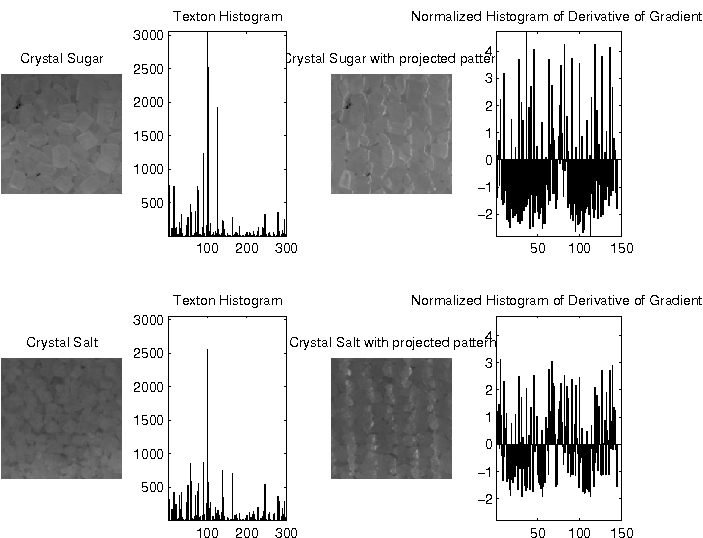

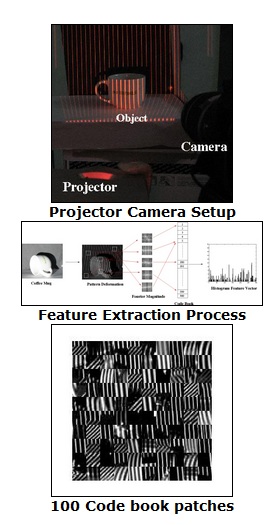

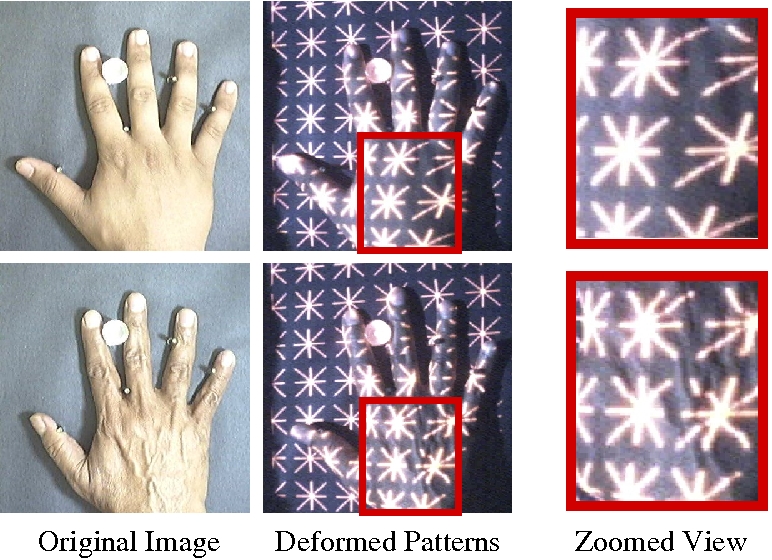

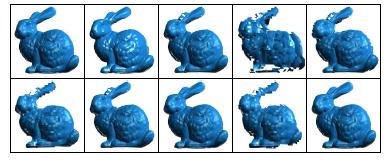

We propose the use of structured lighting patterns, which we refer to as projected texture, for the purpose of object recognition. The depth variations of the object induce deformations in the projected texture, and these deformations encode the shape information. The primary idea is to view the deformation pattern as a characteristic property of the object and use it directly for classification, instead of trying to recover the shape explicitly. To achieve this, we need to use an appropriate projection pattern and derive features that sufficiently characterize the deformations. The patterns required could be quite different depending on the nature of the object shape and its variation across objects.

Interest point operators (IPOs) are used extensively for reducing computational time and improving the accuracy of several complex vision tasks such as object recognition or scene analysis. SURF, SIFT, Harris, corner points, etc. are popular examples. Though there exist a large number of IPOs in the vision literature, most of them rely on low-level features such as color and edge orientation, making them sensitive to degradation in the images.

The scene representation using multiple depth images contains redundant descriptions of common parts. Our compression methods aim at exploiting this redundancy for a compact representation. The various kinds of compression algorithms tried are:
