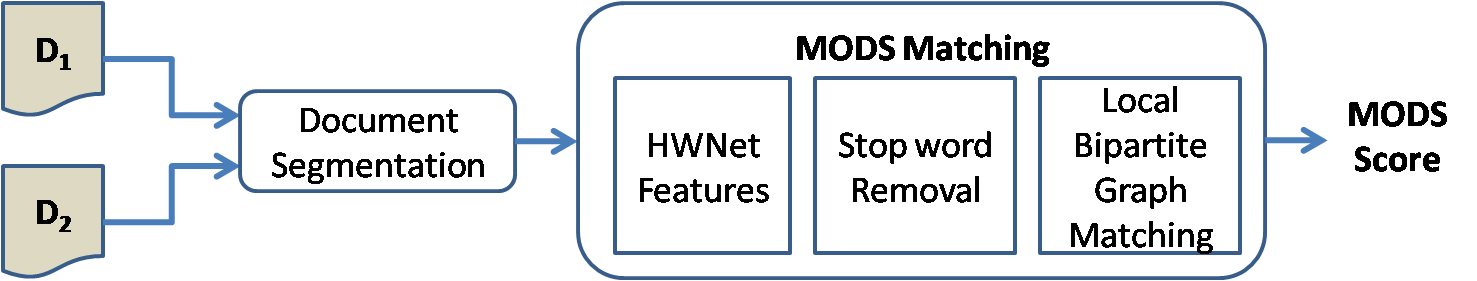

Deep Feature Embedding for Accurate Recognition and Retrieval of Handwritten Text

Abstract

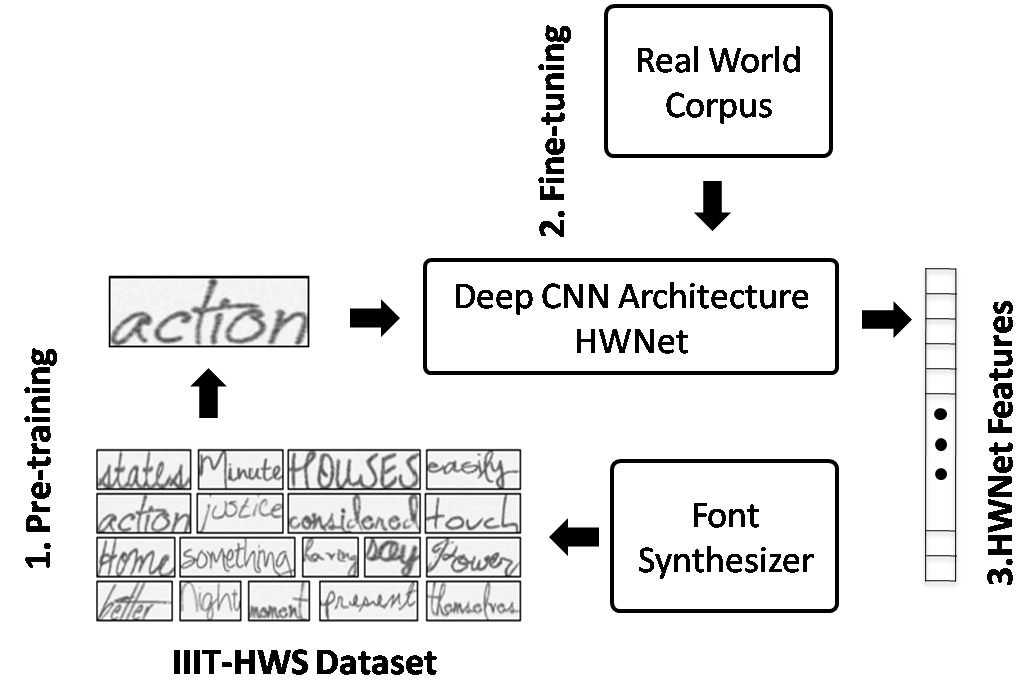

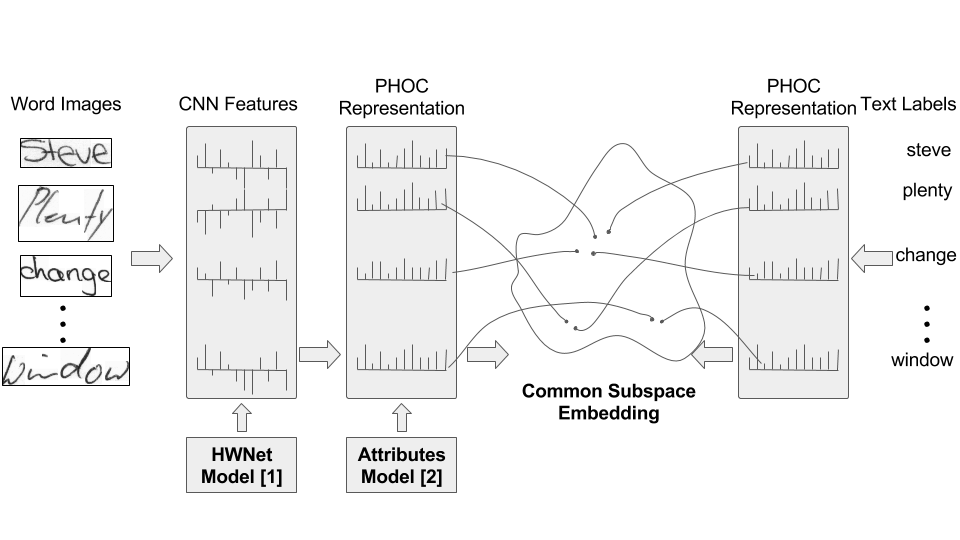

We propose a deep convolutional feature representation that achieves superior performance for word spotting and recognition on handwritten images. We focus on (i) enhancing the discriminative ability of the convolutional features using a reduced feature representation that can scale to large datasets, and (ii) enabling query-by-string by learning a common subspace for image and text using the embedded attribute framework. We present results on popular datasets such as the IAM corpus and historical document collections from the Bentham and George Washington pages. On the challenging IAM dataset, we achieve a state-of-the-art mAP of 91.58% on word spotting with textual queries and a mean word error rate of 6.69% on the word recognition task.

Major Contributions

- Improving the discriminative ability of deep CNN features using HWNet [1], achieving state-of-the-art results for handwritten word spotting and recognition on the IAM, George Washington and Bentham handwritten pages.

- Learning a reduced feature representation that can scale to large datasets.

- Enabling query-by-string by learning a common subspace for image and text using the embedded attribute framework.
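To make the query-by-string idea concrete: the embedded attribute framework of Almazán et al. [2] describes a text string with a pyramidal histogram of characters (PHOC), a binary vector that records which characters fall in which part of the word. The sketch below is a simplified illustration, not the configuration used in this work: it encodes unigrams only, and the alphabet (lowercase letters and digits), the pyramid levels (2 to 5) and the helper names `phoc` and `rank_by_cosine` are assumptions made for the example. In a full system the gallery vectors would be predicted from word images by the network; here string encodings stand in for them.

```python
import string

import numpy as np

# Assumed settings for illustration only.
ALPHABET = string.ascii_lowercase + string.digits
LEVELS = (2, 3, 4, 5)


def phoc(word, alphabet=ALPHABET, levels=LEVELS):
    """Simplified unigram PHOC: one binary block per pyramid region."""
    word = word.lower()
    n = len(word)
    char_index = {c: i for i, c in enumerate(alphabet)}
    vec = np.zeros(sum(levels) * len(alphabet), dtype=np.float32)
    offset = 0
    for level in levels:
        for region in range(level):
            # Work in integer units of 1 / (n * level) to avoid float ties.
            r_start, r_end = region * n, (region + 1) * n
            for k, ch in enumerate(word):
                if ch not in char_index:
                    continue  # characters outside the alphabet are skipped
                c_start, c_end = k * level, (k + 1) * level
                overlap = min(r_end, c_end) - max(r_start, c_start)
                # A character belongs to a region if at least half of its
                # extent lies inside that region.
                if 2 * overlap >= level:
                    vec[offset + char_index[ch]] = 1.0
            offset += len(alphabet)
    return vec


def rank_by_cosine(query, gallery):
    """Return gallery row indices sorted by decreasing cosine similarity."""
    q = query / (np.linalg.norm(query) + 1e-12)
    g = gallery / (np.linalg.norm(gallery, axis=1, keepdims=True) + 1e-12)
    return np.argsort(-(g @ q))


if __name__ == "__main__":
    words = ["the", "hat", "then"]
    # Stand-in gallery: in practice these rows would come from word images.
    gallery = np.stack([phoc(w) for w in words])
    order = rank_by_cosine(phoc("the"), gallery)
    print([words[i] for i in order])
```

Because the string and the image are both mapped into the same attribute space, a textual query reduces to a nearest-neighbour search over precomputed image embeddings, which is what makes retrieval over large collections practical.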

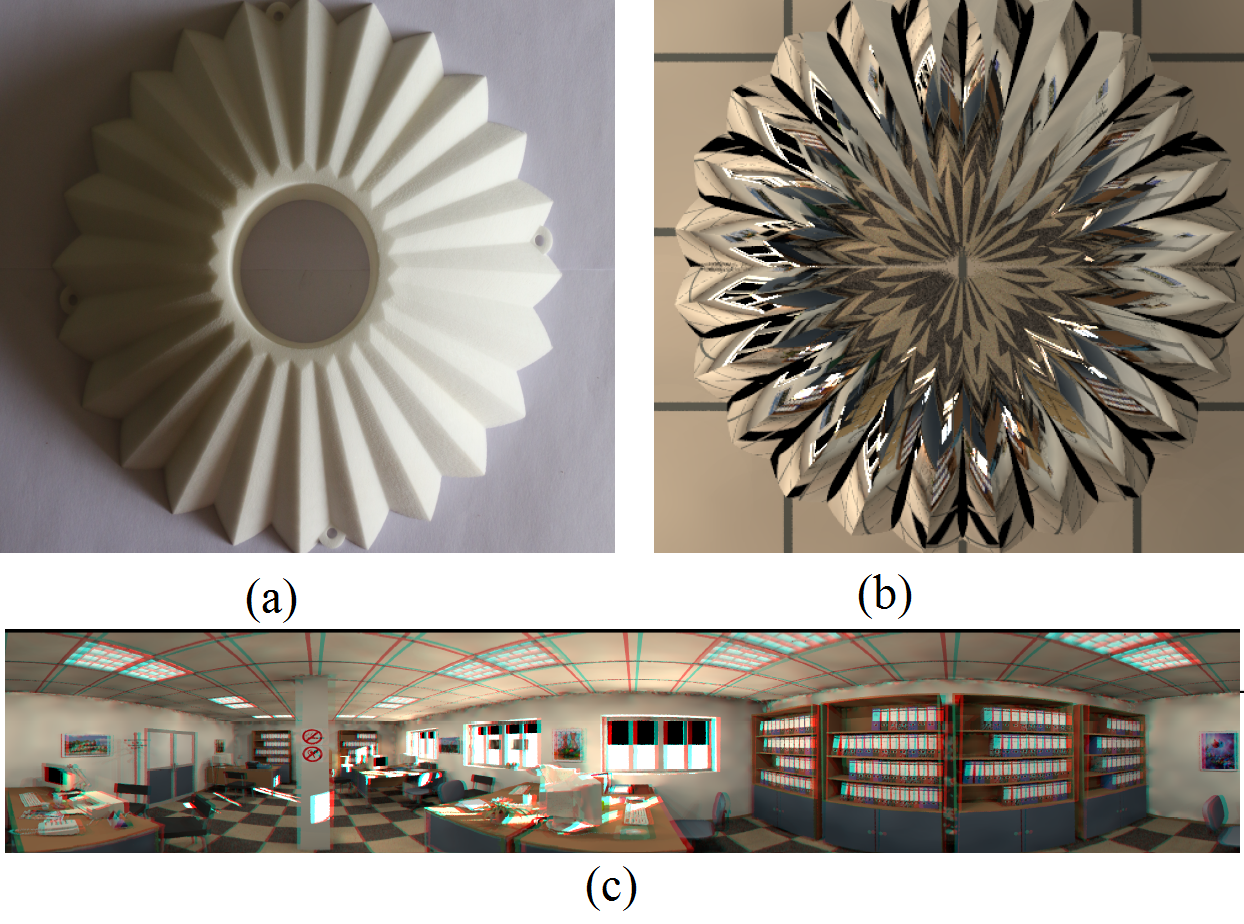

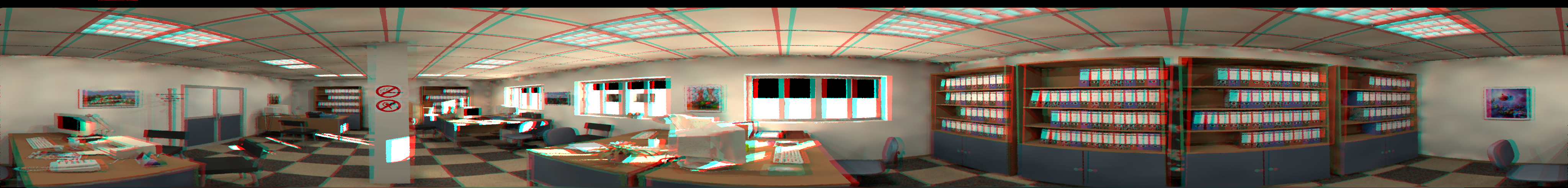

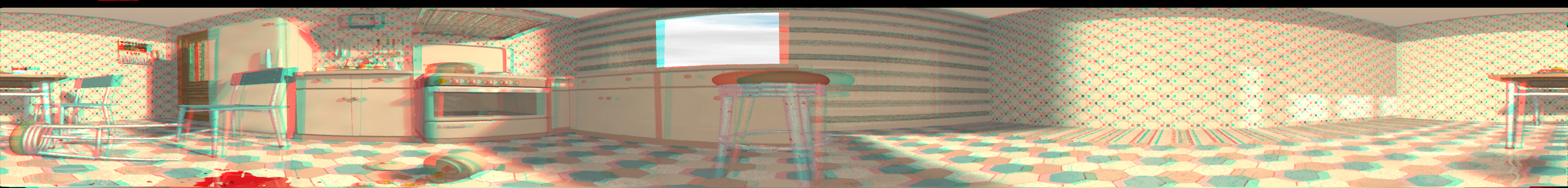

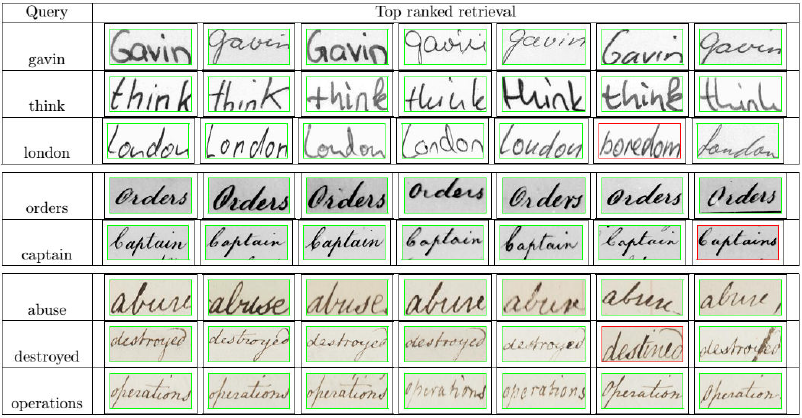

Qualitative Results

Related Publications

Praveen Krishnan, Kartik Dutta and C.V. Jawahar - Deep Feature Embedding for Accurate Recognition and Retrieval of Handwritten Text, 15th International Conference on Frontiers in Handwriting Recognition (ICFHR), Shenzhen, China, 2016. [PDF]

Codes

Models

References

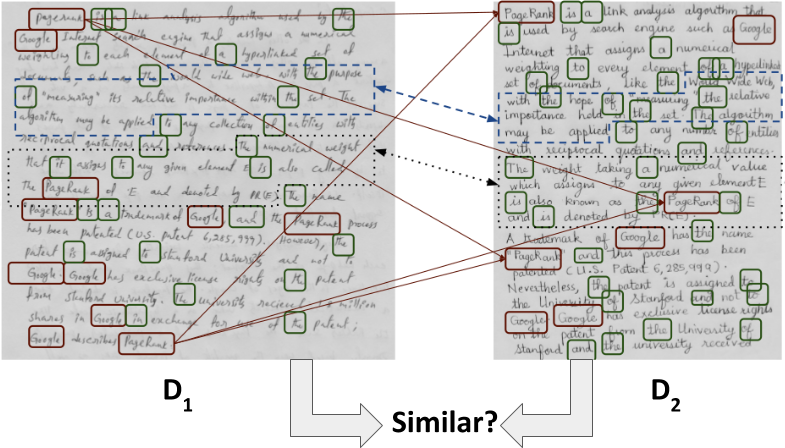

[1] Praveen Krishnan and C.V. Jawahar, "Matching Handwritten Document Images", ECCV, 2016.

[2] J. Almazán, A. Gordo, A. Fornés, and E. Valveny, "Word spotting and recognition with embedded attributes", PAMI, 2014.

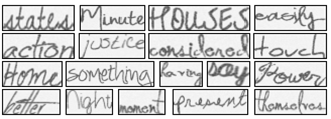

[3] Praveen Krishnan and C.V. Jawahar, "Generating Synthetic Data for Text Recognition", arXiv:1608.04224, 2016.

Associated People

- Praveen Krishnan *

- Kartik Dutta *

- C.V. Jawahar

* Equal Contribution